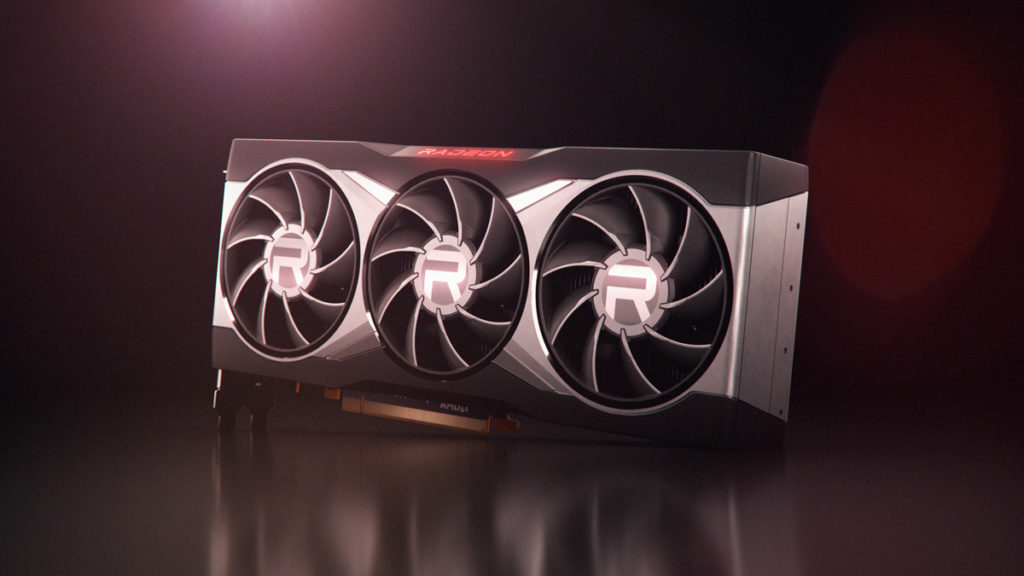

AMD shocked the graphics world today by unveiling its Radeon RX 6900 XT, Radeon RX 6800 XT, and Radeon RX 6800 cards, which – according to red team’s first-party benchmarks – can trade blows with NVIDIA’s entire GeForce RTX 30 Series lineup (GeForce RTX 3090 included).

Something that CEO Dr. Lisa Su and Scott Herkelman (CVP & GM) seemed awfully secretive about, however, is the Radeon RX 6000 Series' ray-tracing performance. While the RDNA 2 event showcased plenty of benchmarks that favored the Radeon RX 6900 XT, Radeon RX 6800 XT, and Radeon RX 6800 over the competition, none of them included ray-tracing metrics.

Luckily, some users on r/AMD have provided a bit of insight into that after scoping out AMD's new RDNA 2 landing page, which elaborates on the hardware component that Radeon RX 6000 Series GPUs leverage for ray tracing: the Ray Accelerator (RA). According to a diagram on that page, each Compute Unit houses a single RA.

“New to the AMD RDNA 2 compute unit is the implementation of a high-performance ray tracing acceleration architecture known as the Ray Accelerator,” a description reads. “The Ray Accelerator is specialized hardware that handles the intersection of rays providing an order of magnitude increase in intersection performance compared to a software implementation.”
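
AMD's description only says that the Ray Accelerator handles "the intersection of rays." For context, one common example of that kind of work is the ray versus axis-aligned bounding box "slab" test, which a software ray tracer runs many times per ray while walking its acceleration structure. The Python sketch below is a generic textbook version for illustration only, assuming boxes given as min/max corners; the function name is ours, and this is not AMD's or Microsoft's implementation.

```python
def ray_hits_box(origin, direction, box_min, box_max, t_max=float("inf")):
    """Slab test: True if the ray enters the axis-aligned box for some t in [0, t_max]."""
    t_near, t_far = 0.0, t_max
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of slabs, so it must start between them.
            if o < lo or o > hi:
                return False
            continue
        # Distances along the ray to the two planes bounding this axis.
        t0 = (lo - o) / d
        t1 = (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval where the ray is inside every slab seen so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


unit_box = ((-1, -1, -1), (1, 1, 1))
print(ray_hits_box((0, 0, -5), (0, 0, 1), *unit_box))   # ray pointing at the box
print(ray_hits_box((0, 0, -5), (0, 0, -1), *unit_box))  # ray pointing away from it
```

Each test is only a handful of multiplies and comparisons, but a single frame can require millions of them, which is why moving this work into dedicated hardware can pay off.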

Thanks to the following footnote, which elaborates on how AMD reached that figure, users on r/AMD (e.g., NegativeXyzen) have been able to draw early ray-tracing performance comparisons between the Radeon RX 6800 XT and NVIDIA’s GeForce RTX 30 Series.

“Measured by AMD engineering labs 8/17/2020 on an AMD RDNA 2 based graphics card, using the Procedural Geometry sample application from Microsoft’s DXR SDK, the AMD RDNA 2 based graphics card gets up to 13.8x speedup (471 FPS) using HW based raytracing vs using the Software DXR fallback layer (34 FPS) at the same clocks. Performance may vary.”
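
The headline multiplier follows directly from the two frame rates in the footnote. A quick check in Python (variable names are ours), also expressed as per-frame render time:

```python
# Frame rates quoted in AMD's footnote (Procedural Geometry sample, same clocks).
hw_fps = 471  # hardware ray tracing on the RDNA 2 card
sw_fps = 34   # Software DXR fallback layer

speedup = hw_fps / sw_fps
hw_frame_ms = 1000 / hw_fps  # milliseconds per frame with hardware ray tracing
sw_frame_ms = 1000 / sw_fps  # milliseconds per frame with the fallback layer

print(f"speedup: {speedup:.2f}x")                                  # speedup: 13.85x
print(f"frame time: {sw_frame_ms:.1f} ms -> {hw_frame_ms:.1f} ms")  # frame time: 29.4 ms -> 2.1 ms
```

The ratio works out to about 13.85x, in line with AMD's "up to 13.8x" claim.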

As always, there are plenty of factors that could affect performance, but here's how the Radeon RX 6800 XT's ray-tracing prowess looks vs. NVIDIA's GeForce RTX 3090 and GeForce RTX 3080, courtesy of a comparison shared by VideoCardz.

| GPU | RT Cores / Ray Accelerators | DXR Performance | Tensor Cores |
|---|---|---|---|
| NVIDIA GeForce RTX 3090 | 82 | 749 FPS | 328 |
| NVIDIA GeForce RTX 3080 | 68 | 630 FPS | 272 |
| NVIDIA GeForce RTX 3070 | 46 | not tested | 184 |
| AMD Radeon RX 6900 XT | 80 | not tested | – |
| AMD Radeon RX 6800 XT | 72 | 471 FPS | – |
| AMD Radeon RX 6800 | 60 | not tested | – |

Assuming that these numbers are accurate, the Radeon RX 6800 XT's 471 FPS is roughly 37 percent lower than the GeForce RTX 3090's 749 FPS and roughly 25 percent lower than the GeForce RTX 3080's 630 FPS. (Expressed as a percentage difference relative to the average of each pair, the gaps come to about 46 and 29 percent, respectively.)
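
Headline percentages like these depend on the baseline. Measuring the gap against the NVIDIA card's frame rate gives one figure. Measuring it against the average of the two cards (a symmetric "percent difference") gives a larger one. A short Python check using the table's DXR numbers (the function names are ours, for illustration):

```python
fps = {
    "GeForce RTX 3090": 749,
    "GeForce RTX 3080": 630,
    "Radeon RX 6800 XT": 471,
}

def percent_slower(candidate: float, reference: float) -> float:
    """How much lower candidate is, as a share of the reference card's result."""
    return (reference - candidate) / reference * 100

def percent_difference(a: float, b: float) -> float:
    """Symmetric percent difference: the gap relative to the mean of both values."""
    return abs(a - b) / ((a + b) / 2) * 100

amd = fps["Radeon RX 6800 XT"]
for name in ("GeForce RTX 3090", "GeForce RTX 3080"):
    ref = fps[name]
    print(f"vs {name}: {percent_slower(amd, ref):.1f}% slower, "
          f"{percent_difference(amd, ref):.1f}% difference")
# vs GeForce RTX 3090: 37.1% slower, 45.6% difference
# vs GeForce RTX 3080: 25.2% slower, 28.9% difference
```

The symmetric formula reproduces the 46 and 29 percent figures, but only the first formula answers "how much slower is the Radeon card?" in the everyday sense.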

We'll learn the truth next month when the Radeon RX 6800 XT and Radeon RX 6800 debut on November 18 (the Radeon RX 6900 XT isn't coming until December 8), but we're curious about how the disparity in ray-tracing performance might affect those of you who are in the market for a new GPU. Would you pay more strictly for improved ray tracing (and DLSS)? Let us know in the comments.