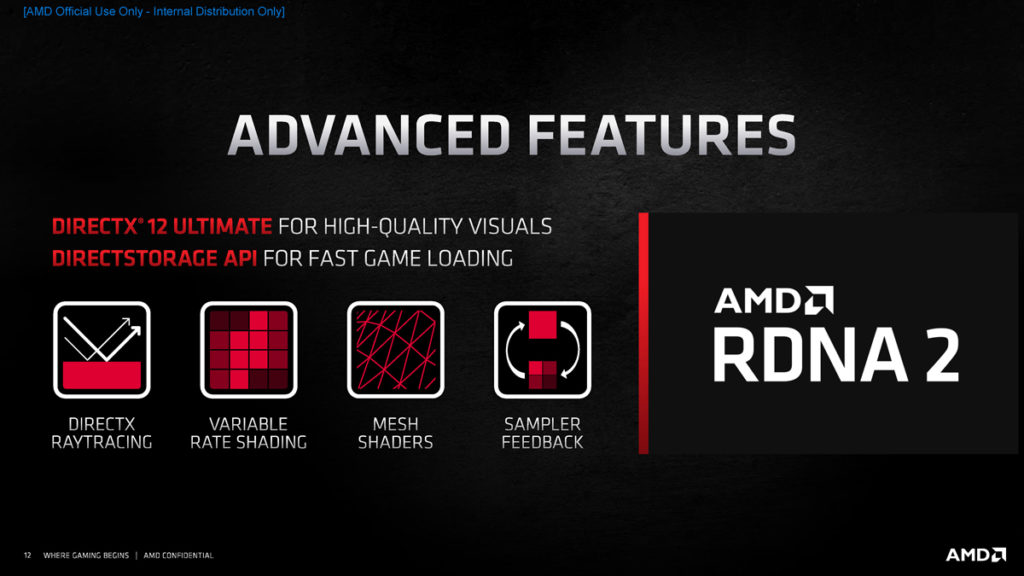

It’s too early to tell how AMD’s Radeon RX 6000 Series graphics cards will cope with ray tracing, but we can say for certain that they’ll offer broad support for the demanding rendering technique.

AMD’s marketing department made that abundantly clear in a new statement today. It confirmed that the Radeon RX 6900 XT, Radeon RX 6800 XT, and Radeon RX 6800 will support ray-traced titles built on industry standards, many of which use Microsoft’s DirectX Raytracing (DXR) API.

“AMD will support all ray tracing titles using industry-based standards, including the Microsoft DXR API and the upcoming Vulkan raytracing API,” the company told AdoredTV.

AMD also confirmed something that should be obvious: its RDNA 2 GPUs will not officially support NVIDIA’s RTX extensions. That comes as no surprise, since NVIDIA developed those extensions in-house.

“Games making use of proprietary raytracing APIs and extensions will not be supported,” the company noted.

This poses an interesting dilemma, particularly for those of you trying to choose between a GeForce and a Radeon card based on the level of ray-tracing support that NVIDIA and AMD can provide.

While Team Green appears to be the immediate winner (it was the first to bring ray tracing to the masses, after all), one question remains open: nobody seems to know how AMD’s Radeon RX 6000 Series will perform in ray-traced games such as Quake II RTX and Wolfenstein: Youngblood, which rely on NVIDIA’s custom Vulkan extensions.

Because the official Vulkan ray tracing extensions have not been released yet, NVIDIA implemented ray tracing in those games with its own custom Vulkan extensions. AMD could write Vulkan extensions of its own to bring ray tracing to these titles. However, its recent statement suggests that the ray-tracing options in these games will simply be disabled on Radeon cards.

We’re wondering whether developers will actually go back and update their titles so Radeon RX 6000 Series users can enjoy them at their intended fidelity. There’s also the slimmer possibility of third-party workarounds (e.g., a mod or wrapper) that might enable RTX effects on red team’s cards.

If AMD’s ray-tracing support does manage to reach parity with NVIDIA, it’ll be interesting to see how the latter responds.

Editor’s Note: The above paragraphs were edited to better clarify the distinction between the methods by which ray tracing can be brought into games and the options available to enable it.