Samsung has announced that it is the first company to develop HBM-PIM (high bandwidth memory with processing-in-memory), a type of memory that integrates AI processing to speed up workloads in HPC systems, data centers, and AI-enabled mobile applications. Presently, most computing systems are based on the von Neumann architecture. Introduced in 1945, it keeps the processor and memory separate and processes data sequentially, which can result in bottlenecks and higher power usage. HBM-PIM places a DRAM-optimized AI engine inside each memory bank to help avoid this, more than doubling system performance and reducing energy consumption by more than 70 percent, according to Samsung. Samsung says that this technology can be incorporated into existing systems because it does not require any software or hardware changes.

Original Press Release

Samsung Electronics, the world leader in advanced memory technology, today announced that it has developed the industry’s first High Bandwidth Memory (HBM) integrated with artificial intelligence (AI) processing power — the HBM-PIM. The new processing-in-memory (PIM) architecture brings powerful AI computing capabilities inside high-performance memory, to accelerate large-scale processing in data centers, high-performance computing (HPC) systems, and AI-enabled mobile applications.

Kwangil Park, senior vice president of Memory Product Planning at Samsung Electronics, stated, “Our groundbreaking HBM-PIM is the industry’s first programmable PIM solution tailored for diverse AI-driven workloads such as HPC, training, and inference. We plan to build upon this breakthrough by further collaborating with AI solution providers for even more advanced PIM-powered applications.”

Rick Stevens, Argonne’s Associate Laboratory Director for Computing, Environment and Life Sciences, commented, “I’m delighted to see that Samsung is addressing the memory bandwidth/power challenges for HPC and AI computing. HBM-PIM design has demonstrated impressive performance and power gains on important classes of AI applications, so we look forward to working together to evaluate its performance on additional problems of interest to Argonne National Laboratory.”

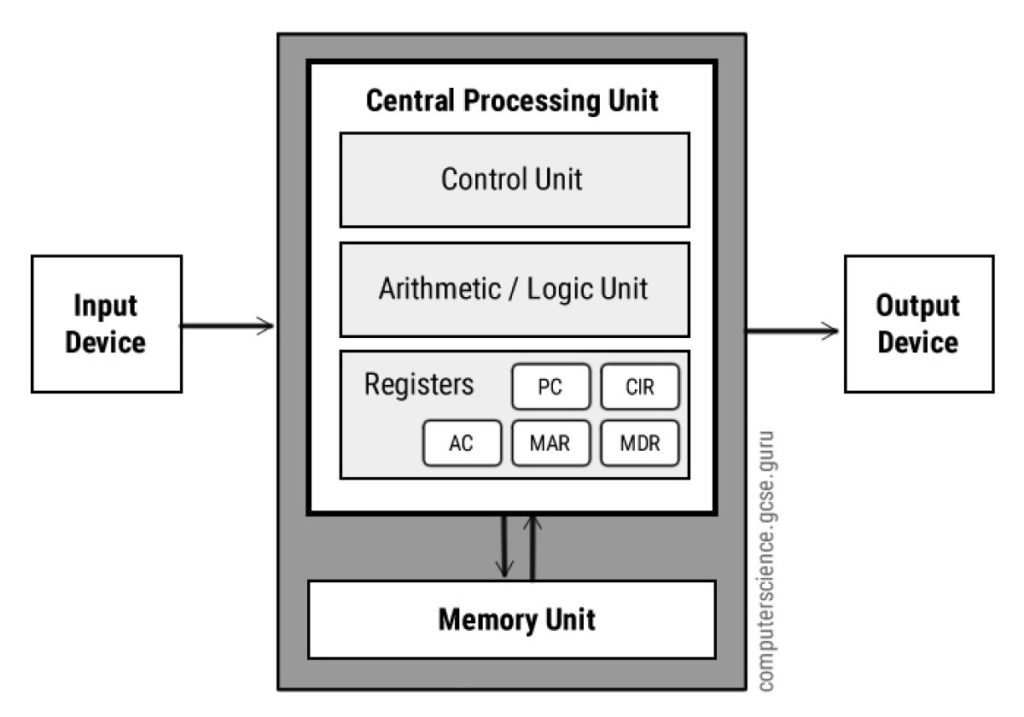

Most of today’s computing systems are based on the von Neumann architecture, which uses separate processor and memory units to carry out millions of intricate data processing tasks. This sequential processing approach requires data to constantly move back and forth between the two, resulting in a system-slowing bottleneck, especially when handling ever-increasing volumes of data.

Instead, the HBM-PIM brings processing power directly to where the data is stored by placing a DRAM-optimized AI engine inside each memory bank — a storage sub-unit — enabling parallel processing and minimizing data movement. When applied to Samsung’s existing HBM2 Aquabolt solution, the new architecture is able to deliver over twice the system performance while reducing energy consumption by more than 70%. The HBM-PIM also does not require any hardware or software changes, allowing faster integration into existing systems.
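To make the data-movement argument concrete, here is a minimal toy sketch in Python. It is not Samsung's design or API: the function names (`host_side_sum`, `in_bank_sum`), the four-bank layout, and the "words moved" counter are all hypothetical, chosen only to illustrate why computing inside each bank can cut traffic between memory and the processor.

```python
from typing import List, Tuple

# Hypothetical model: a "bank" is just a list of stored values.
Bank = List[float]


def host_side_sum(banks: List[Bank]) -> Tuple[float, int]:
    """Conventional path: every stored word crosses the bus to the processor."""
    total = 0.0
    words_moved = 0
    for bank in banks:
        for value in bank:
            words_moved += 1  # one bus transfer per stored word
            total += value
    return total, words_moved


def in_bank_sum(banks: List[Bank]) -> Tuple[float, int]:
    """PIM-style path: each bank reduces its own data, so only one
    partial result per bank crosses the bus."""
    # Conceptually these per-bank sums would run in parallel inside memory;
    # here they are simply computed one after another.
    partials = [sum(bank) for bank in banks]
    words_moved = len(partials)
    return sum(partials), words_moved


# Assumed layout for illustration: 4 banks of 16 values each (0.0 .. 63.0).
banks = [[float(i + 16 * b) for i in range(16)] for b in range(4)]

host_total, host_moved = host_side_sum(banks)
pim_total, pim_moved = in_bank_sum(banks)

print(f"host-side: total={host_total}, words moved={host_moved}")
print(f"in-bank:   total={pim_total}, words moved={pim_moved}")
```

Both paths produce the same sum, but the in-bank version moves one word per bank instead of one word per stored value. The sketch only counts transfers; it says nothing about the actual performance or energy figures Samsung reports.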

Samsung’s paper on the HBM-PIM has been selected for presentation at the renowned International Solid-State Circuits Virtual Conference (ISSCC) held through Feb. 22. Samsung’s HBM-PIM is now being tested inside AI accelerators by leading AI solution partners, with all validations expected to be completed within the first half of this year.