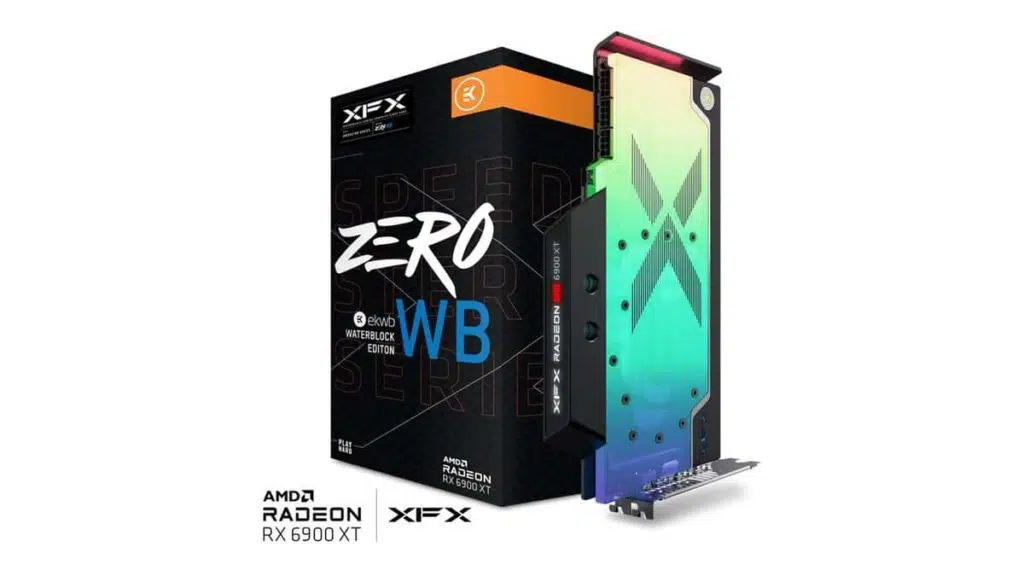

XFX has partnered with EKWB to create the Speedster Zero Radeon RX 6900 XT. XFX says that the two-slot card can be overclocked to 3 GHz. The claim is plausible, as other Radeon RX 6900 XT cards have exceeded 3 GHz. “The base of the block is CNC-machined out of nickel-plated high-grade copper, while its top is CNC-machined out of glass-like cast Acrylic.” The block comes with pre-installed brass standoffs and high-quality EPDM O-rings. ARGB lighting is also present.

The card features a number of upgrades over the reference design. Power delivery has been increased from an 11+1 to a 14+2 phase VRM design, and a dual BIOS is included. The PCB features 3x 8-pin power connectors, and while TGP isn’t listed, it could reach well over 400 watts when overclocked. The base clock is up to 2,200 MHz and the boost clock up to 2,525 MHz. An 850-watt PSU is required, while a 1,000-watt PSU is recommended. Display outputs are 3x DP 1.4 with DSC, 1x HDMI 2.1 with VRR and FRL, and 1x USB-C. Pricing has not been announced.
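For a rough sense of those numbers, the short sketch below works out the in-spec power ceiling for three 8-pin connectors and the size of the jump from the rated boost clock to 3 GHz. The 75-watt slot and 150-watt 8-pin limits come from the PCI Express specification rather than from XFX, and the function names are purely illustrative. It is a back-of-the-envelope estimate, not a statement of the card's actual TGP.

```python
# Power limits per the PCI Express specification (assumed here; not stated by XFX).
PCIE_SLOT_W = 75    # maximum draw through the x16 slot
EIGHT_PIN_W = 150   # maximum draw per 8-pin PCIe power connector


def max_board_power(eight_pin_count: int) -> int:
    """In-spec power ceiling for a card with the given number of 8-pin connectors."""
    return PCIE_SLOT_W + eight_pin_count * EIGHT_PIN_W


def overclock_headroom(boost_mhz: float, target_mhz: float) -> float:
    """Percentage increase needed to go from the rated boost clock to the target clock."""
    return (target_mhz - boost_mhz) / boost_mhz * 100


print(max_board_power(3))                          # 525
print(round(overclock_headroom(2525, 3000), 1))    # 18.8
```

By that estimate, the connectors allow up to 525 watts within spec, which leaves room for a draw above 400 watts, and reaching 3 GHz would mean running roughly 19% above the rated boost clock.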

The 14+2 phase VRM design and dual BIOS increase overclocking potential and add a layer of safety if a BIOS gets corrupted. Out of the box, the GPU boost clock is rated at 2,525 MHz, but XFX promises it can surpass 3,000 MHz when overclocked.