TechSpot has shared new benchmarks that suggest the AMD Radeon RX 6500 XT could be a disappointment for gamers who will be running the graphics card in PCIe 3.0 mode.

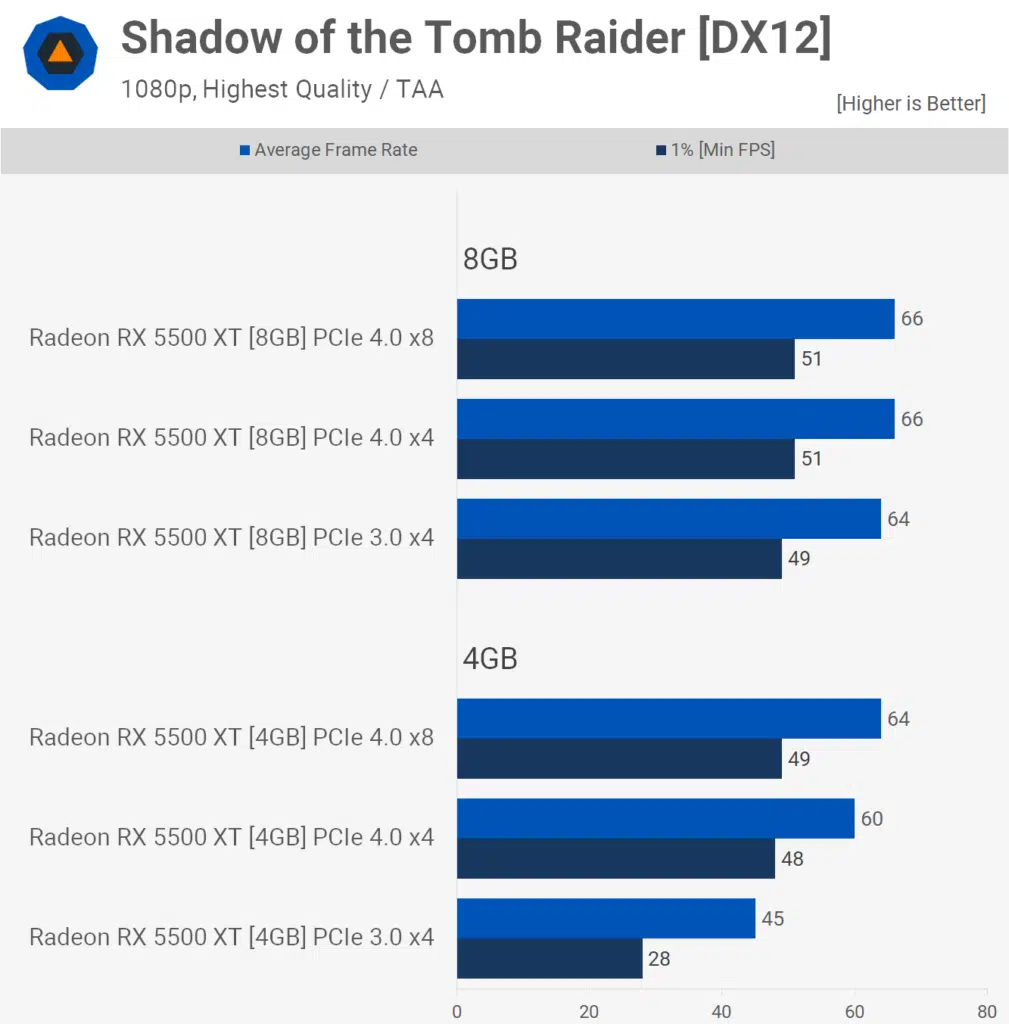

While third-party benchmarks for the Radeon RX 6500 XT will not be published until later this week, TechSpot has previewed the likely performance of AMD’s new $200 budget card by benchmarking its predecessor, the Radeon RX 5500 XT, under various PCIe bandwidth configurations. If the two cards are as similar as TechSpot believes, Radeon RX 6500 XT owners may see drops of as much as 43% in 1% minimum FPS in some games, such as Shadow of the Tomb Raider, when the card runs in PCIe 3.0 mode. Because the card's link is only x4, PCIe 3.0 mode halves its bandwidth from roughly 8 GB/s to 4 GB/s.
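The 4 GB/s and 8 GB/s figures follow from the per-lane signaling rates: PCIe 3.0 runs at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, and both use 128b/130b encoding. The Python sketch below works through that arithmetic for an x4 link. It is only an approximation: it models encoding overhead alone and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function name `link_bandwidth_gbs` is hypothetical and used only for illustration.

```python
# Approximate one-direction bandwidth of a PCIe link.
# Assumption: only 128b/130b line-encoding overhead is modeled;
# packet/protocol overhead is ignored, so real throughput is lower.

# Raw per-lane transfer rates in gigatransfers per second (GT/s).
TRANSFER_RATE_GTS = {
    "3.0": 8.0,
    "4.0": 16.0,
}

# PCIe 3.0 and 4.0 both carry 128 payload bits per 130 transmitted bits.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gbs(gen: str, lanes: int) -> float:
    """Return the approximate one-direction bandwidth in GB/s (hypothetical helper)."""
    gigabits_per_lane = TRANSFER_RATE_GTS[gen] * ENCODING_EFFICIENCY
    return gigabits_per_lane * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in ("4.0", "3.0"):
        print(f"PCIe {gen} x4: {link_bandwidth_gbs(gen, 4):.2f} GB/s")
```

Running it prints about 7.88 GB/s for PCIe 4.0 x4 and 3.94 GB/s for PCIe 3.0 x4. Those values round to the 8 GB/s and 4 GB/s quoted above, which shows that dropping to PCIe 3.0 halves the bandwidth of an x4 card.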

The benchmarks suggest that AMD could have partially avoided this problem by giving the Radeon RX 6500 XT as much memory as the 8 GB version of its predecessor. Under reduced PCIe bandwidth, the 8 GB Radeon RX 5500 XT lost considerably less performance than the 4 GB version, presumably because a card with more VRAM has to fetch less data from system memory over the PCIe bus.

“[…] limiting the 4GB model to even PCIe 4.0 x4 heavily reduced performance, suggesting that out of the box the 6500 XT could be in many instances limited primarily by the PCIe connection, which is pretty shocking,” concluded TechSpot. “It also strongly suggests that installing the 6500 XT into a system that only supports PCI Express 3.0 could in many instances be devastating to performance.”

“At this point we feel all reviewers should be mindful of this and make sure to test the 6500 XT in PCIe 3.0 mode. There’s no excuse not to do this as you can simply toggle between 3.0 and 4.0 in the BIOS. Of course, AMD is hoping reviewers overlook this and with most now testing on PCIe 4.0 systems, the 6500 XT might end up looking a lot better than it’s really going to be for users.”

Announced earlier this month, the Radeon RX 6500 XT has 1,024 stream processors, 4 GB of memory, a boost clock of up to 2,815 MHz, and a total board power (TBP) of 107 W. According to AMD, it delivers up to 35 percent faster gaming performance on average at 1080p with high settings than the competition’s offerings.

Source: TechSpot