A research paper from NVIDIA describes an architectural technique that improved ray tracing performance by up to 20% in simulation and could find its way into future graphics cards. The authors begin by explaining that ray tracing “applications have naturally high thread divergence, low warp occupancy and are limited by memory latency,” and propose an “architectural enhancement called Subwarp Interleaving” as one way to recover performance.

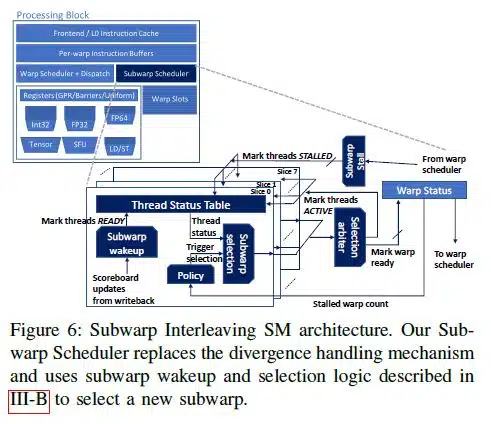

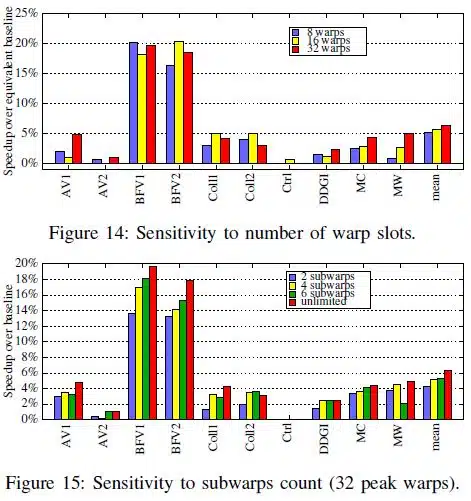

Subwarp Interleaving allows for fine-grained interleaved execution of diverged paths within a warp with the goal of increasing hardware utilization and reducing warp latency. However, notwithstanding the promise shown by early microbenchmark studies and an average performance upside of 6.3% (up to 20%) on a simulator across a suite of raytracing application traces, the Subwarp Interleaving design feature has shortcomings that preclude its near-term implementation.
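To make "interleaved execution of diverged paths" concrete, here is a deliberately simplified toy model in Python. It is our own illustration, not NVIDIA's simulator or hardware design. A single warp's threads have split into two paths, and each path issues a load and then immediately uses the result. The 20-cycle latency, the one-instruction-per-cycle issue rule, and the `simulate` function are all hypothetical assumptions. Run serially, each path's load stall is fully exposed. Interleaved, one path's load latency overlaps with the other path's.

```python
LOAD_LATENCY = 20  # hypothetical load-to-use latency in cycles (assumption)


def simulate(paths, interleave, load_latency=LOAD_LATENCY):
    """Count cycles for one warp whose threads diverged into `paths` (toy model).

    Each path is a list of "ALU" (next instruction may issue next cycle) or
    "LD" (next instruction on that path waits `load_latency` cycles).
    At most one instruction issues per cycle.
    """
    n = len(paths)
    pc = [0] * n        # next instruction index on each path
    ready_at = [0] * n  # first cycle at which each path may issue again
    cur = 0             # path currently being executed
    cycle = 0

    def done(i):
        return pc[i] >= len(paths[i])

    def can_issue(i):
        return not done(i) and ready_at[i] <= cycle

    while not all(done(i) for i in range(n)):
        if not can_issue(cur):
            if interleave:
                # Interleaved: switch to any other diverged path that can issue
                # now instead of idling on the stalled one.
                for i in range(n):
                    if can_issue(i):
                        cur = i
                        break
            elif done(cur):
                # Serialized: move to the next path only after this one finishes.
                cur += 1
                continue
        if can_issue(cur):
            op = paths[cur][pc[cur]]
            pc[cur] += 1
            ready_at[cur] = cycle + (load_latency if op == "LD" else 1)
        # Advance one cycle; a cycle in which nothing issued is a pipeline bubble.
        cycle += 1
    return cycle


# Two diverged paths, each doing a load followed by an instruction that uses it.
paths = [["LD", "ALU"], ["LD", "ALU"]]
print(simulate(paths, interleave=False))  # 42
print(simulate(paths, interleave=True))   # 22
```

In this idealized case interleaving nearly halves the cycle count, but that is a property of the toy model, not a prediction. On its suite of simulated raytracing traces, the paper reports a 6.3% average and a 20% maximum gain.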

The authors also explain why graphics remains so important: “Video games are a staple in today’s culture, and though GPUs are used for a wide variety of applications, they are still designed with graphics in mind.” They reiterate the importance of GPUs more broadly, pointing to billions of dollars in revenue across platforms ranging from the office and the home to data centers, cloud services, and mobile devices. Although the paper focuses on ray tracing, the authors briefly note that “any divergent GPU program, including GPU computing programs, with long stalls and low occupancy might also benefit from our work.” This follows earlier statements from NVIDIA that ray tracing could be applied to more than shadows and lighting, for example to physics in games and simulations.

In its conclusion, the paper reiterates the need for new ways to deal with highly divergent warps and long memory latency. NVIDIA pioneered hardware-accelerated ray tracing in the consumer market with its first RTX GPUs, and AMD and Intel now offer their own solutions for compute-heavy workloads such as ray tracing. Only time will tell how the technology evolves, but with so much at stake, all three companies are clearly working hard on new solutions. The research paper is available as a PDF.

Conclusion

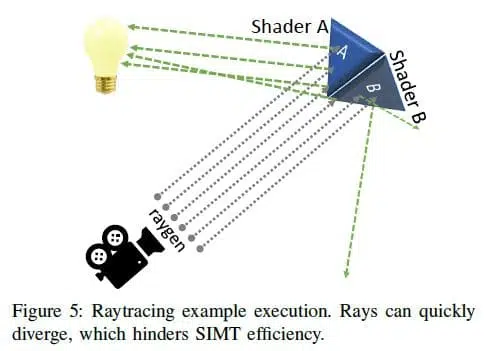

While real-time raytracing produces more realistic and immersive images than rasterization techniques, the added realism comes with a cost. Raytracing kernels stress GPUs in three fundamental ways: they are highly divergent, they suffer from poor occupancy, and warps routinely stall waiting for long latency operations to complete. Ordinarily, GPU schedulers can hide long latency stalls by switching to other ready warps, but raytracing kernels often have insufficient active warps to hide latency. Subwarp Interleaving is a new technique that aims to reduce pipeline bubbles in raytracing kernels. When a long latency operation stalls a warp and the GPU’s warp scheduler cannot find an active warp to switch to, a subwarp scheduler can instead switch execution to another divergent subwarp of the current warp. We present architectural extensions to an NVIDIA Turing-like GPU, which leverages many of the features inherent to the baseline architecture for supporting independent thread scheduling. SI substantially reduces the exposed load-to-use stalls by 10.5%. Our evaluation shows that secondary performance limiters cap the potential of SI in current applications and architectures. While Subwarp Interleaving shows some compelling performance gains (6.3% average, 20% maximum) across a suite of raytracing application traces, its narrow usage and design complexity limit its attractiveness to current GPU architectures. However, the evolution of application demands and their control behavior may motivate future examination of latency tolerance and divergence mitigation approaches such as Subwarp Interleaving.
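The conclusion describes a two-level policy: the ordinary warp scheduler gets first pick, and the subwarp scheduler steps in only when no warp is ready to issue. The Python sketch below models just that per-cycle decision. The `Warp` and `Subwarp` classes and the `pick_next` function are hypothetical, and real hardware tracks much more state than a single `stalled` flag per diverged path.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Subwarp:
    """One diverged path of a warp (hypothetical model)."""
    stalled: bool  # True while waiting on a long-latency operation


@dataclass
class Warp:
    subwarps: List[Subwarp]
    current: int = 0  # index of the subwarp this warp is currently executing

    def ready(self) -> bool:
        # A warp can issue only if the path it is currently executing is not stalled.
        return not self.subwarps[self.current].stalled


def pick_next(warps: List[Warp], cur: int) -> Optional[Tuple[int, int]]:
    """Return (warp index, subwarp index) to issue this cycle, or None for a bubble."""
    # Level 1: ordinary warp scheduling. Keep the current warp if it can issue,
    # otherwise switch to any other ready warp.
    if warps[cur].ready():
        return cur, warps[cur].current
    for w, warp in enumerate(warps):
        if warp.ready():
            return w, warp.current
    # Level 2 (Subwarp Interleaving): no warp is ready, so switch the stalled
    # warp to another of its diverged subwarps that is able to issue.
    warp = warps[cur]
    for s, sub in enumerate(warp.subwarps):
        if s != warp.current and not sub.stalled:
            warp.current = s
            return cur, s
    return None  # nothing can issue: a pipeline bubble


# Warp 0 has diverged into two paths: path 0 waits on a load, path 1 is ready.
# Warp 1 is also stalled, so the warp scheduler has nothing to switch to.
warps = [Warp([Subwarp(stalled=True), Subwarp(stalled=False)]),
         Warp([Subwarp(stalled=True)])]
print(pick_next(warps, cur=0))  # (0, 1): a subwarp switch within warp 0
```

If warp 1 were ready, `pick_next` would return `(1, 0)` and leave warp 0's current path unchanged. That ordering reflects the paper's framing of subwarp switching as a fallback for when there are too few active warps to hide latency.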

Sources: NVIDIA (1,2) (via Tom’s Hardware), PC Gamer