This may come as a major shocker, but it turns out that Apple had its Reality Distortion Field tuned a little too high when it claimed that the new Mac Studio with the M1 Ultra chip could compete with, or even beat, NVIDIA’s flagship GPU in performance. The Verge has shared a review of the new machine that shows how the M1 Ultra falls far behind the GeForce RTX 3090 in both GPU compute performance and games, such as Shadow of the Tomb Raider, which ran 30 FPS faster on Ampere hardware. Will Apple next claim that the Mac Studio 2 with M2 Ultra is faster than the GeForce RTX 4090 Ti? Stay tuned.

APPLE MAC STUDIO REVIEW: FINALLY (The Verge)

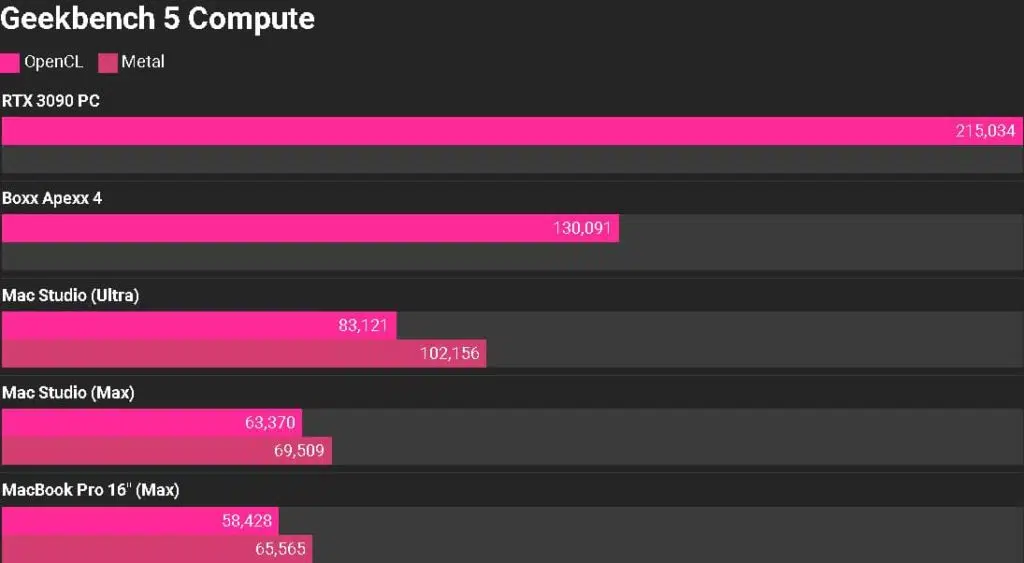

Apple, in its keynote, claimed that the M1 Ultra would outperform Nvidia’s RTX 3090. I have no idea where Apple’s getting that from. We ran Geekbench Compute, which tests the power of a system’s GPU, on both the Mac Studio and a gaming PC with an RTX 3090, a Core i9-10900, and 64GB of RAM. And the Mac Studio got… destroyed. It got less than half the score that the RTX 3090 did on that test — not only is it not beating Nvidia’s chip, but it’s not even coming close.

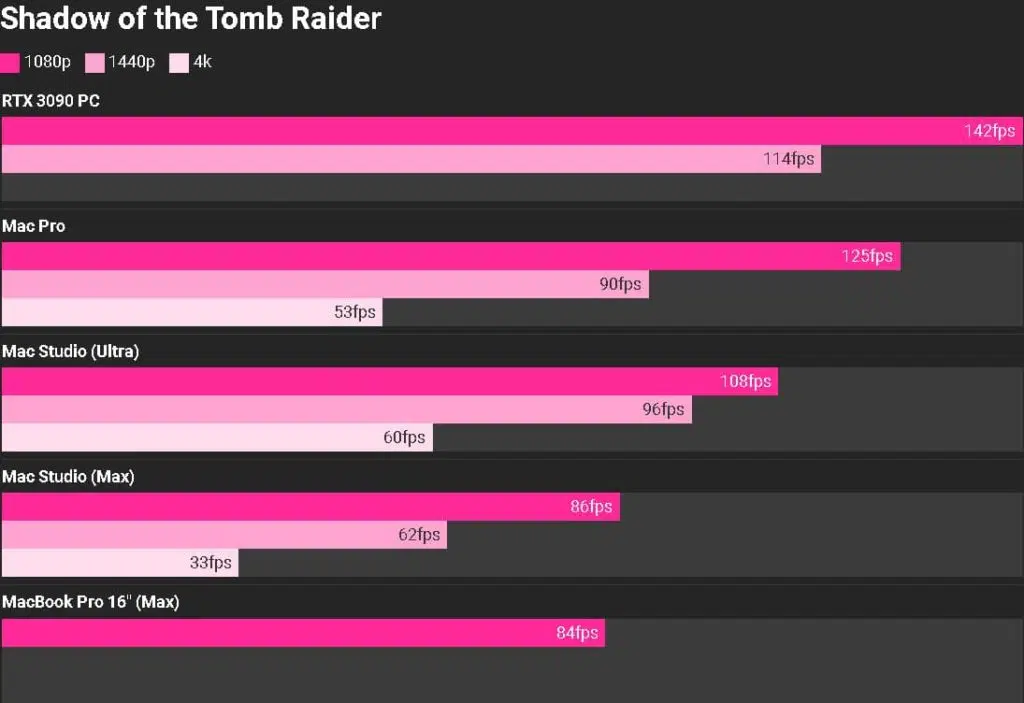

On the Shadow of the Tomb Raider benchmark, the RTX was also a solid 30 frames per second faster. Now, this is Apple gaming, of course, so Tomb Raider was not a perfect or even particularly good experience: there was substantial, noticeable micro stutter at every resolution we tried. This is not at all a computer that anyone would buy for gaming. But it does emphasize that if you’re running a computing load that relies primarily on a heavy-duty GPU, the Mac Studio is probably not the best choice.