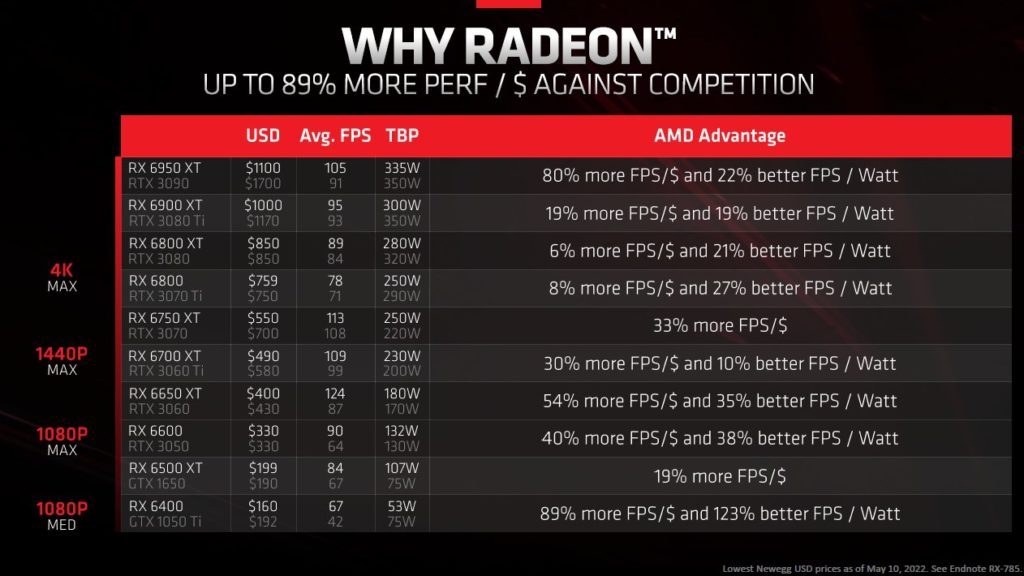

Frank Azor, Chief Architect of Gaming Solutions & Marketing at AMD, has tweeted a chart suggesting that the red team's Radeon RX 6000 Series graphics cards offer gamers better performance per dollar than NVIDIA's competing GeForce products. Among the comparisons in the chart shared today is AMD's new Radeon RX 6950 XT ($1,110) flagship, which is depicted as offering 80% more FPS per dollar than the GeForce RTX 3090 ($1,700), based on Newegg's lowest prices as of May 10, 2022. AMD also claims that its most powerful RDNA 2 option for desktop gamers offers 22% better FPS per watt than the non-Ti version of green team's Ampere flagship. The comparisons come just a week after the launch of the Radeon RX 6950 XT, Radeon RX 6750 XT, and Radeon RX 6650 XT, which arrived on shelves last Tuesday, May 10.
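The chart does not spell out how its FPS-per-dollar figure is computed. A common definition is average frame rate divided by card price, and the minimal Python sketch below assumes that definition plus the two Newegg prices quoted above; the helper names and the 100 FPS placeholder are hypothetical. Under those assumptions, price alone gives the RX 6950 XT roughly a 53% FPS-per-dollar lead at equal frame rates, so an 80% claim would imply it averaged about 17.5% more FPS than the RTX 3090 in AMD's own testing.

```python
def fps_per_dollar(avg_fps: float, price_usd: float) -> float:
    """Average frames per second per US dollar (assumed definition)."""
    return avg_fps / price_usd


def relative_advantage(metric_a: float, metric_b: float) -> float:
    """Fraction by which metric_a exceeds metric_b (0.80 means 80% more)."""
    return metric_a / metric_b - 1.0


def implied_fps_ratio(value_advantage: float, price_a: float, price_b: float) -> float:
    """Back out FPS_a / FPS_b from a claimed FPS-per-dollar advantage.

    (FPS_a / P_a) / (FPS_b / P_b) = 1 + value_advantage
    therefore FPS_a / FPS_b = (1 + value_advantage) * P_a / P_b
    """
    return (1.0 + value_advantage) * price_a / price_b


# Prices quoted in the article (Newegg lowest prices as of May 10, 2022).
RX_6950_XT_PRICE = 1110.0
RTX_3090_PRICE = 1700.0

# Hypothetical placeholder: give both cards the same frame rate to isolate
# the effect of price on the FPS-per-dollar comparison.
EQUAL_FPS = 100.0
price_only_lead = relative_advantage(
    fps_per_dollar(EQUAL_FPS, RX_6950_XT_PRICE),
    fps_per_dollar(EQUAL_FPS, RTX_3090_PRICE),
)

# Frame-rate ratio needed for AMD's claimed 80% FPS-per-dollar advantage,
# assuming the chart used exactly these prices and an unrounded 80%.
implied_ratio = implied_fps_ratio(0.80, RX_6950_XT_PRICE, RTX_3090_PRICE)

if __name__ == "__main__":
    print(f"Price-only FPS/$ lead: {price_only_lead:.1%}")
    print(f"Implied RX 6950 XT FPS advantage: {implied_ratio - 1.0:.1%}")
```

Because the 80% figure is likely rounded, the implied frame-rate gap should be read as approximate.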

> As a longtime gamer I’m grateful for the renewed competition in high-end graphics, we all win from it. As an @AMD employee I’m super proud of what our @Radeon team has accomplished.

> With a 2.1GHz Game Clock coupled with 16GB of high-speed GDDR6 memory, the AMD Radeon RX 6950 XT graphics card delivers incredible performance and breathtaking visuals for the most demanding AAA and esports titles at 4K resolution with max settings. The AMD Radeon RX 6750 XT graphics card offers a cutting-edge, high-performance gaming experience at 1440p resolution with max settings, while the AMD Radeon RX 6650 XT graphics card offers ultra-smooth, high-refresh rate 1080p gaming with max settings in the latest titles.

Source: Frank Azor