The grin on NVIDIA CEO Jensen Huang’s face is probably only going to get wider. TrendForce has published a new article on cloud companies and the AI arms race, and in it the firm projects that GPU demand for ChatGPT will rise significantly in the years ahead: from an estimated 20,000 GPUs used to process training data in 2020 to more than 30,000 as the GPT (Generative Pre-Trained Transformer) model is more widely commercialized. NVIDIA is expected to profit immensely from this, as the company behind the A100 Tensor Core GPU, the DGX A100, and other AI-relevant hardware.

From a TrendForce article:

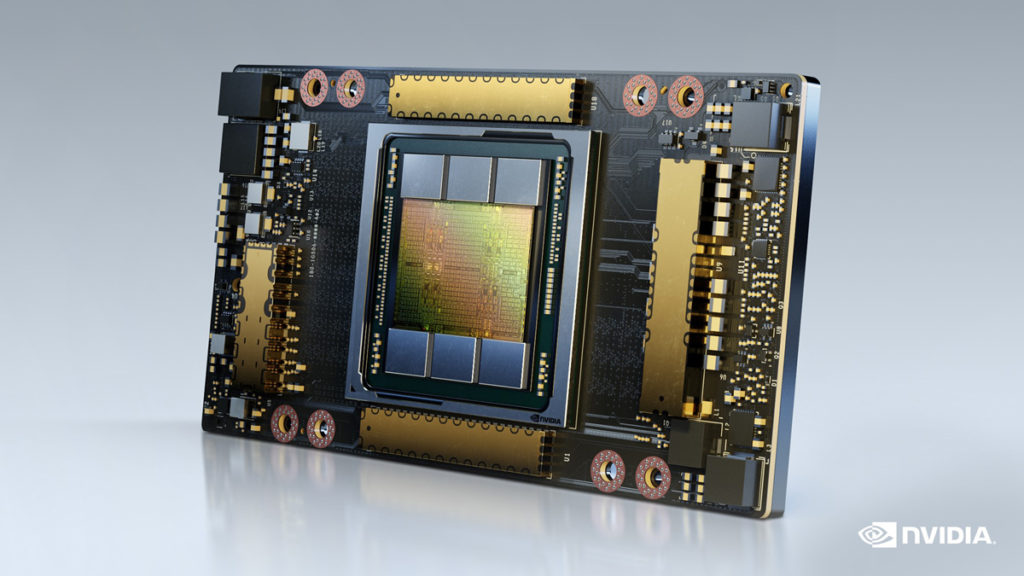

Generative AI requires a huge amount of data for training, so deploying a large number of high-performance GPUs helps shorten the training time. In the case of the Generative Pre-Trained Transformer (GPT) that underlies ChatGPT, the number of training parameters used in the development of this autoregressive language model rose from around 120 million in 2018 to almost 180 billion in 2020. According to TrendForce’s estimation, the number of GPUs that the GPT model needed to process training data in 2020 came to around 20,000. Going forward, the number of GPUs that will be needed for the commercialization of the GPT model (or ChatGPT) is projected to reach above 30,000. Note that these estimations use NVIDIA’s A100 as the basis for calculations. Hence, with generative AI becoming a trend, demand is expected to rise significantly for GPUs and thereby benefit the participants in the related supply chain.

NVIDIA, for instance, will probably gain the most from the development of generative AI. Its DGX A100, which is a universal system for AI-related workloads, delivers 5 petaFLOPS and has nearly become the top choice for big data analysis and AI acceleration. Besides NVIDIA, AMD has also successively launched the MI100, MI200, and MI300 series of server chips that are widely adopted for AI-powered applications.

Regarding Taiwan-based companies in the related supply chain, TSMC will continue to play a key role as the premier foundry for advanced computing chips. Nan Ya PCB, Kinsus, and Unimicron are the island’s ABF substrate suppliers that could take advantage of this emerging wave of demand. As for developers of AI chips from Taiwan, examples include GUC, Alchip, Faraday Technology, and eMemory.
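For a sense of scale, the figures quoted above can be worked through directly. This is a back-of-the-envelope sketch, not a TrendForce calculation: the parameter and GPU ratios use only the numbers in the excerpt, while the system count assumes (hypothetically) that every GPU sits in a DGX A100 with eight A100s per system, and the compute total simply multiplies that count by the quoted 5 petaFLOPS peak figure.

$$
\frac{180 \times 10^{9}\ \text{parameters}}{120 \times 10^{6}\ \text{parameters}} = 1{,}500\times
\qquad
\frac{30{,}000 - 20{,}000}{20{,}000} = 50\%\ \text{more GPUs}
$$

$$
\frac{30{,}000\ \text{GPUs}}{8\ \text{GPUs per DGX A100}} = 3{,}750\ \text{systems},
\qquad
3{,}750 \times 5\ \text{petaFLOPS} = 18{,}750\ \text{petaFLOPS} \approx 18.75\ \text{exaFLOPS (peak)}
$$

In other words, while model size grew roughly 1,500-fold between 2018 and 2020, the projected GPU count grows by about half, and a 30,000-GPU deployment would correspond to a few thousand DGX-class systems under the assumptions above.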