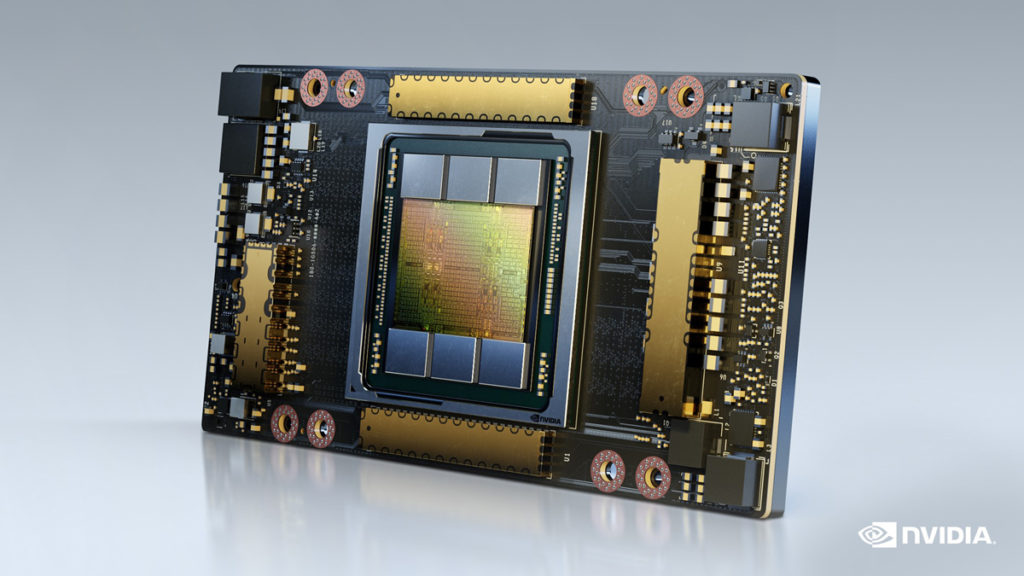

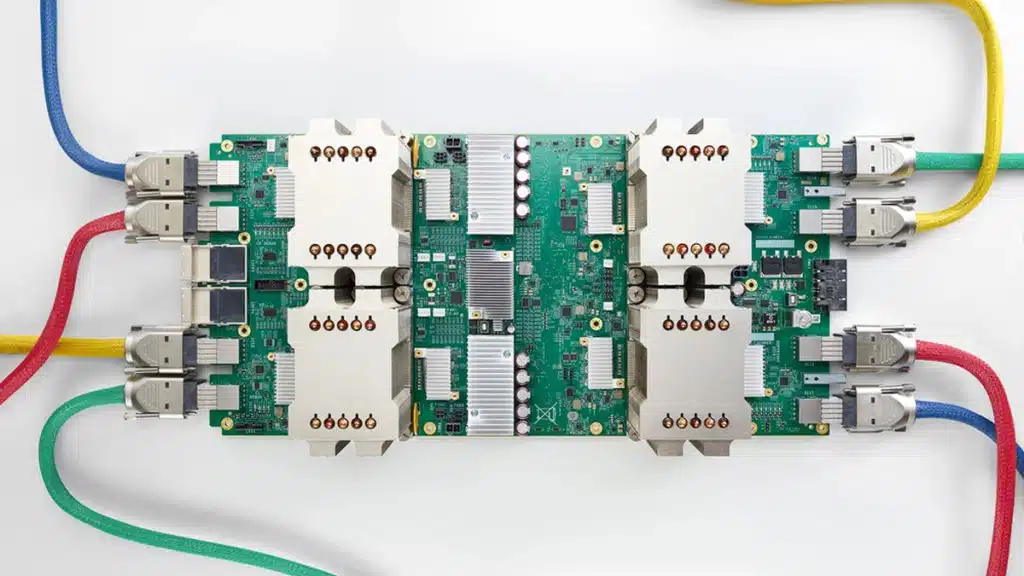

Someone get Jensen out of bed. Google has published a scientific paper detailing the latest version of its Tensor Processing Unit (TPU), the custom chip it strings together to build AI supercomputers, and apparently it’s better than the A100, one of NVIDIA’s leading chips for AI, data analytics, and HPC. According to the paper’s abstract, for similarly sized systems TPU v4 is 1.2x to 1.7x faster than the A100 while using 1.3x to 1.9x less power. In a separate article discussing what its engineers have achieved, Google called TPU v4 a platform of choice for the world’s leading AI researchers, with industry-leading efficiency.
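The “x less power” phrasing is easy to misread. As a quick sanity check, here is a minimal Python sketch that converts the quoted range into percentage savings. It assumes “N× less power” means the A100 draws N times the power of a TPU v4 on the same job; the function name is purely illustrative.

```python
def power_reduction_pct(times_less: float) -> float:
    """Percent of the A100's power saved, assuming the TPU v4 draws 1/times_less of it."""
    return (1.0 - 1.0 / times_less) * 100.0


if __name__ == "__main__":
    # Endpoints of the 1.3x-1.9x range quoted from the paper's abstract.
    for factor in (1.3, 1.9):
        print(f"{factor}x less power -> {power_reduction_pct(factor):.0f}% lower power draw")
```

Read that way, the claim works out to roughly 23% to 47% lower power draw.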

From the arXiv.org archive:

> In response to innovations in machine learning (ML) models, production workloads changed radically and rapidly. TPU v4 is the fifth Google domain specific architecture (DSA) and its third supercomputer for such ML models. Optical circuit switches (OCSes) dynamically reconfigure its interconnect topology to improve scale, availability, utilization, modularity, deployment, security, power, and performance; users can pick a twisted 3D torus topology if desired. Much cheaper, lower power, and faster than Infiniband, OCSes and underlying optical components are <5% of system cost and <3% of system power. Each TPU v4 includes SparseCores, dataflow processors that accelerate models that rely on embeddings by 5x-7x yet use only 5% of die area and power. Deployed since 2020, TPU v4 outperforms TPU v3 by 2.1x and improves performance/Watt by 2.7x. The TPU v4 supercomputer is 4x larger at 4096 chips and thus ~10x faster overall, which along with OCS flexibility helps large language models. For similar sized systems, it is ~4.3x-4.5x faster than the Graphcore IPU Bow and is 1.2x-1.7x faster and uses 1.3x-1.9x less power than the Nvidia A100. TPU v4s inside the energy-optimized warehouse scale computers of Google Cloud use ~3x less energy and produce ~20x less CO2e than contemporary DSAs in a typical on-premise data center.
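The abstract’s mention of a 3D torus may be easier to picture with a toy model. This Python sketch builds a regular, untwisted 3D torus in which each chip links to its ±1 neighbour along x, y and z, wrapping around at the edges. The 16 × 16 × 16 shape is an assumption chosen only because 16³ = 4096 matches the quoted chip count, and the helper names are hypothetical. It does not model Google’s OCS reconfiguration or the twisted variant, which changes where some wraparound links land.

```python
from itertools import product

# Assumed torus shape: 16 x 16 x 16 = 4096 chips (illustrative only,
# not Google's documented slice configuration or OCS wiring).
DIMS = (16, 16, 16)


def torus_neighbors(coord, dims=DIMS):
    """Return the six +/-1 neighbours of `coord`, wrapping at the edges."""
    neighbors = []
    for axis in range(len(dims)):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % dims[axis]  # wraparound link
            neighbors.append(tuple(n))
    return neighbors


def torus_hops(a, b, dims=DIMS):
    """Minimum hop count between chips a and b, using wraparound links."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))


def undirected_links(dims=DIMS):
    """Collect every chip-to-chip link once (assumes each dimension >= 3)."""
    links = set()
    for coord in product(*(range(d) for d in dims)):
        for n in torus_neighbors(coord, dims):
            links.add(frozenset((coord, n)))
    return links


if __name__ == "__main__":
    print(torus_neighbors((0, 0, 0)))
    print(torus_hops((0, 0, 0), (15, 15, 15)))  # 3: wraparound makes opposite corners close
    print(torus_hops((0, 0, 0), (8, 8, 8)))     # 24: the farthest chip in a 16^3 torus
    print(len(undirected_links()))              # 12288 = 4096 chips * 3 links each
```

Wraparound is the point of the torus: in this toy model the farthest chip is 24 hops away (8 per axis), versus 45 hops in an open 16 × 16 × 16 mesh without wraparound links.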