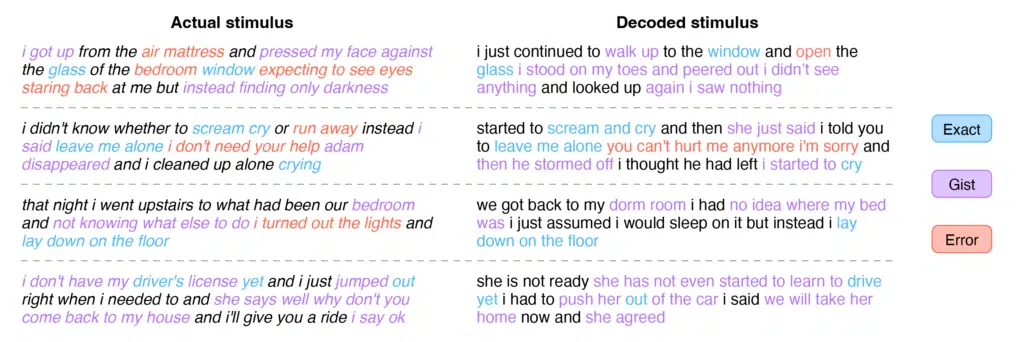

Researchers at the University of Texas at Austin have developed an artificial intelligence system that can translate people’s thoughts into text. The “semantic decoder,” as it’s called, was designed to help people who are mentally conscious but unable to speak (e.g., those debilitated by strokes) communicate intelligibly again. The technology is unlikely to reach the mainstream anytime soon in the form of mind-reading keyboards, but the development stands out because it is noninvasive and doesn’t require surgical implants. According to an image that compares actual and decoded stimuli, the AI can translate some thoughts almost exactly.

“For a noninvasive method, this is a real leap forward compared to what’s been done before, which is typically single words or short sentences,” said Alex Huth, an assistant professor of neuroscience and computer science at UT Austin who co-led the study. “We’re getting the model to decode continuous language for extended periods of time with complicated ideas.”

From a University of Texas at Austin post:

Unlike other language decoding systems in development, this system does not require subjects to have surgical implants, making the process noninvasive. Participants also do not need to use only words from a prescribed list. Brain activity is measured using an fMRI scanner after extensive training of the decoder, in which the individual listens to hours of podcasts in the scanner. Later, provided that the participant is open to having their thoughts decoded, their listening to a new story or imagining telling a story allows the machine to generate corresponding text from brain activity alone.

The result is not a word-for-word transcript. Instead, researchers designed it to capture the gist of what is being said or thought, albeit imperfectly. About half the time, when the decoder has been trained to monitor a participant’s brain activity, the machine produces text that closely (and sometimes precisely) matches the intended meanings of the original words.

For example, in experiments, a participant listening to a speaker say, “I don’t have my driver’s license yet” had their thoughts translated as, “She has not even started to learn to drive yet.” Listening to the words, “I didn’t know whether to scream, cry or run away. Instead, I said, ‘Leave me alone!’” was decoded as, “Started to scream and cry, and then she just said, ‘I told you to leave me alone.’”