YouTube has shared a new blog post discussing its approach to responsible AI innovation, and within it is some good news for AI haters.

Per a section on disclosure requirements, YouTube has revealed that creators will soon be required to fess up about whether their content is "synthetic," or, more specifically, created using AI.

This is because such content can mislead viewers, according to the Google-owned platform:

To address this concern, over the coming months, we’ll introduce updates that inform viewers when the content they’re seeing is synthetic. Specifically, we’ll require creators to disclose when they’ve created altered or synthetic content that is realistic, including using AI tools. When creators upload content, we will have new options for them to select to indicate that it contains realistic altered or synthetic material. For example, this could be an AI-generated video that realistically depicts an event that never happened, or content showing someone saying or doing something they didn’t actually do.

Additionally, AI-generated content that does make it onto YouTube will likely be accompanied by a warning label going forward, telling viewers how the video was made:

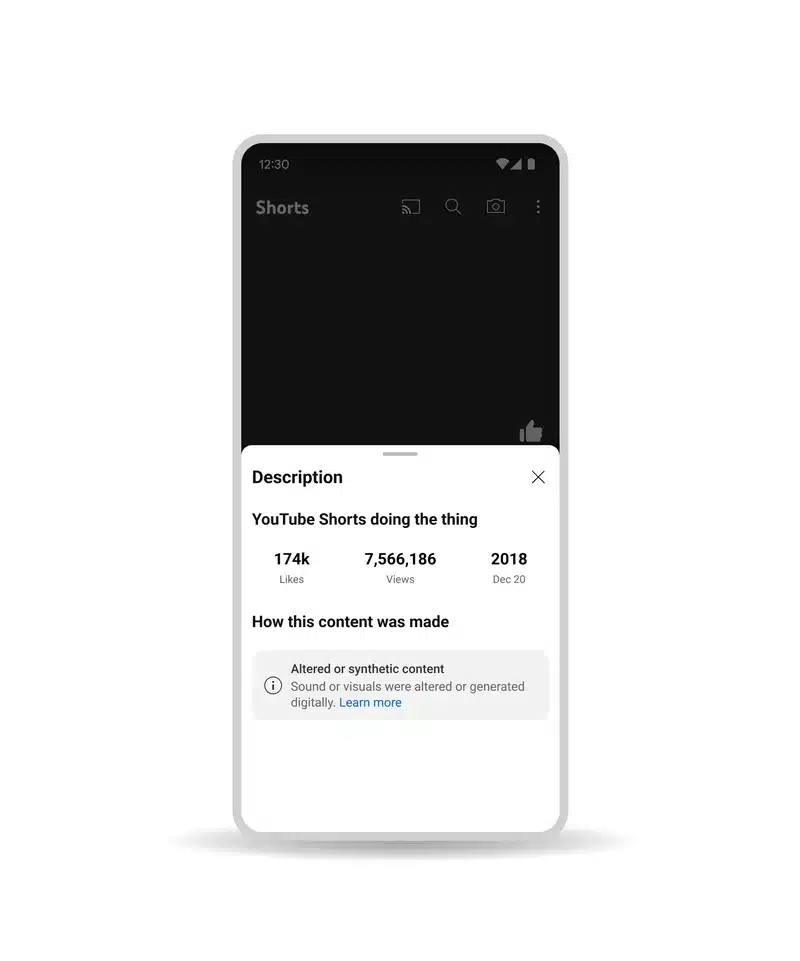

We’ll inform viewers that content may be altered or synthetic in two ways. A new label will be added to the description panel indicating that some of the content was altered or synthetic. And for certain types of content about sensitive topics, we’ll apply a more prominent label to the video player.

YouTube goes on to explain that such content will be removed entirely if it violates its Community Guidelines, with "a synthetically created video that shows realistic violence" being just one example.

YouTube still appears to love AI, though: the end of the post discusses how the company is using AI to help it moderate content:

…generative AI helps us rapidly expand the set of information our AI classifiers are trained on, meaning we’re able to identify and catch this content much more quickly. Improved speed and accuracy of our systems also allows us to reduce the amount of harmful content human reviewers are exposed to.

“We’re tremendously excited about the potential of this technology,” YouTube said.