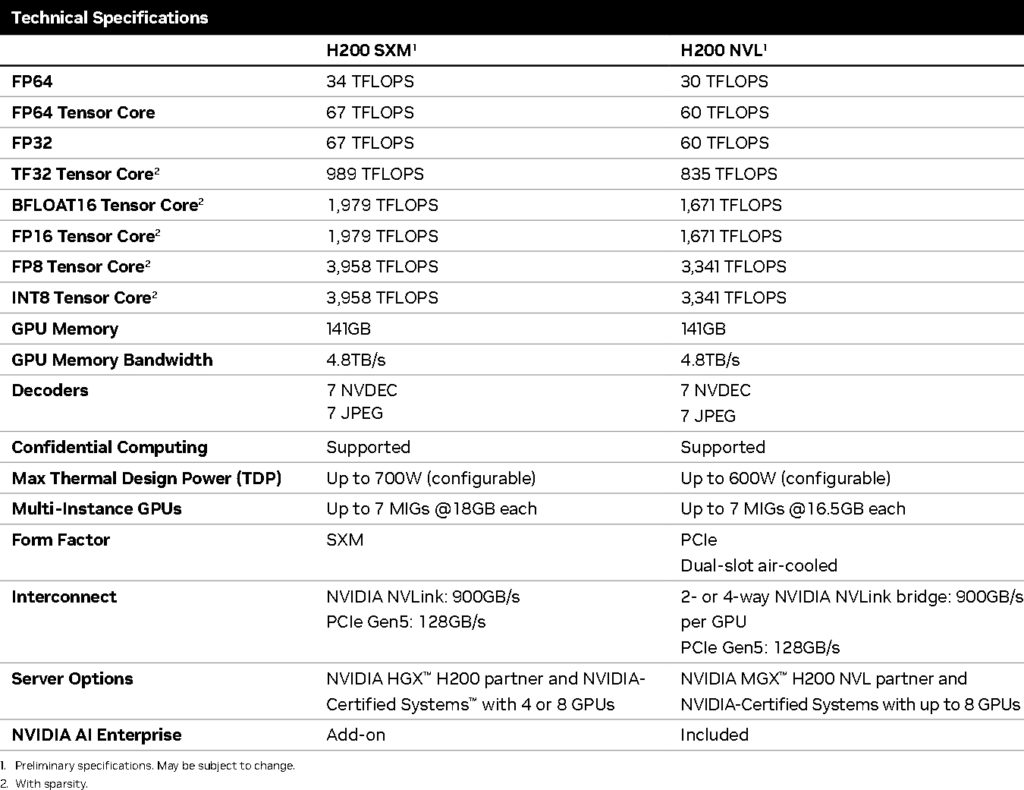

NVIDIA has announced that the H200 NVL is now available. The H200 NVL is a new addition to the Hopper family in a PCIe form factor and is advertised as delivering 1.5x the memory and 1.2x the bandwidth of the NVIDIA H100 NVL. It targets organizations whose data centers need lower-power, air-cooled enterprise rack designs with flexible configurations to accelerate every AI and HPC workload. See below for the tech specs of NVIDIA's latest Hopper GPU, which matches the SXM version's 141 GB of HBM3e memory and carries a TDP rating of up to 600 watts.
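The advertised 1.5x memory figure can be sanity-checked against the 141 GB capacity with simple arithmetic. The following is a minimal sketch that assumes the ratio is defined as H200 NVL capacity divided by H100 NVL capacity. The helper name `implied_baseline` is hypothetical and exists only for illustration.

```python
# Values taken from the article: H200 NVL memory capacity and the advertised ratio.
H200_NVL_MEMORY_GB = 141
ADVERTISED_MEMORY_RATIO = 1.5  # assumption: ratio = H200 NVL / H100 NVL


def implied_baseline(new_value: float, ratio: float) -> float:
    """Return the older part's value implied by 'new_value = ratio * old_value'."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return new_value / ratio


h100_nvl_memory_gb = implied_baseline(H200_NVL_MEMORY_GB, ADVERTISED_MEMORY_RATIO)
print(f"Implied H100 NVL memory: {h100_nvl_memory_gb:.0f} GB")
```

The result, 94 GB, is consistent with the per-GPU HBM3 capacity NVIDIA lists for the H100 NVL.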

Enterprises can use H200 NVL to accelerate AI and HPC applications while also improving energy efficiency through reduced power consumption. With 1.5x the memory and 1.2x the bandwidth of the NVIDIA H100 NVL, companies can use H200 NVL to fine-tune LLMs within a few hours and to deliver up to 1.7x faster inference performance. For HPC workloads, NVIDIA cites performance gains of up to 1.3x over H100 NVL and up to 2.5x over the NVIDIA Ampere architecture generation.