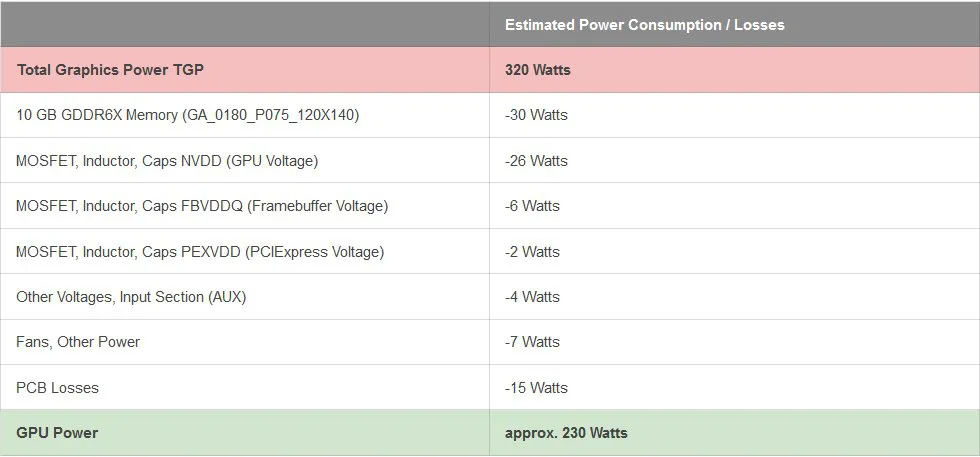

We previously shared a leak from Patrick Schur that suggested AMD’s flagship Radeon RX 6000 Series graphics card would feature a TGP of 255 W. Because NVIDIA and AMD label their total power specs differently (AMD uses Total Board Power [TBP], while NVIDIA uses Total Graphics Power [TGP]), that figure is hard to compare directly. Igor Wallossek has now published consumption figures that give us a better idea of how much power red team’s RDNA 2 cards really use.

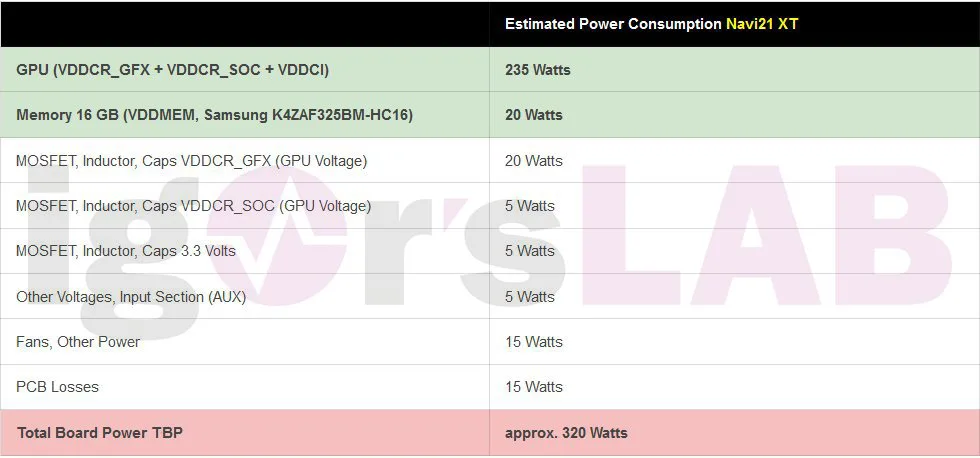

After figuring in the components of the card aside from the GPU (e.g., memory, fans), Wallossek estimates that AMD’s Navi 21 XT model, which many have dubbed the Radeon RX 6900 XT, will feature a TBP of 320 watts.

That happens to be right in line with NVIDIA’s GeForce RTX 3080, which carries a 320 W TGP. The implication is that AMD’s Radeon RX 6000 Series may not be any more efficient than green team’s offerings.

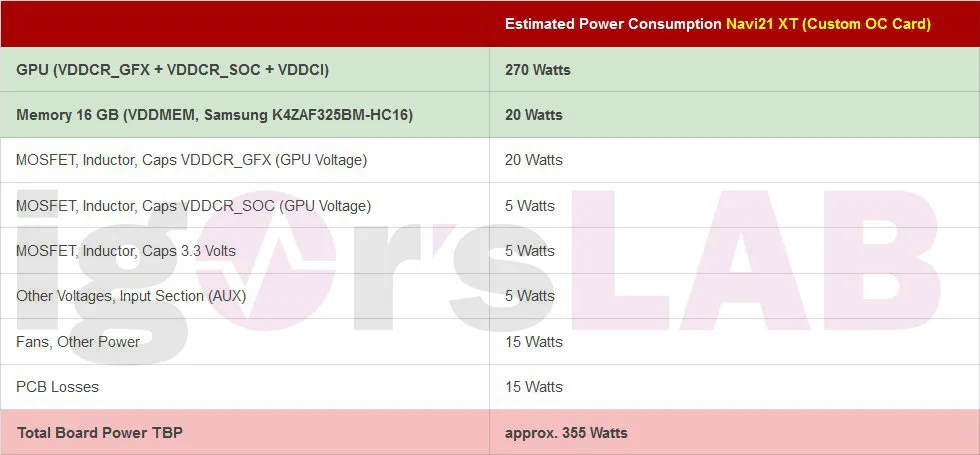

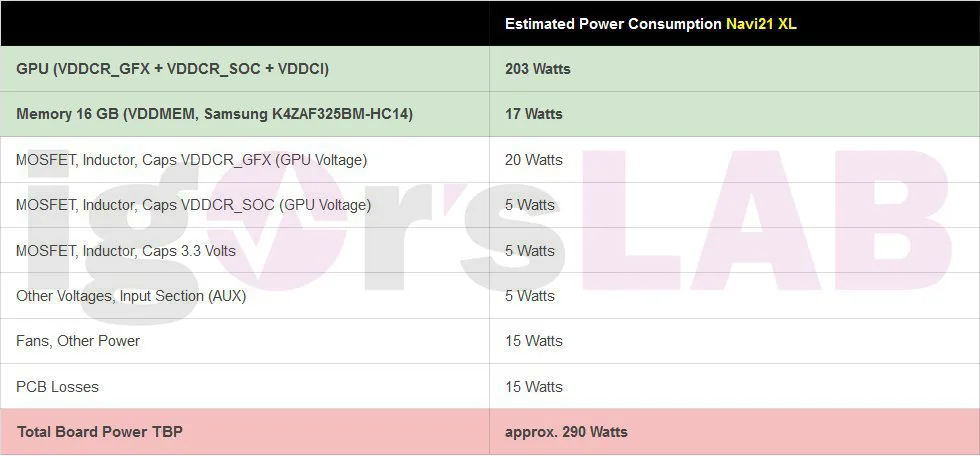

Wallossek goes on to give estimates for custom (overclocked) Navi 21 XT models and Navi 21 XL, the latter of which is believed to be the Radeon RX 6900. These will supposedly feature TBPs of 355 watts and 290 watts, respectively.

We also learn that AMD’s Radeon RX 6000 Series flagship will utilize 16 gigabytes of Samsung’s GDDR6 memory. According to Wallossek, custom RDNA 2 GPUs will be released as early as mid-November.