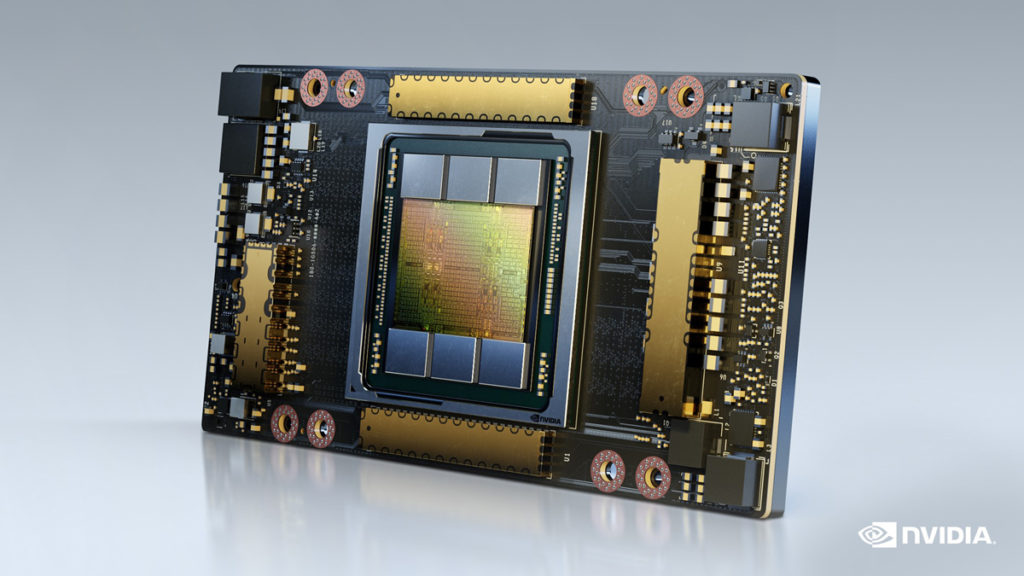

NVIDIA launched its Ampere-based DGX A100 AI System nearly six months ago, and it didn’t take long for it to begin breaking records as it found its way into supercomputers around the world. As we near the end of 2020, NVIDIA has upgraded the A100 GPU with HBM2e memory, pushing its performance further. On top of the faster memory, capacity has doubled from 40 GB to 80 GB. Bryan Catanzaro, vice president of applied deep learning research at NVIDIA, had the following to say about the upgrade:

“Achieving state-of-the-art results in HPC and AI research requires building the biggest models, but these demand more memory capacity and bandwidth than ever before.

“The A100 80 GB GPU provides double the memory of its predecessor, which was introduced just six months ago, and breaks the 2 TB per second barrier, enabling researchers to tackle the world’s most important scientific and big data challenges.”

With over 2 TB/s of memory bandwidth, the A100 GPU is now the world’s fastest data center GPU, according to NVIDIA. The company is already incorporating it into its DGX A100 AI and new DGX Station A100 “data center in a box” systems. With NVIDIA’s Multi-Instance GPU (MIG) technology, the A100 can also be partitioned into isolated instances so that it can run several smaller workloads at once. It can be configured to run up to seven MIG instances with 10 GB of memory each.
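The partitioning figures above reduce to simple arithmetic, sketched here in Python. The function name `mig_layout` is hypothetical, and the 5 GB per-instance figure for the 40 GB card is inferred from the statement that the new model doubles the memory per instance. This is only a back-of-the-envelope calculation and does not model how the driver actually allocates memory.

```python
def mig_layout(total_gb, instance_gb, max_instances=7):
    """Return (instance count, memory used, memory left over) in GB.

    max_instances=7 is the per-GPU limit quoted in the article.
    """
    instances = min(max_instances, total_gb // instance_gb)
    used = instances * instance_gb
    return instances, used, total_gb - used


# (total memory, memory per instance); the 40 GB row is an inferred figure.
for total, per_instance in [(40, 5), (80, 10)]:
    count, used, left = mig_layout(total, per_instance)
    print(f"{total} GB card: {count} x {per_instance} GB = {used} GB used, {left} GB left")
```

Under these assumptions, seven 10 GB instances account for 70 GB of the 80 GB card.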

A100 80 GB Technical Specifications

- Third-Generation Tensor Cores: Provide up to 20x the AI throughput of the previous Volta generation with the new TF32 format, as well as 2.5x FP64 for HPC, 20x INT8 for AI inference, and support for the BF16 data format.

- Larger, Faster HBM2e GPU Memory: Doubles the memory capacity and is the first in the industry to offer more than 2 TB per second of memory bandwidth.

- MIG technology: Doubles the memory per isolated instance, providing up to seven MIG instances with 10 GB each.

- Structural Sparsity: Delivers up to a 2x speedup when running inference on sparse models.

- Third-Generation NVLink and NVSwitch: Provide twice the GPU-to-GPU bandwidth of the previous generation interconnect technology, accelerating data transfers to the GPU for data-intensive workloads to 600 gigabytes per second.
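
To illustrate the Structural Sparsity item in the list above, here is a minimal Python sketch of the kind of pruning it relies on. The article does not name the pattern; the sketch assumes the 2:4 scheme NVIDIA documents for Ampere, in which two of every four consecutive weights are zero. The function `prune_2_of_4` is a hypothetical helper for illustration, not part of any NVIDIA library, and real workflows typically retrain the model after pruning.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.0]
print(prune_2_of_4(weights))
# [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.4, 0.0]
```

Because half of the values in every group are known to be zero, hardware that understands the pattern can skip them, which is where the quoted "up to 2x" figure comes from.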