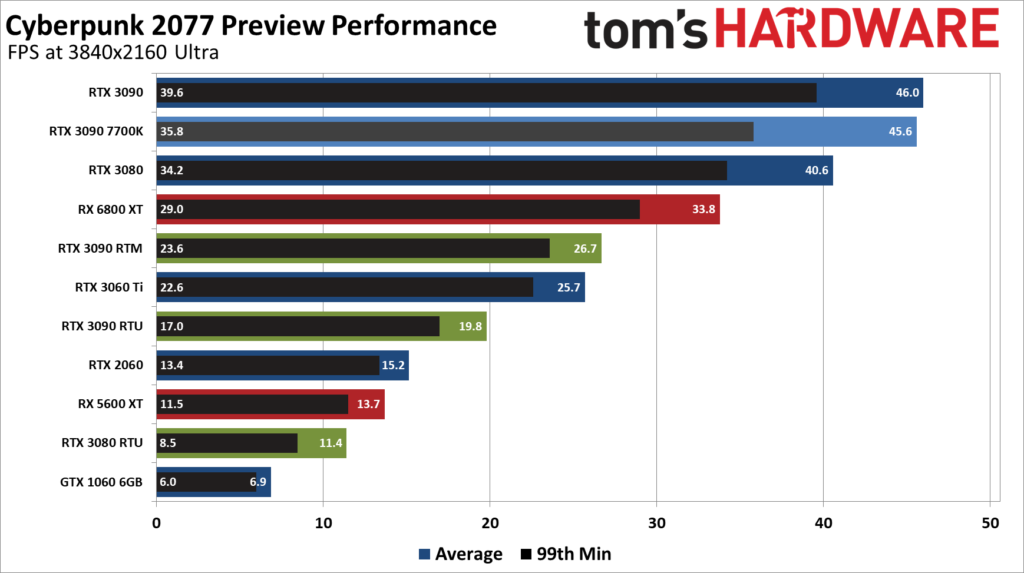

Is Cyberpunk 2077 the modern Crysis? Tom’s Hardware has shared some early benchmarks for CD PROJEKT RED’s latest masterpiece, and it appears to be extremely demanding at the 4K Ultra setting—so much so that NVIDIA’s flagship GeForce RTX 3090 can’t hit 60 FPS, even with ray tracing turned off. If there’s any title that truly requires DLSS, this appears to be it, as green team’s BFGPU is completely crushed at the native maximum preset when RTX is enabled.

You can check out the benchmarks below, which are mildly confusing given the many variables and how they’re color-coded. Here’s how Tom’s Hardware’s Jarred Walton explains them:

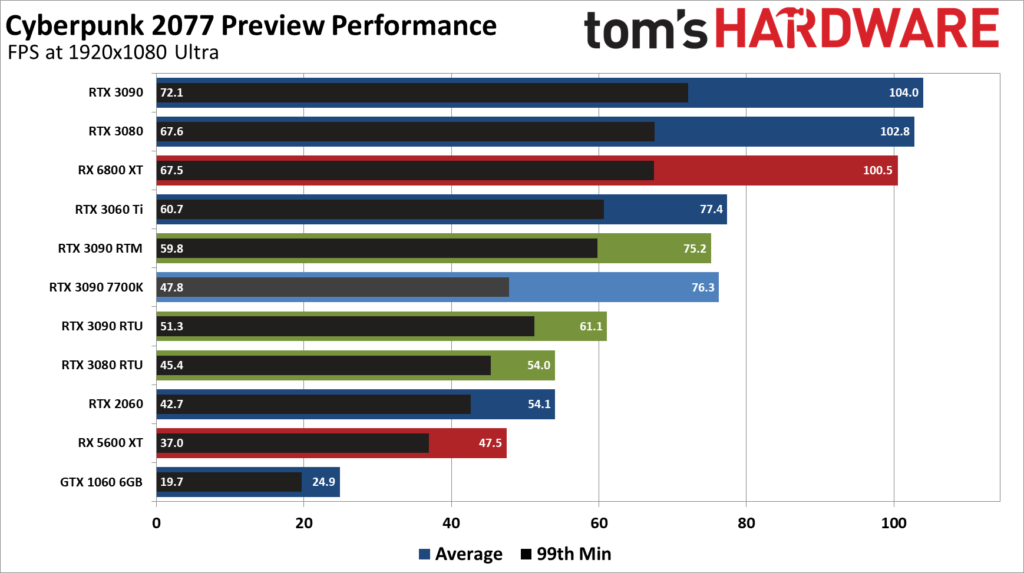

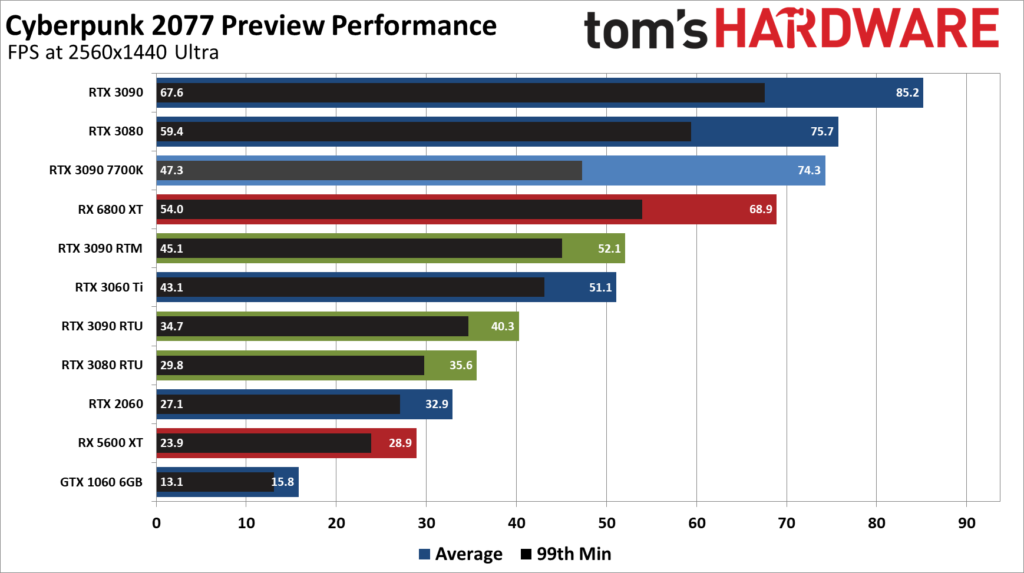

For the charts, we’ve color-coded Nvidia in blue, AMD in red, and Nvidia with ray tracing in green. We also have the simulated i7-7700K in light blue. The ray tracing results shown in the main chart are at native resolution—no DLSS. RTM indicates the use of the Ray Traced Medium preset (basically the same as the ultra preset, but with several ray tracing enhancements turned on), and RTU is for Ray Traced Ultra (nearly maxed out settings, with RT reflections enabled and higher-quality lighting).

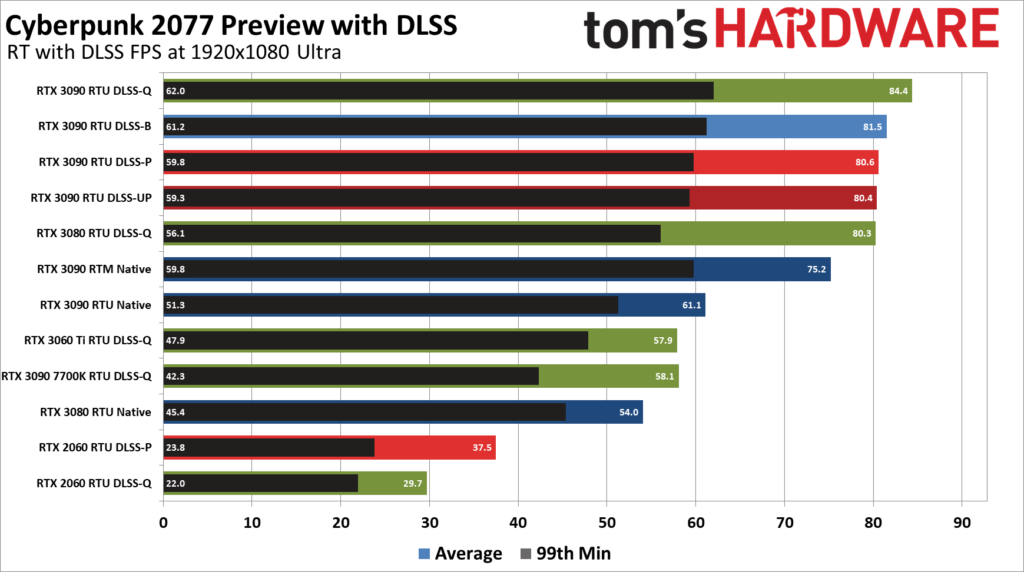

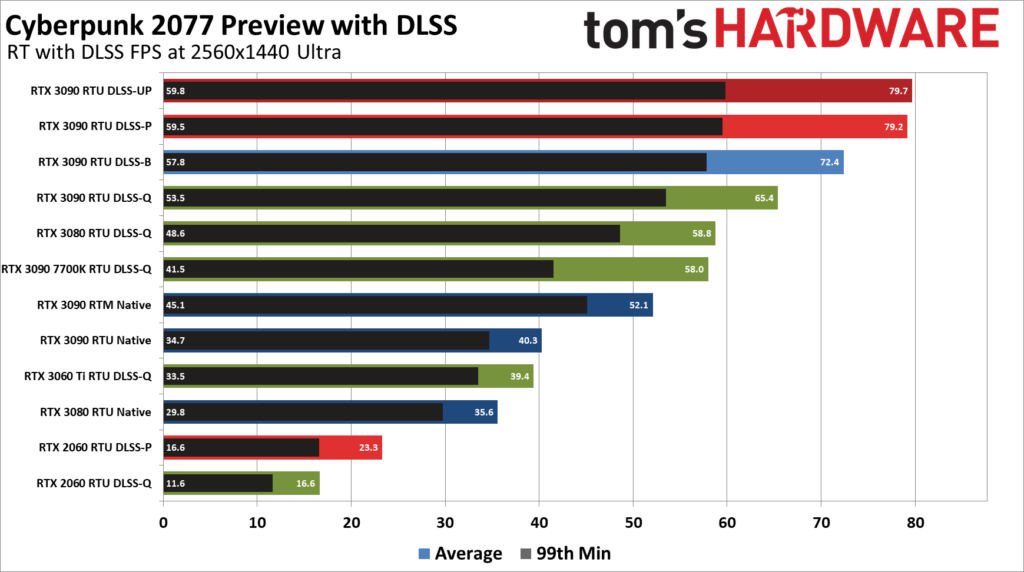

For the ultra settings, we have a second chart showing DLSS performance on the RTX GPUs. We didn’t test every GPU at every possible option (DLSS Auto, Quality, Balanced, Performance, and Ultra Performance), but we did run the full suite of options on the RTX 3090 just for fun. Native results are in dark blue, DLSS Quality results are in green, DLSS Balanced in light blue, DLSS Performance in lighter red, and DLSS Ultra Performance in dark red. (DLSS Auto uses Quality mode at 1080p, Balanced mode at 1440p, and Performance mode at 4K, so we didn’t repeat those results in the charts.) At 4K, we also included a few results running without ray tracing—indicated by ‘Rast’ in the label (for rasterization) and in light blue.

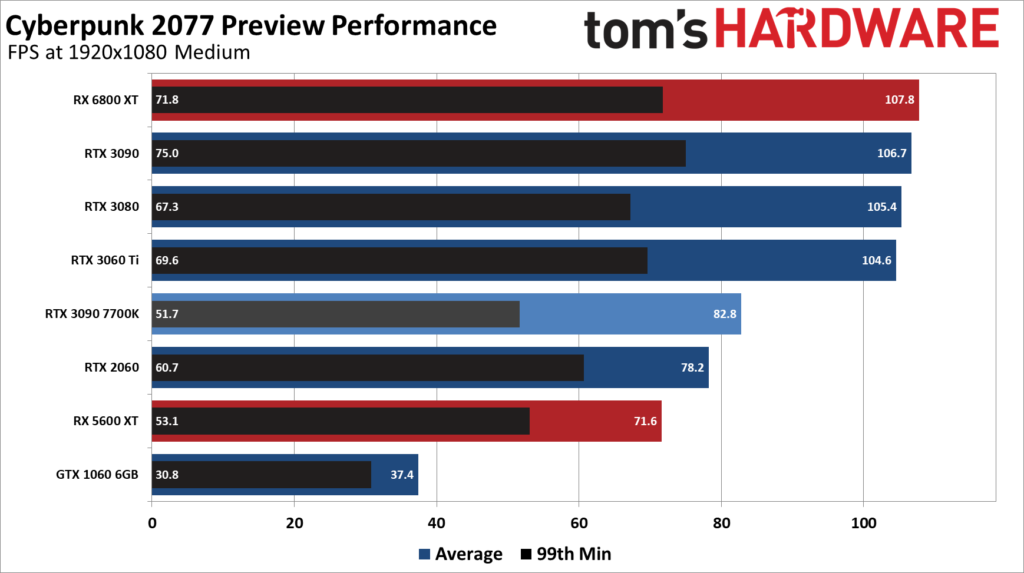

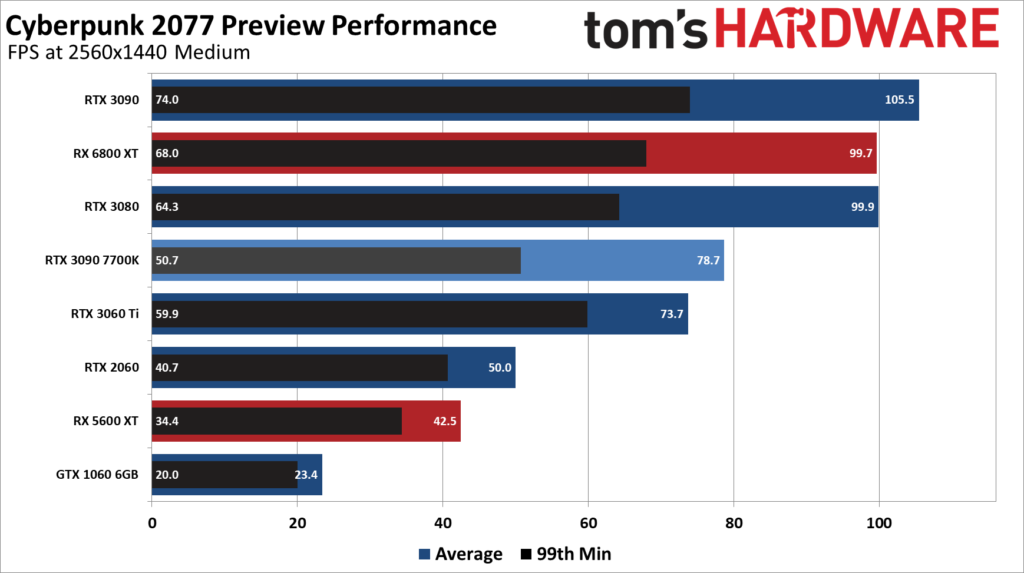

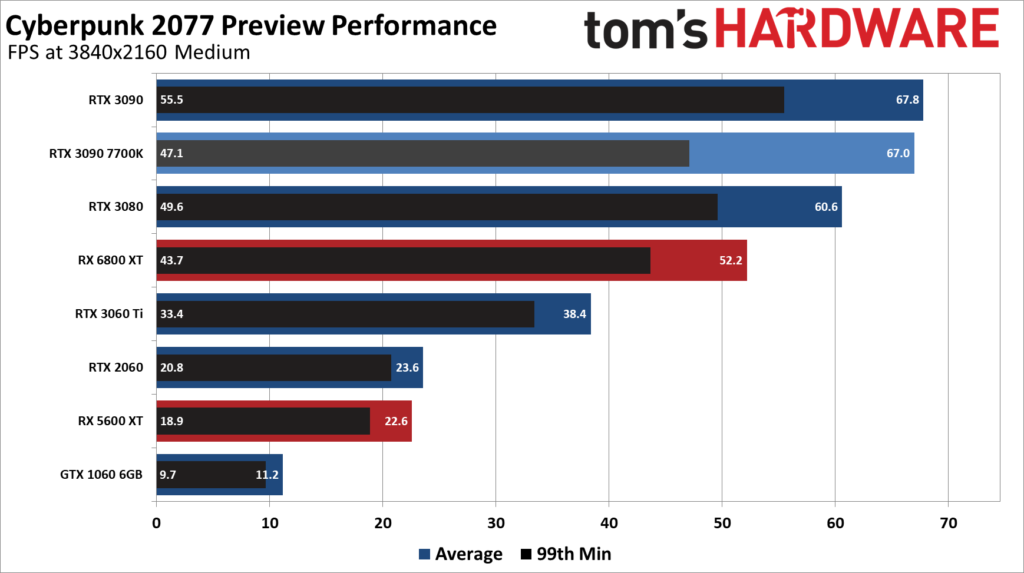

Cyberpunk 2077 Performance: Medium Preset

Cyberpunk 2077 Performance: Ultra Preset

It’s wild that the GeForce RTX 3090 can barely hit 73 FPS in the 4K Ultra + RTX preset even with DLSS’s new Ultra Performance mode enabled, a mode that was primarily designed for 8K gaming. We’re hearing that CD PROJEKT RED will be releasing a massive 50+ GB day-0 patch that should improve performance somewhat, but it’s pretty clear that Cyberpunk 2077 fans who wish to experience the game at its highest fidelity will need some serious hardware.
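To put the DLSS modes in perspective, here is a rough Python sketch of how each mode maps an output resolution to an internal render resolution, along with the DLSS Auto behavior described in the Tom’s Hardware excerpt. The per-axis scale factors are commonly cited values and an assumption on our part, not figures from the benchmarks; the names `DLSS_SCALE`, `DLSS_AUTO`, `internal_resolution`, and `auto_mode` are purely illustrative.

```python
# Per-axis render scale for each DLSS mode.
# ASSUMPTION: commonly cited values, not taken from the benchmark article.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}

# DLSS Auto mapping as described by Tom's Hardware, keyed by output height.
DLSS_AUTO = {1080: "Quality", 1440: "Balanced", 2160: "Performance"}


def internal_resolution(width, height, mode):
    """Return the approximate (width, height) the GPU renders before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


def auto_mode(output_height):
    """Return the mode DLSS Auto picks for a given output height."""
    return DLSS_AUTO[output_height]


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        print(mode, internal_resolution(3840, 2160, mode))
    print("Auto at 4K:", auto_mode(2160))
```

Under these assumed factors, Ultra Performance at 4K works out to an internal render of roughly 1280x720, which helps explain why 73 FPS at that setting is such a striking result.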

Cyberpunk 2077 will be released for PC, Xbox One, PlayStation 4, and Google Stadia on December 10. NVIDIA’s Game Ready Driver, which will presumably land in a day or two, should also help with performance.