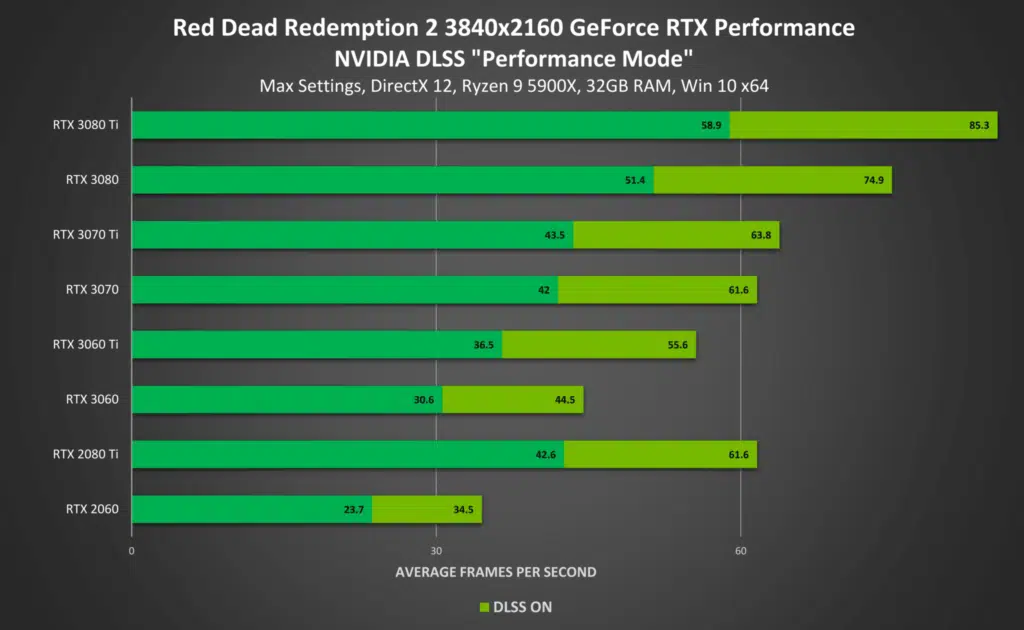

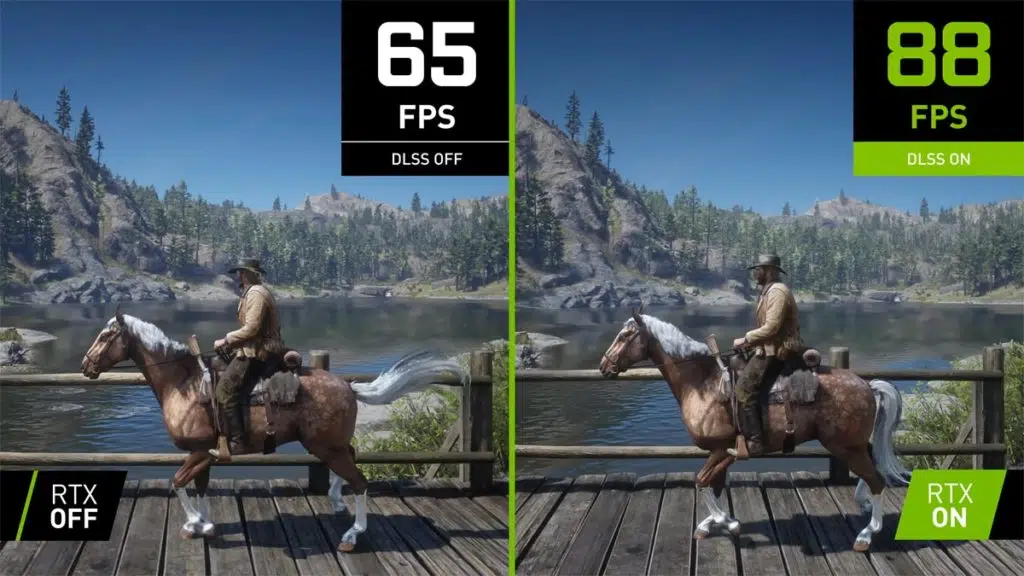

Rockstar Games has released its NVIDIA DLSS update for Red Dead Redemption 2 and its online component, Red Dead Online, enabling the green team’s Deep Learning Super Sampling technology in the popular action-adventure games and giving them a welcome performance boost. According to NVIDIA’s own testing, players with GeForce RTX graphics cards can see performance improve by up to 45 percent at 4K. DLSS can be enabled in either game from the Settings menu, under the Graphics section.

With the aid of NVIDIA DLSS, all GeForce RTX gamers can experience Red Dead Redemption 2’s incredible world with max settings at 1920×1080 at over 60 FPS. At 2560×1440, users of a GeForce RTX 3060 Ti or faster can ride out at over 60 FPS. And at 3840×2160, gamers with a GeForce RTX 3070 or a faster GPU can enjoy max-settings gameplay at 60 FPS or more, for the most detailed, immersive, and engrossing Red Dead Redemption 2 and Red Dead Online experience possible.

Source: NVIDIA