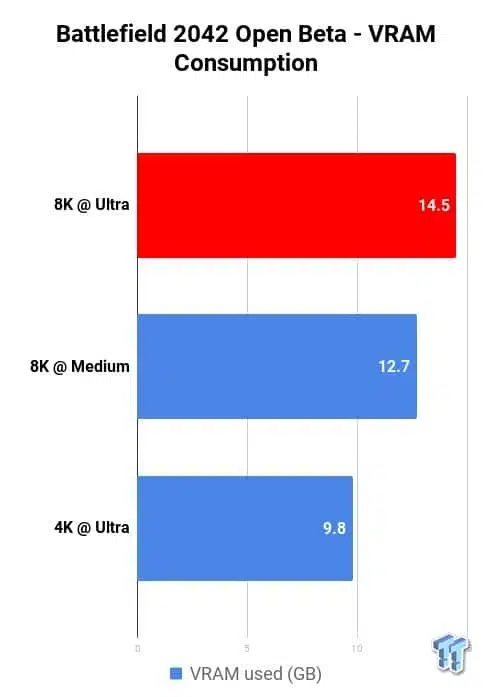

Early benchmarks of the Battlefield 2042 open beta show the game needing up to 16 GB of VRAM at 8K. The tests used a GeForce RTX 3090 and several Radeon cards. At 8K with the Medium preset, the game used up to 13 GB of VRAM, and the GeForce RTX 3090 averaged around 30 FPS. At Ultra, usage rose by another 2 GB to roughly 15 GB, pushing the AMD cards to the edge of their 16 GB limit. At that point, none of the cards exceeded 28 FPS.

The final game will support DLSS, which could substantially improve the framerate on the GeForce RTX 3090, possibly bringing it closer to 60 FPS at Medium. The GeForce RTX 3080 Ti was not tested, but similar results could be expected given how it compares to the GeForce RTX 3090. There has been no word on whether FSR will be added to the game; it would also improve performance at 8K.

Other games have also shown high VRAM usage at 8K. Even when a graphics card has enough VRAM, it remains a long way from what most players would consider an acceptable framerate. Although 8K gaming is not mainstream, these results point to a trend of new games needing more memory, and graphics card manufacturers are equipping their cards with more and more of it.

[…] you will truly need 16GB of VRAM at least to run 8K without DLSS enabled. The 24GB of ultra-fast GDDR6X memory on the GeForce RTX 3090 is fine, as too is the 16GB of GDDR6 memory on the AMD Radeon RX 6800, Radeon RX 6800 XT, and Radeon RX 6900 XT graphics cards.

Source: TweakTown