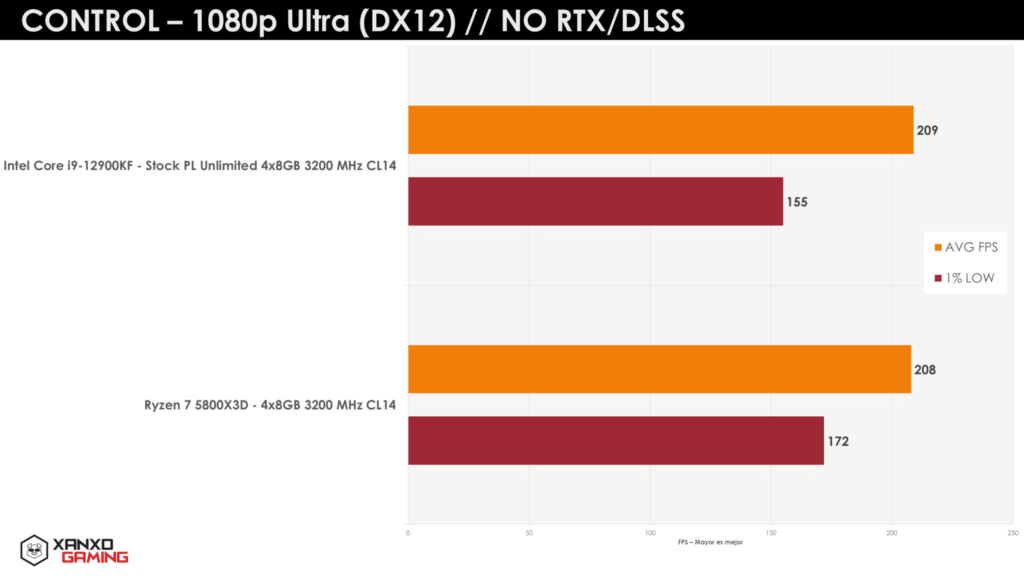

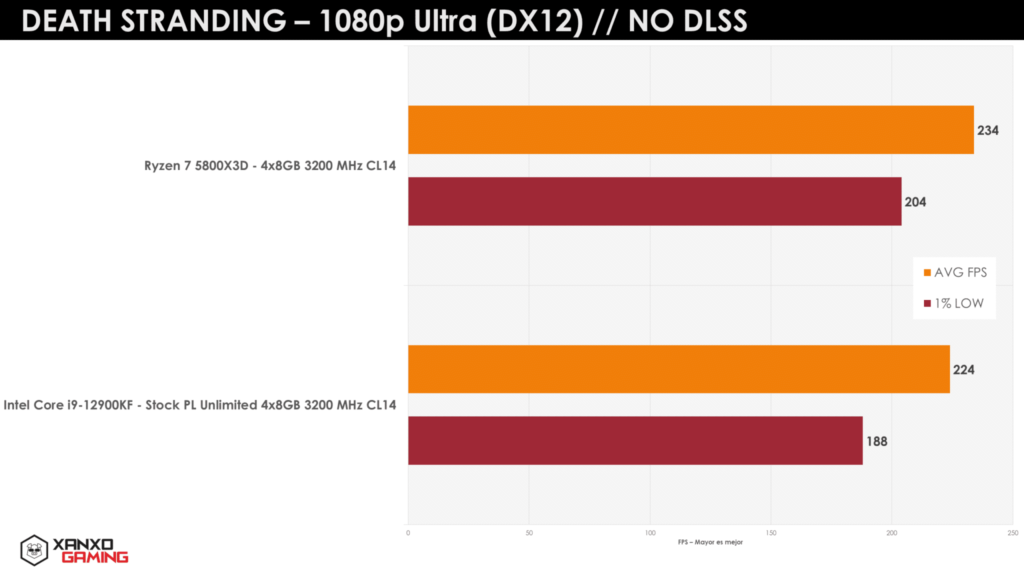

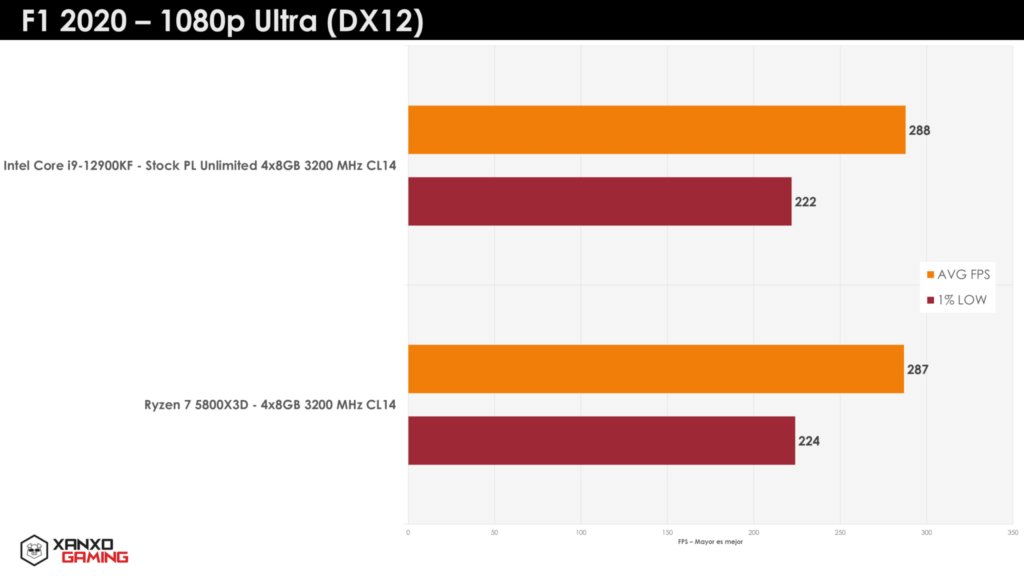

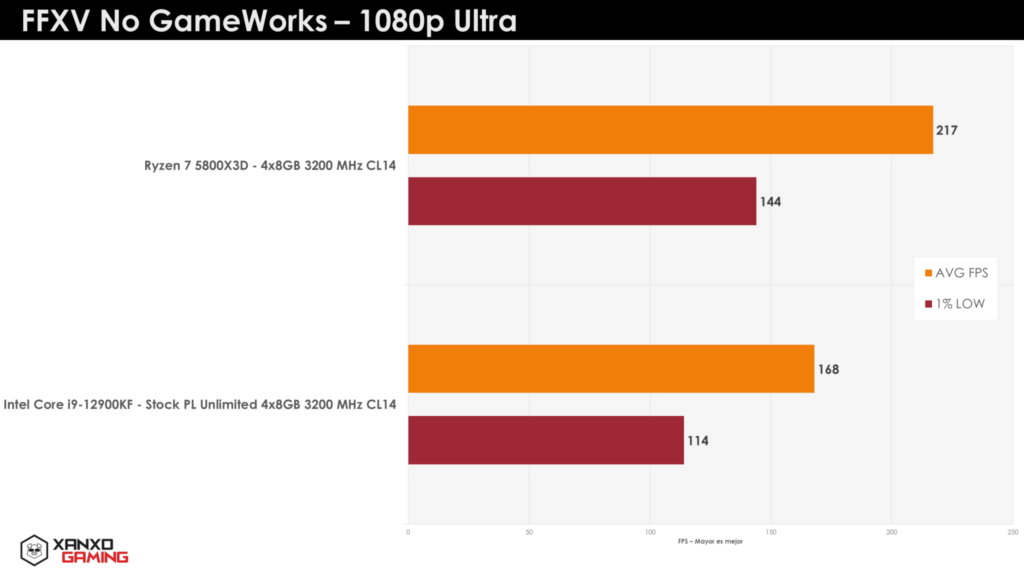

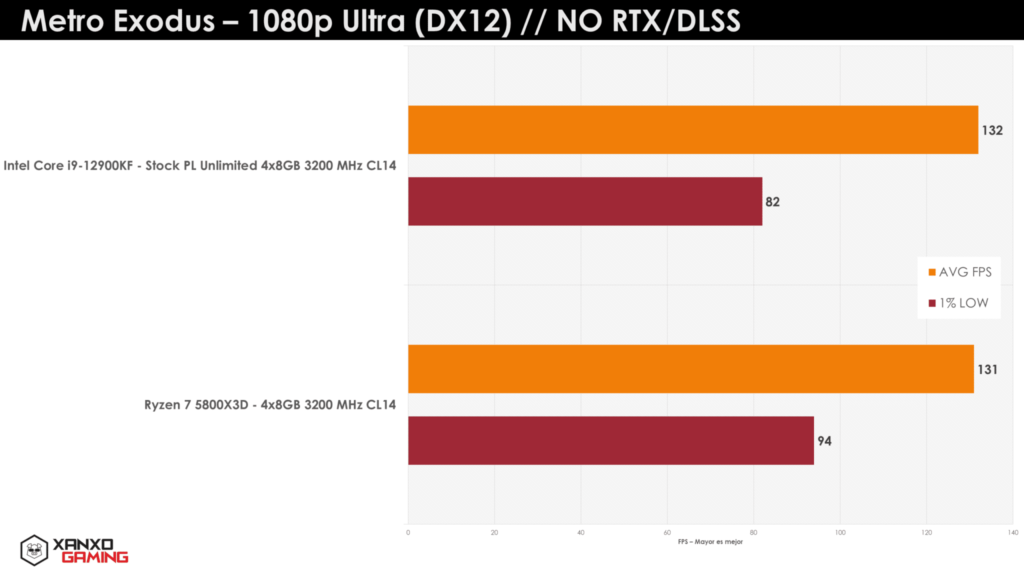

Peruvian hardware site XanxoGaming has returned with a new set of benchmarks that provide greater insight into how AMD’s first Ryzen CPU with 3D V-Cache technology might handle some of today’s most popular titles. Borderlands 3, Control, and Death Stranding are among the titles included in the tests, which were performed on systems equipped with a GeForce RTX 3080 Ti Founders Edition graphics card (full specs of both the AMD and Intel rigs can be found below). The benchmarks suggest that the Ryzen 7 5800X3D generally lines up closely with Intel’s standard Alder Lake-S flagship, the Core i9-12900K. AMD’s 8C/16T Ryzen 7 5800X3D with 3D V-Cache technology will be available globally beginning next Wednesday, April 20, at an MSRP of $449.

AMD Test System (For Gaming)

- CPU: AMD Ryzen 7 5800X3D

- Motherboard: X570 AORUS MASTER Rev 1.2 (BIOS F36c)

- RAM: G.Skill FlareX 4x8GB 3200 MHz CL14 (Samsung B-Die)

- Graphics Card: NVIDIA GeForce RTX 3080 Ti Founders Edition

- SSD: Samsung 980 PRO 1TB

- SSD #2: Silicon Power A55 2TB

- AIO: Arctic Liquid Freezer II 360

- PSU: EVGA SUPERNOVA 750W P2

- OS: Windows 10 Home 21H2

Intel Test System (For Gaming)

- CPU: Intel Core i9-12900KF (Power Limits Unlimited, no MCE)

- Motherboard: TUF GAMING Z690-PLUS WIFI D4 (BIOS 1304)

- RAM: G.Skill FlareX 4x8GB 3200 MHz CL14 (Samsung B-Die)

- Graphics Card: NVIDIA GeForce RTX 3080 Ti Founders Edition

- SSD: TeamGroup Delta MAX 250GB

- SSD #2: Silicon Power A55 2TB

- AIO: Lian Li Galahad 360

- PSU: EVGA SUPERNOVA 750W P2

- OS: Windows 10 Home 21H2 (Win Game Mode On, RSB On, HAGS OFF). We prefer to test with Windows 10 for now.

Summary – Gaming Benchmarks – 1080p (XanxoGaming)

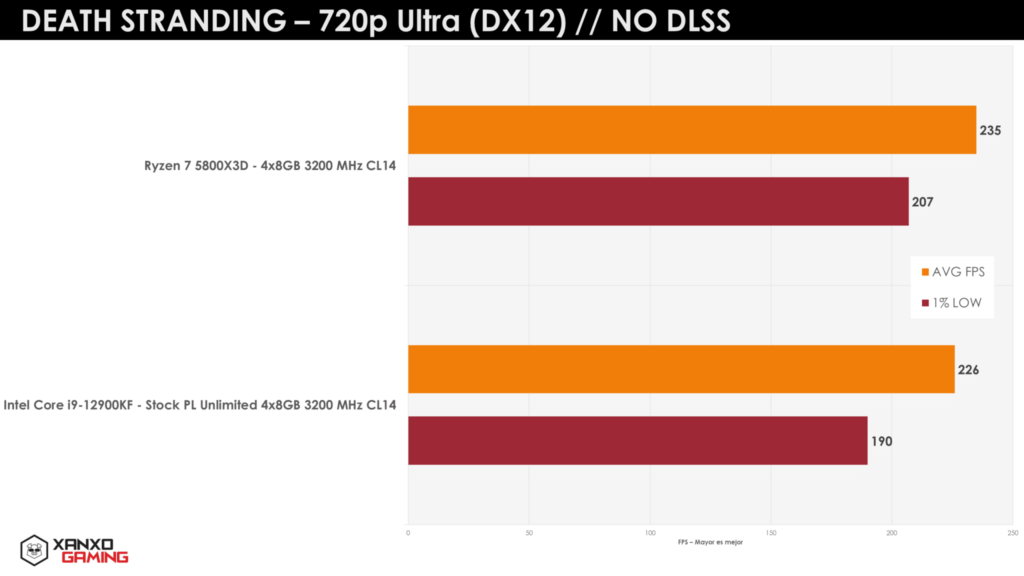

For the most part, 1080p results across our test suite are on par, with a few exceptions. In Death Stranding, even with the game’s 240 FPS cap, AVG FPS and 1% LOW results are a tad better on the AMD Ryzen CPU.
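The article reports AVG FPS and 1% LOW without defining how they are derived. As a rough sketch, assuming the common convention that "1% low" is the average frame rate over the slowest 1% of frames (some capture tools instead convert the 99th-percentile frame time to FPS), both figures can be computed from a frame-time log like this. The function name `fps_summary` and the sample data are hypothetical; XanxoGaming's capture tool and exact method are not stated.

```python
def fps_summary(frame_times_ms):
    """Return (average FPS, 1% low FPS) from a list of frame times in milliseconds.

    Assumption: "1% low" = average FPS over the slowest 1% of frames.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS = frames rendered / total time elapsed.
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    # Slowest 1% of frames (at least one frame) = the longest frame times.
    n_low = max(1, len(frame_times_ms) // 100)
    slowest = sorted(frame_times_ms, reverse=True)[:n_low]
    low_1pct_fps = 1000.0 * n_low / sum(slowest)
    return avg_fps, low_1pct_fps


# Hypothetical log: 99 frames at 5 ms and one 20 ms stutter.
avg, low = fps_summary([5.0] * 99 + [20.0])
print(round(avg, 2), low)  # 194.17 50.0
```

A single stutter barely moves the average but dominates the 1% low, which is why the two metrics are reported side by side.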

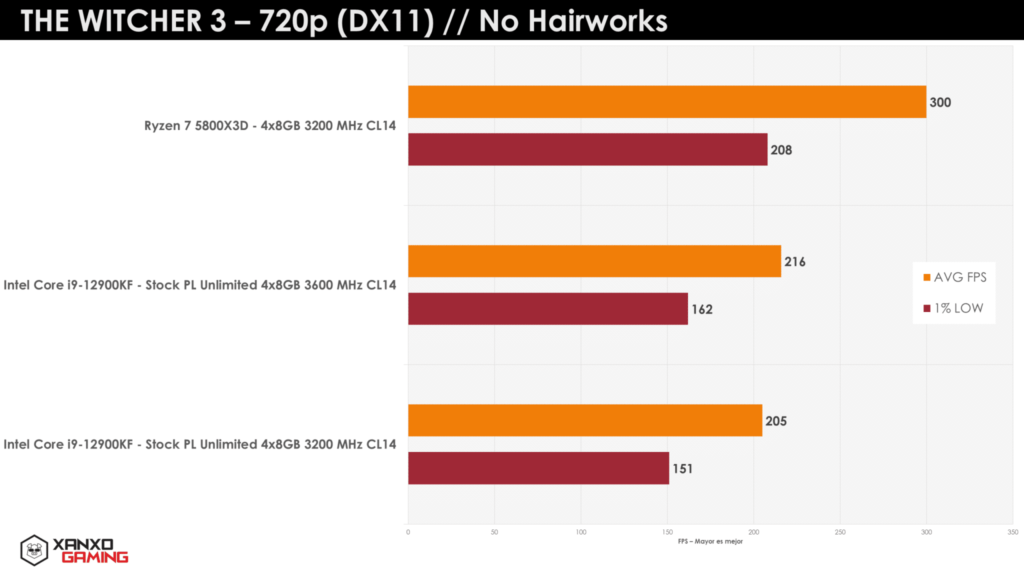

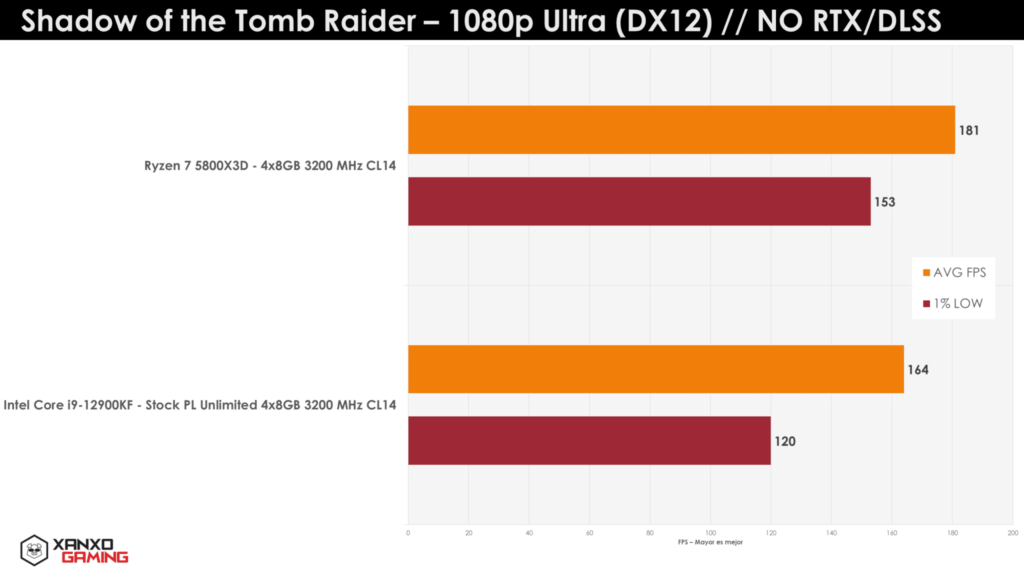

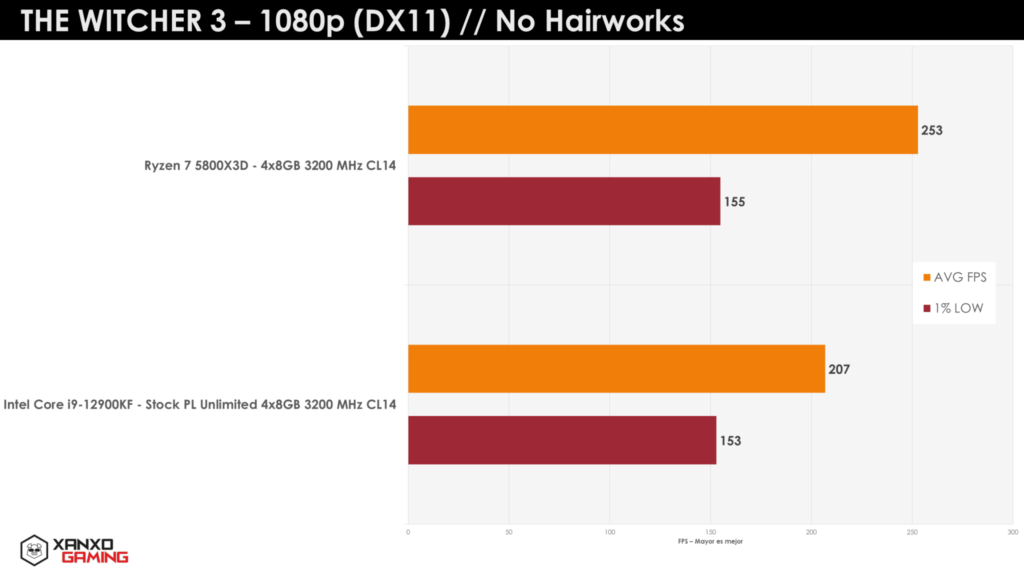

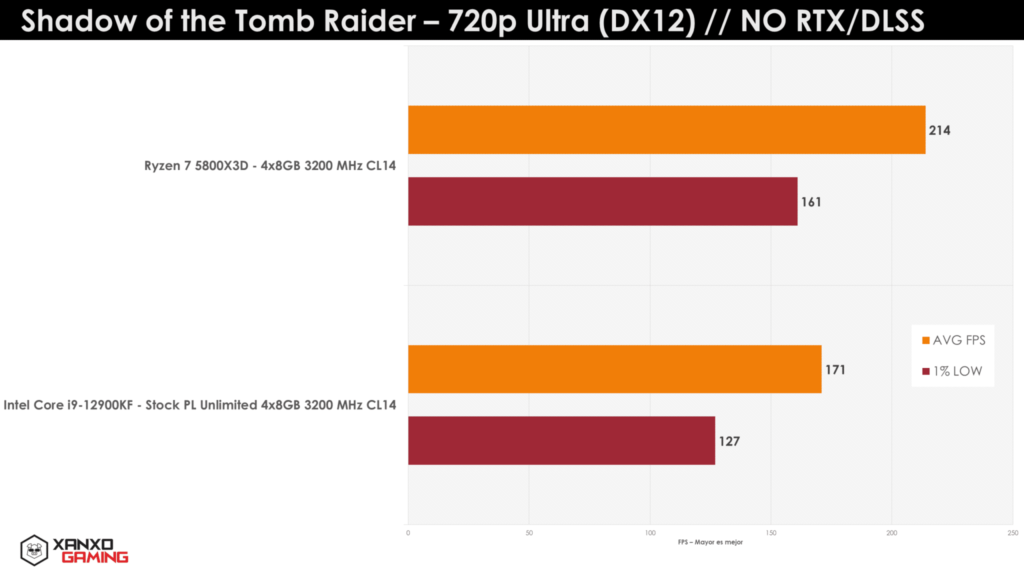

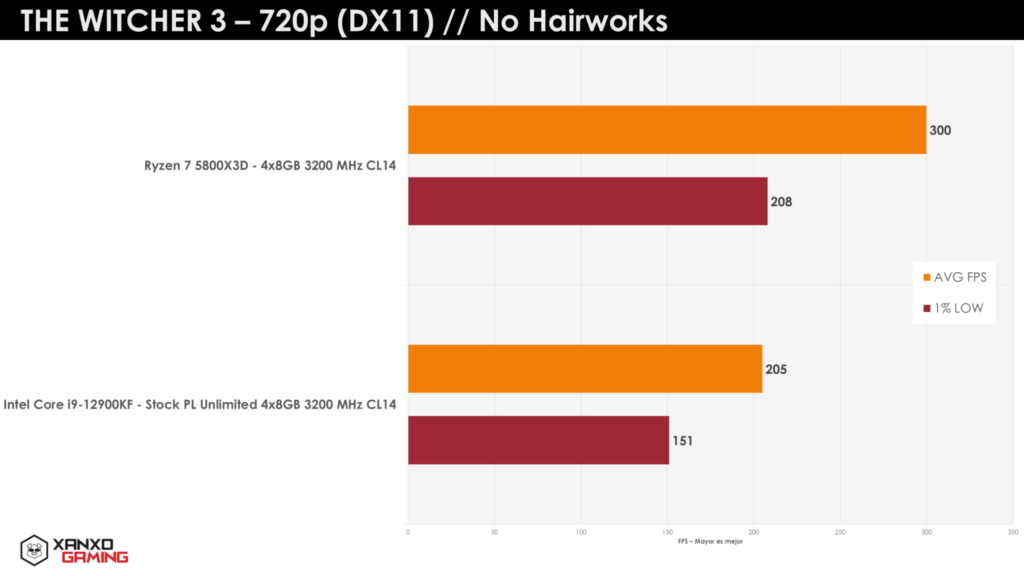

Across the full test suite, three results stand out, even at 1080p. The question here is which CPU is the “fastest”, and, as AMD’s marketing showed, there is a real difference in FFXV, Shadow of the Tomb Raider, and The Witcher 3.

Of those three, The Witcher 3 (Novigrad) stands out with a massive 22% lead. Based on some past Alder Lake-S results I gathered using DDR5 (not included here, since I want to retest ADL-S with DDR5-6200 C40), there seems to be a bandwidth cap with DDR4-3200 CL14 kits, or at least performance in this title scales with faster memory on ADL-S.

We also ran a quick test in The Witcher 3 at 720p with DDR4-3600 CL14, which you will see later.

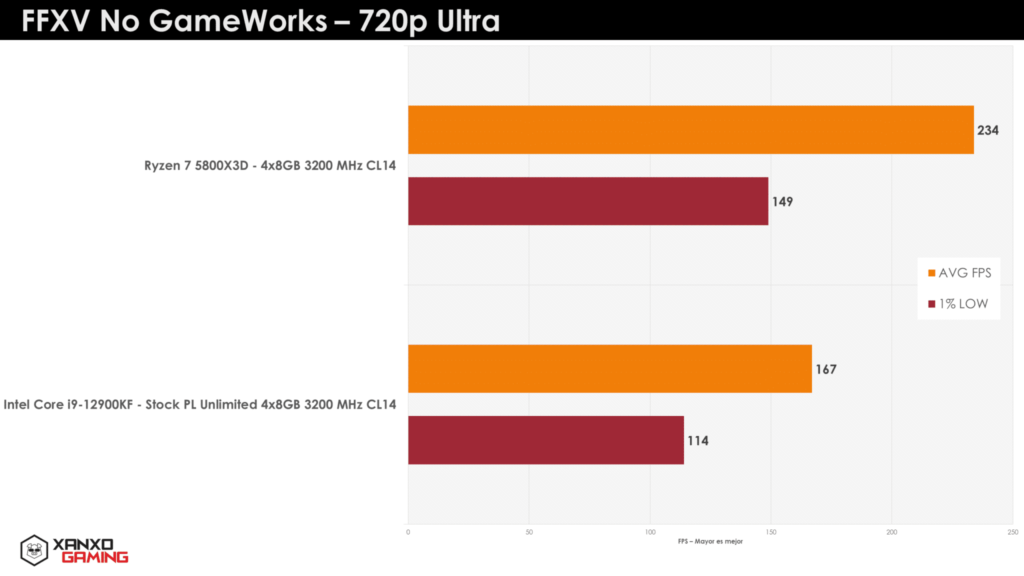

Another massive lead comes in FFXV, where the Ryzen 7 5800X3D is 29.16% ahead.

To a lesser degree (but no small feat), Team Red has a 10.36% advantage in Shadow of the Tomb Raider.
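The percentage leads quoted above depend on which result is used as the baseline, and the article does not say. A minimal sketch, assuming the lead is measured relative to the slower CPU's average FPS (a common convention); the function name and FPS values are hypothetical, not XanxoGaming's data:

```python
def percent_advantage(fps_faster: float, fps_slower: float) -> float:
    """Lead of the faster result, expressed as a percentage of the slower result."""
    if fps_slower <= 0:
        raise ValueError("fps_slower must be positive")
    return (fps_faster - fps_slower) / fps_slower * 100.0


# Hypothetical numbers chosen only to illustrate the arithmetic.
print(round(percent_advantage(129.16, 100.0), 2))  # 29.16
```

With the faster result as the baseline instead, the same 29.16 FPS gap would read as roughly 22.6%, so the convention matters when comparing figures across reviews.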

At 1080p, it seems most games end in a tie between the Ryzen 7 5800X3D and the 12900K with DDR4, while some games give a substantial win to the new 3D V-CACHE technology. 12900K DDR5 results remain to be seen, but based on our prior results we do expect better performance than ADL-S with DDR4.

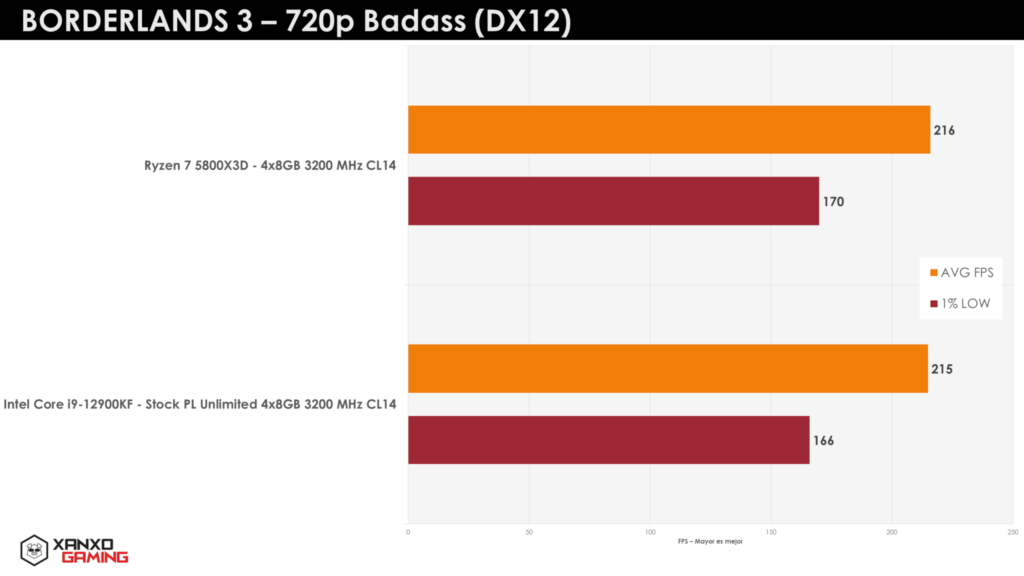

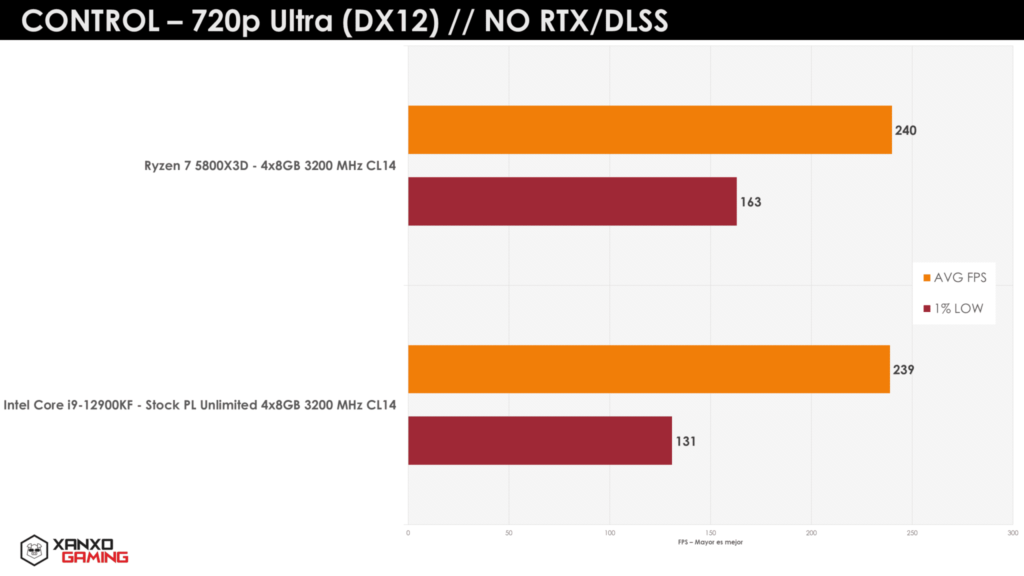

Gaming Benchmarks – 720p – AMD Ryzen 7 5800X3D (XanxoGaming)

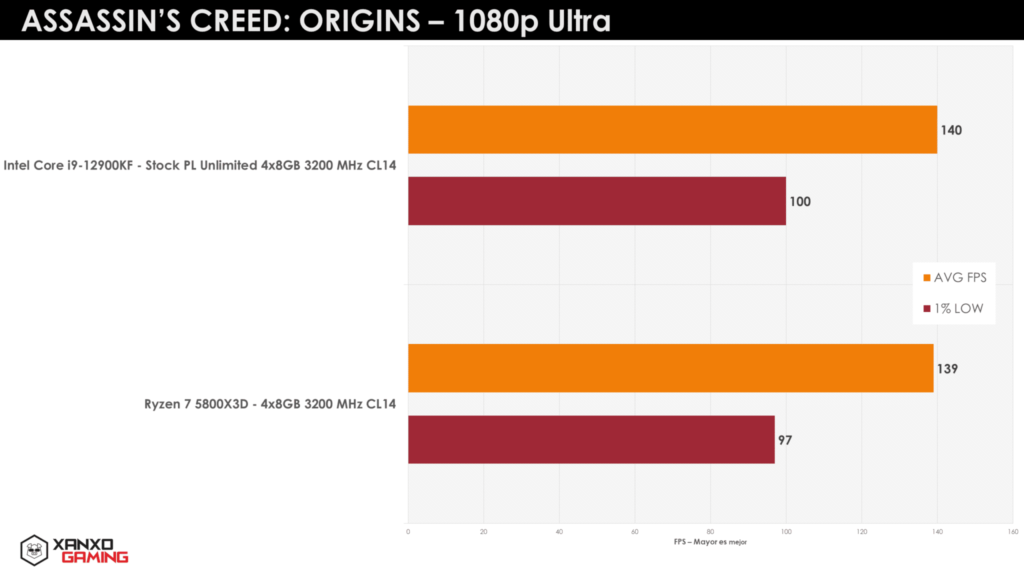

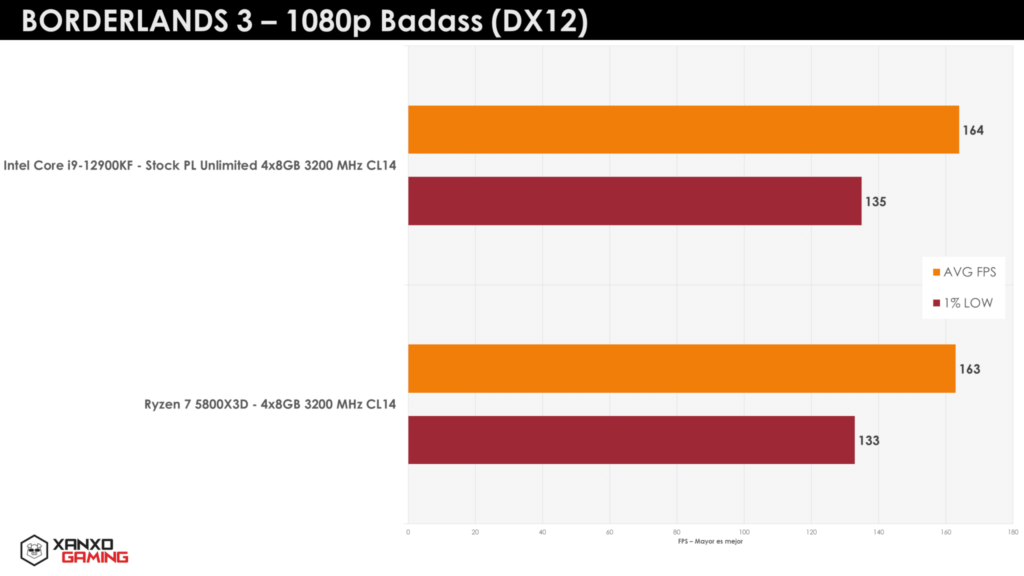

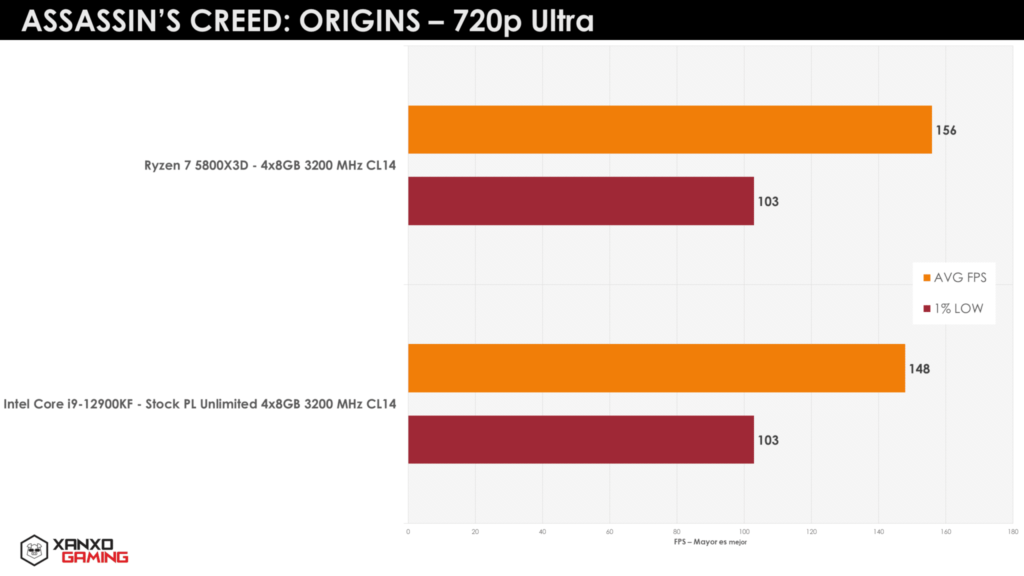

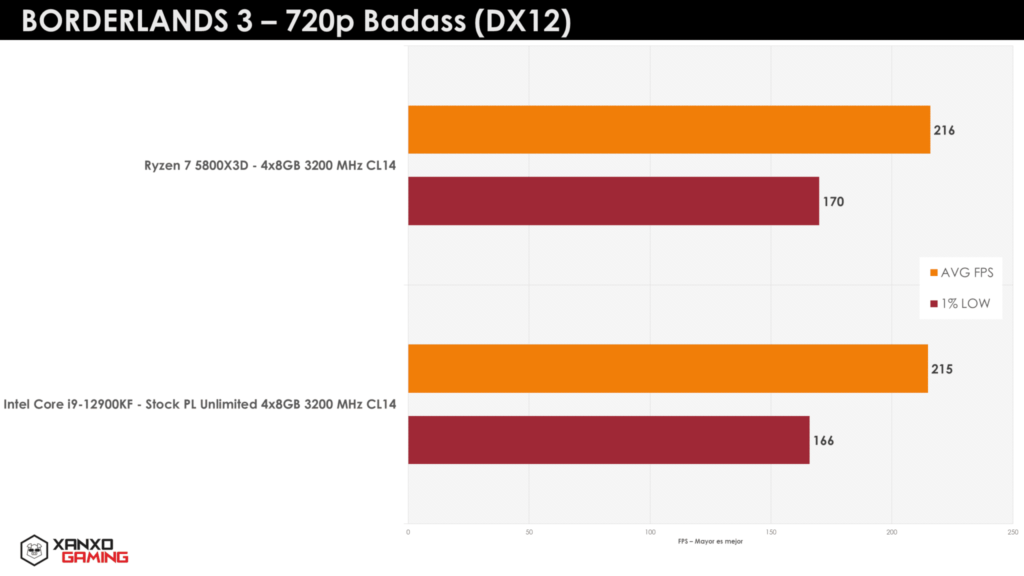

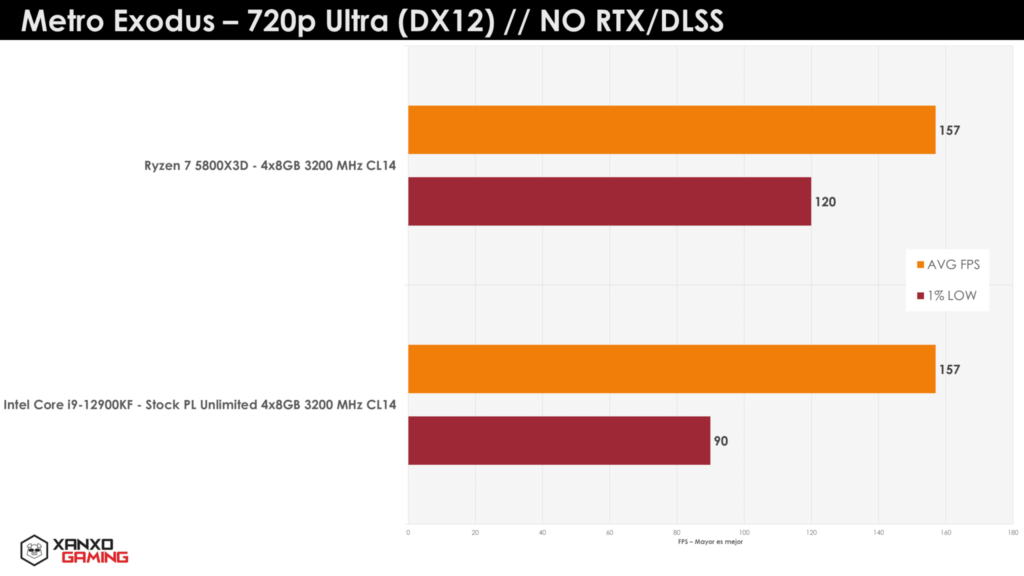

To sum up, with DDR4-3200 CL14 kits on both systems, three games in our test suite are tied. Assassin’s Creed Origins shows a small 5.4% win. The Death Stranding difference is small, but, as at 1080p and even with the game’s 240 FPS cap, AVG FPS and 1% LOW are a little higher on the Ryzen.

The same “phenomenon” we described for The Witcher 3 at 1080p also occurs in FFXV (at both 1080p and 720p). Results for the Core i9-12900KF with DDR4-3200 CL14 do not improve when lowering the resolution in our custom scene, while they do for the AMD Ryzen 7 5800X3D.

We presume this is a bandwidth cap, which is very punishing for the 12900K with DDR4-3200 CL14 in some titles, and that is where AMD’s solution (AMD 3D V-CACHE) pulls ahead.
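The reasoning above relies on resolution scaling: dropping from 1080p to 720p cuts GPU load, so if FPS barely rises, the limit lies elsewhere (CPU, memory bandwidth, or a frame cap). A minimal sketch of that check; the 3% threshold, the function names, and the sample numbers are hypothetical assumptions, not values from the article:

```python
# Hypothetical threshold: treat FPS gains below 3% as "not scaling".
SCALING_THRESHOLD_PCT = 3.0


def resolution_scaling(fps_high_res: float, fps_low_res: float) -> float:
    """Percent FPS gained by dropping to the lower resolution."""
    return (fps_low_res - fps_high_res) / fps_high_res * 100.0


def limited_outside_gpu(fps_high_res: float, fps_low_res: float,
                        threshold: float = SCALING_THRESHOLD_PCT) -> bool:
    """True if lowering resolution barely helps, hinting at a non-GPU bottleneck."""
    return resolution_scaling(fps_high_res, fps_low_res) < threshold


# Hypothetical 1080p -> 720p results for two systems.
print(limited_outside_gpu(150.0, 151.0))  # True: FPS is flat
print(limited_outside_gpu(150.0, 180.0))  # False: FPS scales by 20%
```

This check only says the GPU is not the limit; telling a CPU limit apart from a memory bandwidth limit still needs a test like the DDR4-3600 run described later.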

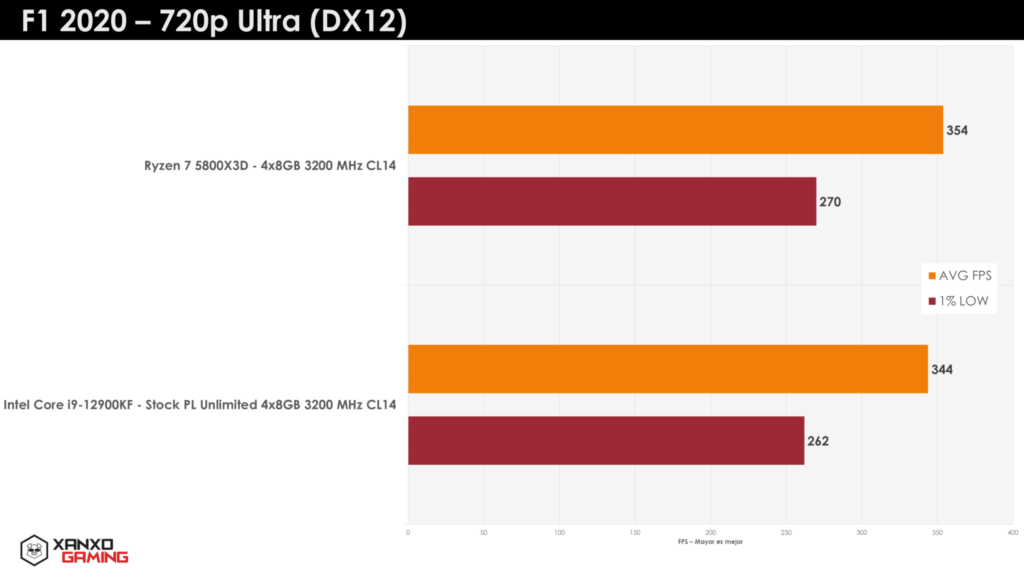

Some other games see a small increase with AMD 3D V-CACHE, such as F1 2020.

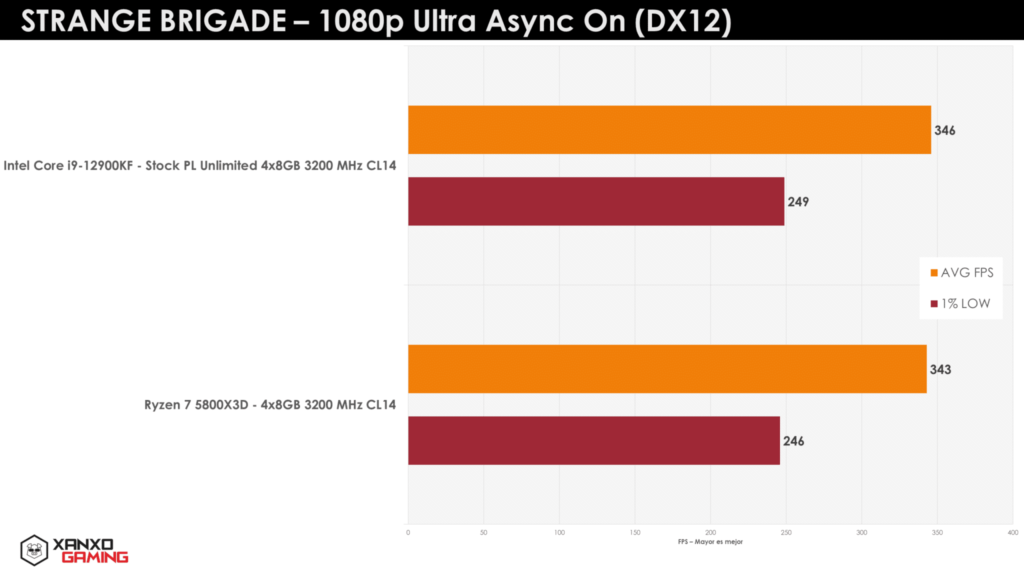

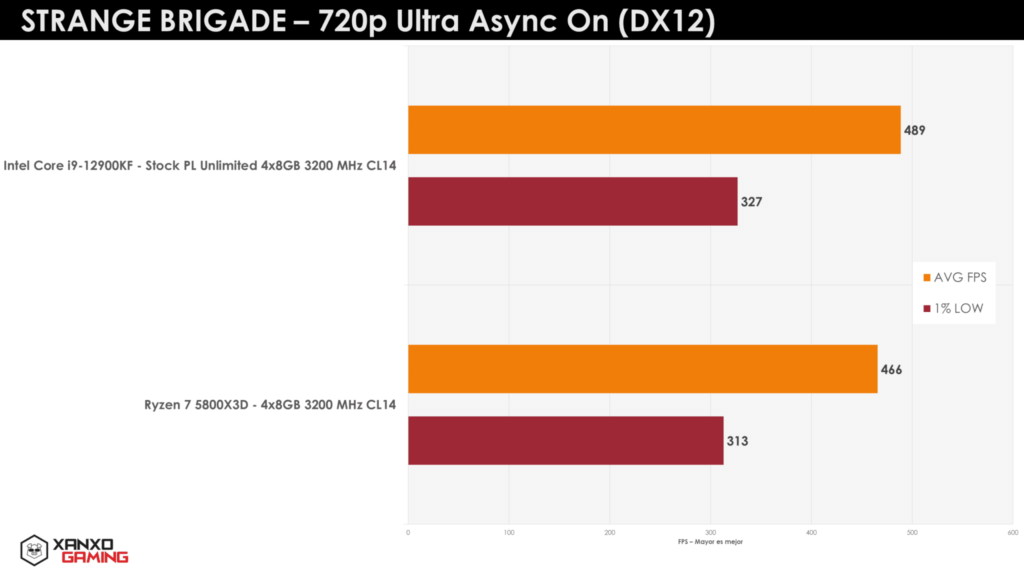

Intel has a small 5% win in Strange Brigade (DX12, Async On), which I believe comes down to Alder Lake-S IPC (which is far better, as seen in productivity benchmarks).

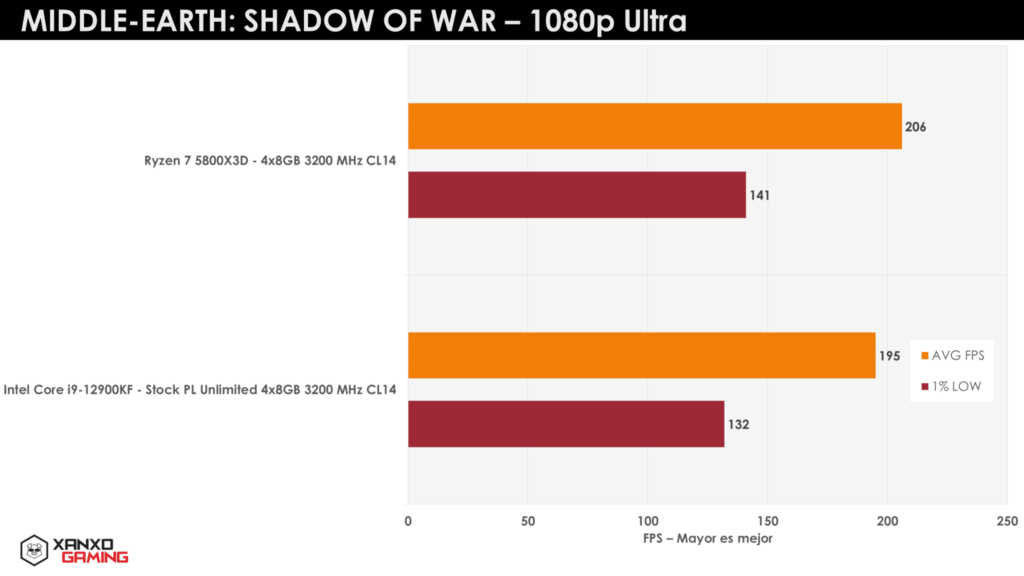

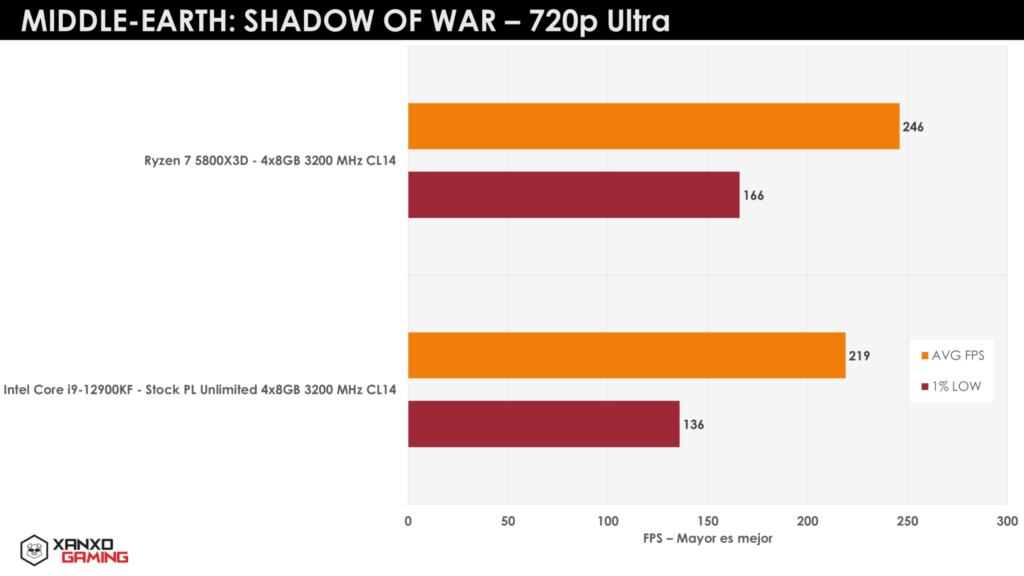

The AMD Ryzen 7 5800X3D also wins by 12.32% in Shadow of War at 720p.

Bonus: The Witcher 3 – Intel Core i9-12900K DDR4-3600 CL14 data (XanxoGaming)

To check whether this title in particular scales with memory speed, we installed four DIMMs of DDR4-3600 CL14 in our system. There was a clear improvement over DDR4-3200 CL14, so the next step is to benchmark the 12900K with DDR5-6200 C40.