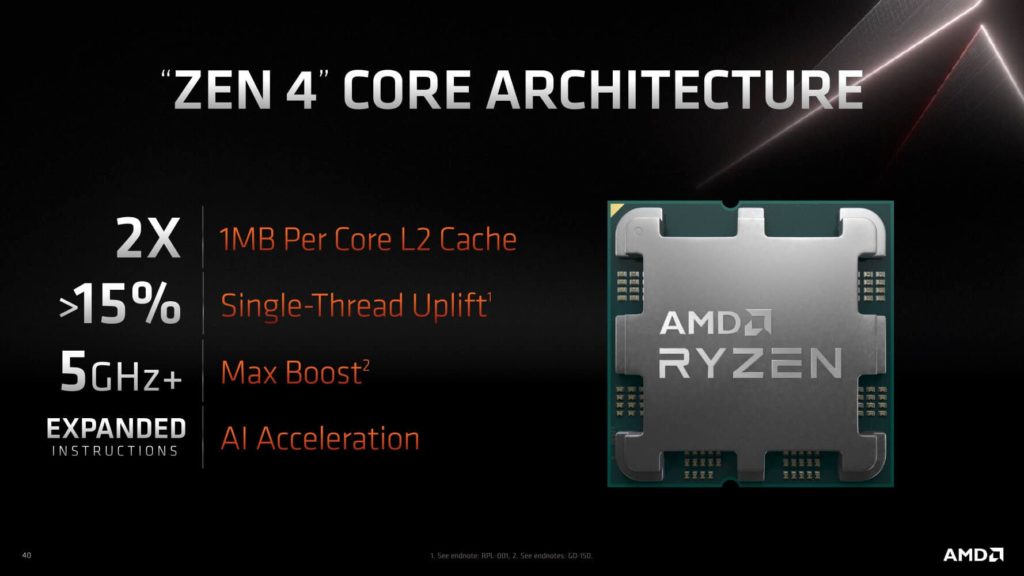

AMD users who plan to pick up the red team’s next flagship desktop processor later this year should expect a core and thread configuration similar to that of the current flagship. That is according to Robert Hallock, AMD’s Senior Technical Marketing Manager, who confirmed in a new interview with TechPowerUp that the maximum core configuration for the Ryzen 7000 Series at launch will be 16 cores and 32 threads, just like the Ryzen 9 5950X. The two should differ substantially in TDP, however: echoing an official statement that AMD shared yesterday, Hallock reiterated that the most power-hungry Ryzen 7000 Series processors could feature TDPs of up to 170 watts. Other topics that Hallock touches upon in the interview include the purpose of the new heat spreader design, the potential for overclocking, his thoughts on the transition from DDR4 to DDR5, and AMD’s future plans for the 3D Vertical Cache technology that it introduced with the Ryzen 7 5800X3D.

16-core, 32-thread is the maximum core configuration for the Ryzen 7000 at launch?

That is correct.

What are your thoughts on 3D Vertical Cache (3DV Cache) for Zen 4?

3DV Cache will absolutely be a continuing part of our roadmap. It is not a one-off technology. We are a big believer in packaging as a competitive advantage for AMD, something that could meaningfully enhance performance for people, but we have nothing specific to announce for Zen 4 yet.

Why the new heatspreader design? Why the holes on the sides?

It’s actually how we achieve cooler compatibility. If you flip over one of the AM4 processors, you’ll find a blank spot in the middle without pins, which has space for capacitors. That blank space is not available on Socket AM5, it has LGA pads across the entire bottom surface of the chip. We had to move those capacitors somewhere else. They don’t go under the heatspreader due to thermal challenges, so we had to put them on top of the package, which required us to make cutouts on the IHS to make room. Because of those changes we’re able to keep the same package size, length and width, same z-height, same socket keep-out pattern, and that’s what enables cooler compatibility with AM4.

What can we expect from the processors in terms of CPU overclocking?

I’m not gonna make a commitment yet on frequency, but what I will say is that 5.5 GHz was very easy for us. The Ghostwire demo was one of many games that achieved that frequency on an early-silicon prototype 16-core part with an off-the-shelf liquid cooler. We’re very excited about the frequency capabilities of Zen 4 on 5 nanometer; it’s looking really good, more to come.

How do you feel about the transition from DDR4 to DDR5?

AMD is betting on DDR5; there’s no DDR4 support in Zen 4. In the last months, we talked to many component vendors, module makers, etc., to confirm their supply roadmaps and timings and to avoid shortages. Everybody is coming back with very optimistic answers. DDR5 will be abundant in the lifetime of Socket AM5. The abundance and new demand from Socket AM5 will help bring pricing down.

Source: TechPowerUp