NVIDIA CEO Jensen Huang is aware that gamers and other enthusiasts aren’t thrilled with the pricing of the GeForce RTX 40 Series, but they should probably get used to it because prices will only continue to increase, according to remarks that the executive made during a recent conference call Q&A with reporters.

“Is there anything you would like to say to the community regarding pricing on the new generation of parts, as well as, can they expect to see better pricing at some point and basically address all the loud screams that I’m seeing everywhere?” asked PCWorld’s Gordon Ung.

“Moore’s Law is dead,” Huang responded. “And the ability for Moore’s Law to deliver twice the performance at the same cost, or the same performance at half the cost, every year and a half is over. It’s completely over.”

“And so the idea that the chip is going to go down in cost over time, unfortunately, is a story of the past,” the executive added.

From a PC Gamer report:

To be fair to Nvidia, we are looking at a complete switch in chip supplier—from Samsung to TSMC—between the 30-series and 40-series cards. Jensen points out that “a 12-inch wafer is a lot more expensive today than it was yesterday. And it’s not a little bit more expensive; it is a tonne more expensive.”

Since TSMC’s 4nm production capacity is highly sought after right now, we can only speculate just how much Nvidia is being charged for manufacturing the Ada Lovelace processors. Ultimately, we can’t expect Nvidia to swallow all the extra manufacturing costs if that’s been the case; some of it will inevitably trickle down to the consumer.

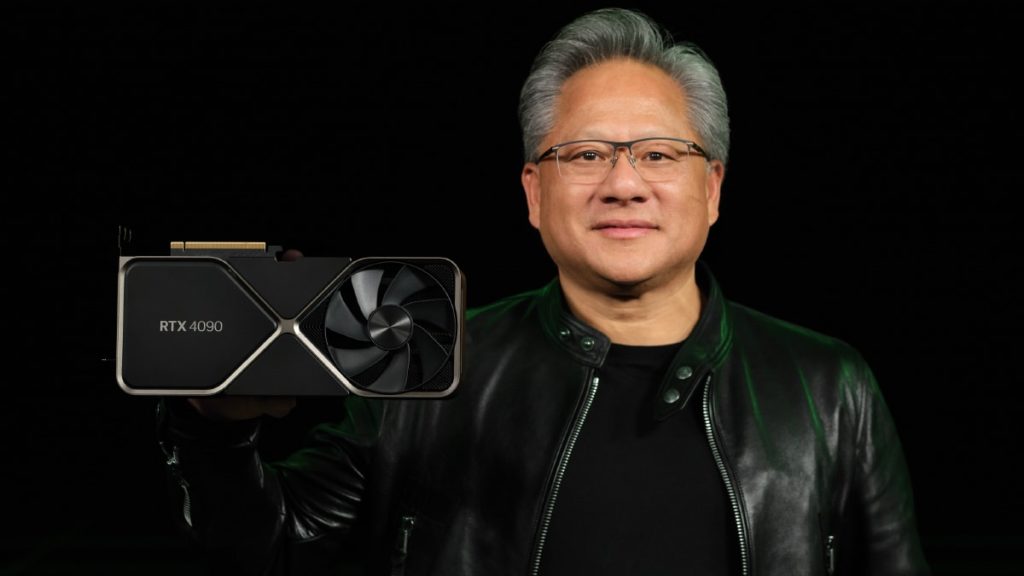

NVIDIA announced the GeForce RTX 40 Series this week, revealing two new graphics cards: the GeForce RTX 4090 and the GeForce RTX 4080, the latter of which will be available in 16 GB and 12 GB options. The GeForce RTX 4090 will be the first to launch, releasing on October 12 with a starting MSRP of $1,599.