Introduction

On September 16th, 2020, NVIDIA launched the new GeForce RTX 3080 Founders Edition video card based on the next-gen Ampere architecture. This was also the announcement of the GeForce RTX 30 Series itself, with two other cards announced alongside the launch: the GeForce RTX 3090 and the GeForce RTX 3070. The Ampere architecture powers the entire GeForce RTX 30 Series.

The GeForce RTX 3080 Founders Edition launched at an MSRP of $699 and replaces the GeForce RTX 2080 and GeForce RTX 2080 SUPER. Those video cards also launched at $699 when they were released in 2018 and 2019 respectively.

In our review, we found the GeForce RTX 3080 Founders Edition provided a performance bump of over 50% over the GeForce RTX 2080 FE at 1440p and an even bigger 70% advantage at 4K. We also found the GeForce RTX 3080 beat the GeForce RTX 2080 Ti FE (NVIDIA’s previous flagship) by over 20% at 1440p and over 25% at 4K. That means faster than GeForce RTX 2080 Ti FE performance (a $1200 card) for $699 with the GeForce RTX 3080 Founders Edition, saving you $500 while giving you more performance.
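To put those numbers in perspective, here is a minimal sketch of the performance-per-dollar arithmetic. It uses only the figures quoted above ($699, $1200, and the "over 20%" and "over 25%" leads), so the results should be read as rough lower bounds. The function name `perf_per_dollar_ratio` is ours, purely for illustration.

```python
def perf_per_dollar_ratio(speedup: float, new_price: float, old_price: float) -> float:
    """Return how many times more performance per dollar the new card offers.

    speedup is new performance divided by old performance (1.20 = 20% faster).
    """
    return speedup * old_price / new_price


# RTX 3080 FE ($699) versus RTX 2080 Ti FE ($1200), using the review's
# "over 20%" (1440p) and "over 25%" (4K) figures as conservative inputs.
ratio_1440p = perf_per_dollar_ratio(1.20, new_price=699, old_price=1200)
ratio_4k = perf_per_dollar_ratio(1.25, new_price=699, old_price=1200)

print(f"1440p: at least {ratio_1440p:.2f}x the performance per dollar")
print(f"4K:    at least {ratio_4k:.2f}x the performance per dollar")
```

In other words, at these prices the GeForce RTX 3080 FE delivers a little more than twice the performance per dollar of the GeForce RTX 2080 Ti FE.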

Enter the RTX 3070

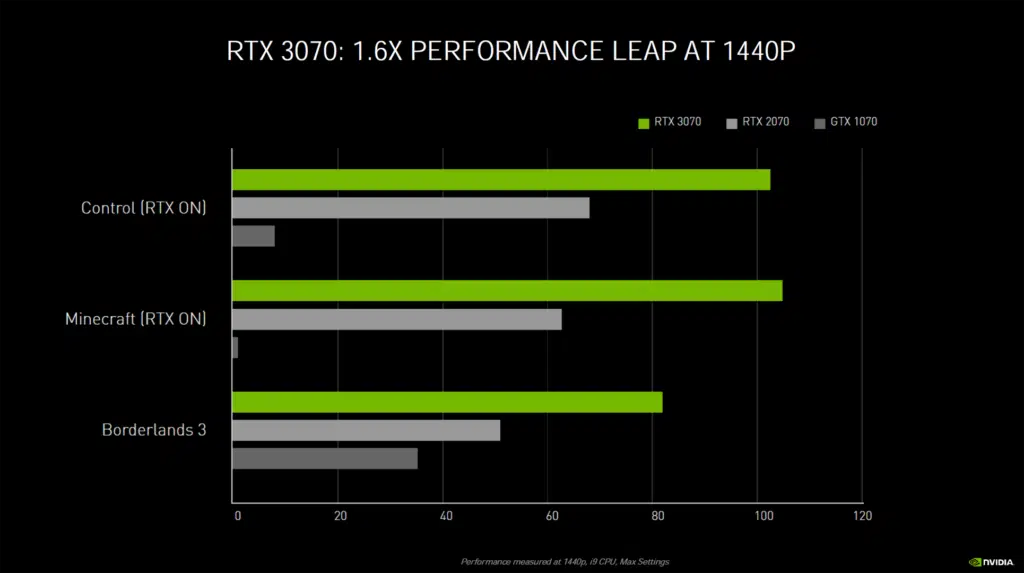

Now we come to NVIDIA’s next entrant in the GeForce RTX 30 Series lineup, the GeForce RTX 3070 Founders Edition video card. This is the one most of you have been waiting to see. At an MSRP of $499, it sits at a much more appealing and reachable price point for gamers. Note that add-in-board partner custom video cards will be available on October 29th.

The question on everyone’s mind is whether this beast is going to provide faster than GeForce RTX 2080 Ti Founders Edition performance for a much lower price. Prior to its launch, the rumor mill was churning, indicating that this video card would offer GeForce RTX 2080 Ti FE-like performance, or faster. We will find out if that is true or not.

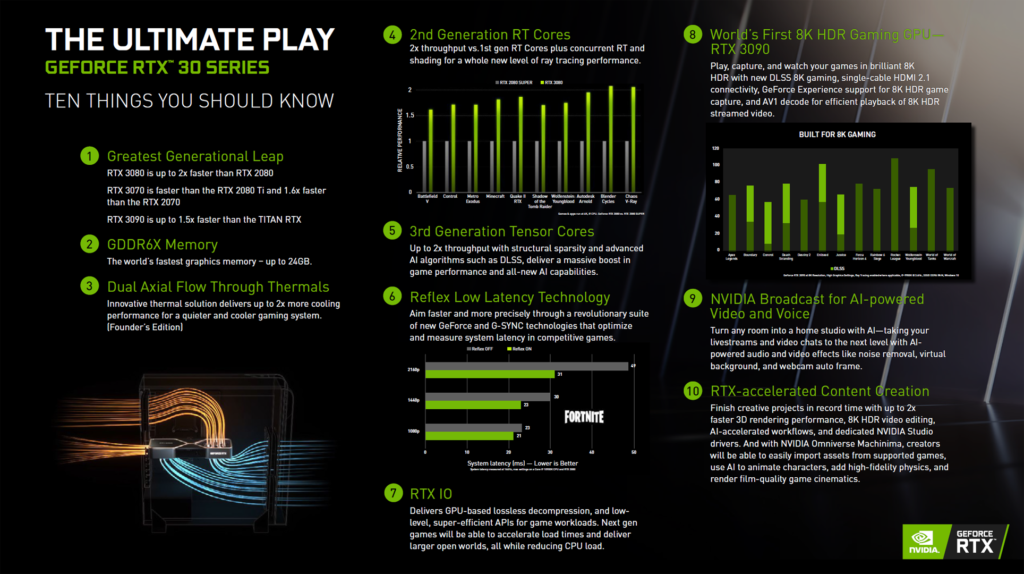

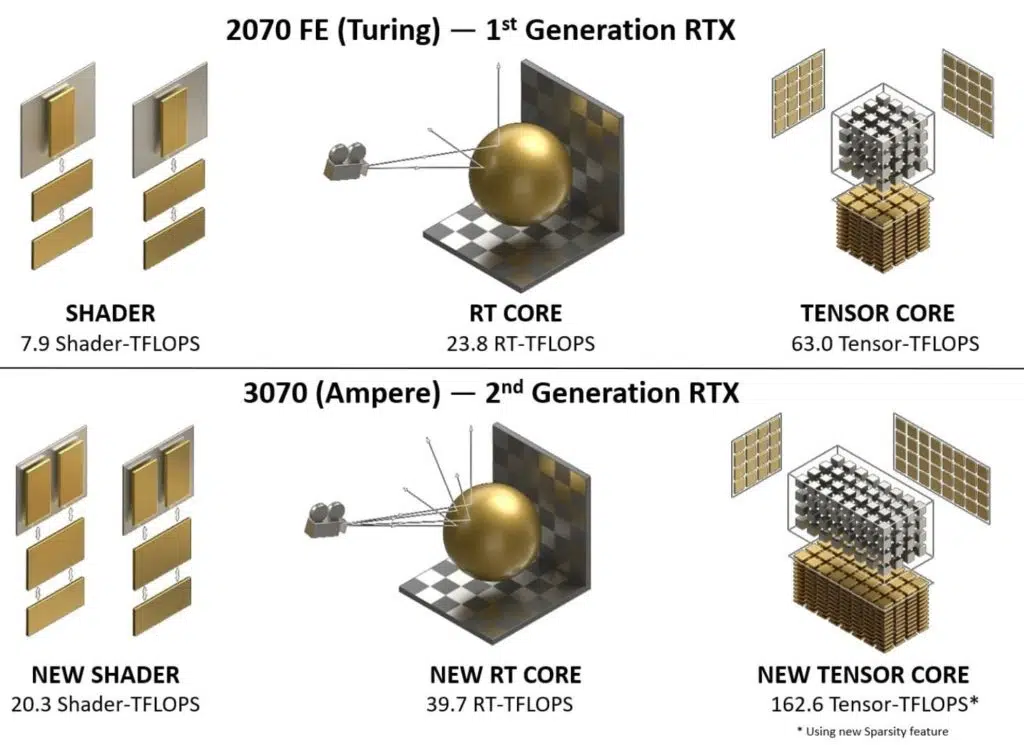

We are not going to dive into the architecture explanation in this review. If you want to see what makes the new next-gen Ampere architecture tick, and what it offers, please read our GeForce RTX 3080 Founders Edition review. We suggest you do so before reading this one, as that review goes over the advantages in new RT Core performance, Tensor Core performance, and extra FP32 power in the CUDA Cores. Those advancements are at the heart of the GeForce RTX 3070 as well. In addition, there are new technologies to look at like NVIDIA Reflex Low Latency Technology, RTX IO, and NVIDIA Broadcast.

GeForce RTX 3070 Founders Edition Specs

| | GeForce RTX 3070 FE | GeForce RTX 2070 FE | GeForce RTX 2070 SUPER FE | GeForce RTX 2080 Ti FE |
|---|---|---|---|---|
| Architecture / Process | Ampere 8nm | Turing 12nm FinFET | Turing 12nm FinFET | Turing 12nm FinFET |
| CUDA Cores | 5888 | 2304 | 2560 | 4352 |
| Tensor Cores | 184 | 288 | 320 | 544 |
| RT Cores | 46 | 36 | 40 | 68 |
| Texture Units | 184 | 144 | 160 | 272 |
| ROPs | 96 | 64 | 64 | 88 |
| GPU Boost | 1725MHz | 1710MHz | 1770MHz | 1545MHz |
| Memory | 8GB 14GHz GDDR6 448GB/s | 8GB 14GHz GDDR6 448GB/s | 8GB 14GHz GDDR6 448GB/s | 11GB 14GHz GDDR6 616GB/s |
| TGP | 220W | 185W | 215W | 250W |
| MSRP | $499 | $499 base / $599 FE | $499 | $1199 |

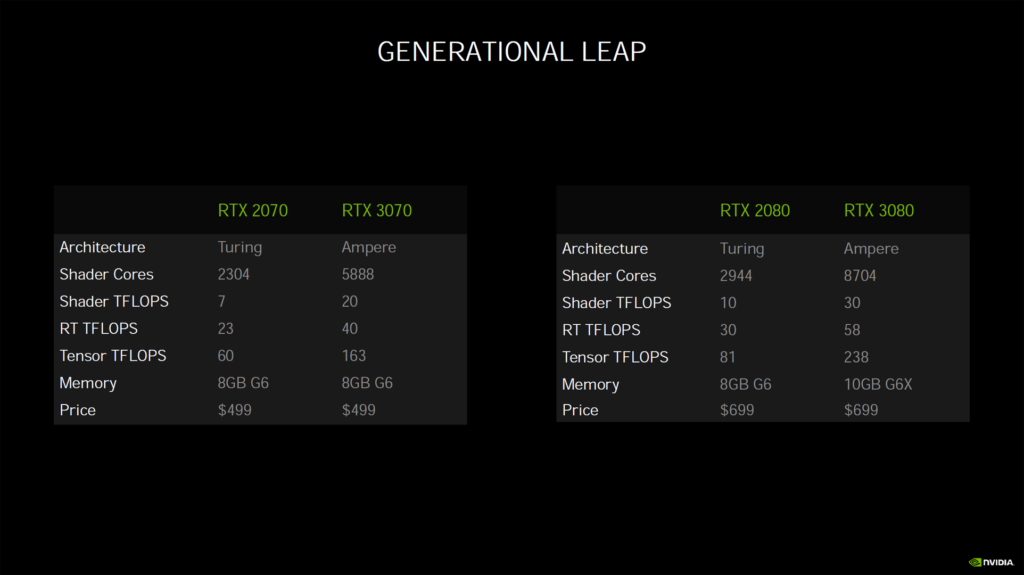

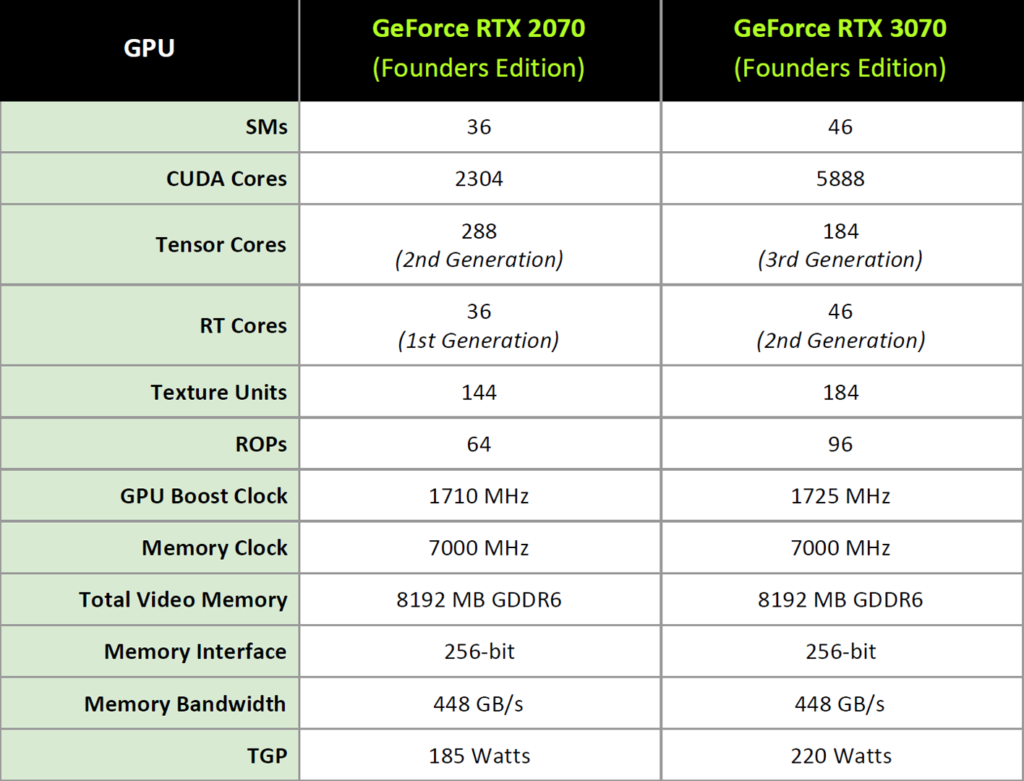

The GeForce RTX 3070 Founders Edition has an MSRP of $499. It is based on the Samsung 8nm manufacturing process, and Ampere architecture. It has 46 SMs, 5,888 CUDA Cores, 184 Tensor Cores (3rd gen), 46 RT Cores (2nd gen), 184 Texture Units, and 96 ROPs. It has a GPU Boost Clock of 1725MHz. It has 8GB of GDDR6 memory at 14GHz on a 256-bit bus providing 448GB/s of memory bandwidth. TGP is 220 Watts.
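For readers who like to see where these numbers come from, the unit counts and memory bandwidth can be derived from the SM count and memory configuration. The sketch below is illustrative only: the per-SM constants are our assumptions for this class of Ampere GPU, chosen because they reproduce the figures quoted above, and the function names are hypothetical.

```python
# Assumed per-SM unit counts for the RTX 3070's Ampere GPU. These are not
# stated in the review; they are the values that reproduce its spec list.
CUDA_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
RT_CORES_PER_SM = 1
TEXTURE_UNITS_PER_SM = 4


def units_from_sms(sm_count: int) -> dict:
    """Derive per-GPU unit counts from the number of SMs."""
    return {
        "cuda_cores": sm_count * CUDA_CORES_PER_SM,
        "tensor_cores": sm_count * TENSOR_CORES_PER_SM,
        "rt_cores": sm_count * RT_CORES_PER_SM,
        "texture_units": sm_count * TEXTURE_UNITS_PER_SM,
    }


def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Bandwidth in GB/s: per-pin data rate (Gbps) times bus width, over 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


rtx_3070_units = units_from_sms(46)               # 46 SMs
rtx_3070_bandwidth = memory_bandwidth_gbs(14.0, 256)  # 14GHz effective, 256-bit bus

print(rtx_3070_units)
print(f"{rtx_3070_bandwidth:.0f} GB/s")
```

With 46 SMs, this works out to the 5,888 CUDA Cores, 184 Tensor Cores, 46 RT Cores, and 184 Texture Units listed above, and 14GHz memory on a 256-bit bus gives the quoted 448GB/s.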

Price Comparison

The appropriate price comparison from the last generation is actually the GeForce RTX 2070, but not the Founders Edition. You see, when the GeForce RTX 2070 launched in 2018, NVIDIA had two variants of it. There was the regular, or base, GeForce RTX 2070 video card, which had an MSRP of $499 and a GPU Boost Clock of 1620MHz. Then, NVIDIA sold its own variant of the GeForce RTX 2070, called the GeForce RTX 2070 Founders Edition, for $599. The Founders Edition had a higher 1710MHz GPU Boost Clock, but that was the only difference.

Therefore, the Founders Edition had slightly higher performance, but a $100 higher price. However, with add-in-board manufacturers customizing cards, their factory boost clocks matched or surpassed the Founders Edition boost clock anyway. Those video cards started at the $499 base price of the reference design and went up from there. Then came the GeForce RTX 2070 SUPER in 2019, which cleared up this mess and debuted with an MSRP of $499 as well. However, it was a much faster video card than the original RTX 2070/Founders Edition.

Therefore, the appropriate price comparison to the last generation is the GeForce RTX 2070, either the base one or the Founders Edition. The GeForce RTX 2070 SUPER Founders Edition is also a comparison point, since it also debuted at $499 in 2019. We therefore include both the GeForce RTX 2070 Founders Edition and the GeForce RTX 2070 SUPER Founders Edition in this review, since the GeForce RTX 2070 started at $499 in its base form and the GeForce RTX 2070 SUPER debuted at $499.

RTX 2070 and SUPER Comparison Specs

The GeForce RTX 2070 FE, by comparison, had 36 SMs, 2,304 CUDA Cores, 288 Tensor Cores (2nd gen), 36 RT Cores (1st gen), 144 Texture Units, and 64 ROPs. It was clocked at 1710MHz GPU Boost Clock (Founders Edition). Interestingly, it also had 8GB of GDDR6 at 14GHz on a 256-bit bus offering 448GB/s. The TGP was 185W. It launched at $499 for the base card and $599 for the FE.

The GeForce RTX 2070 SUPER FE, by comparison, had 40 SMs, 2,560 CUDA Cores, 320 Tensor Cores (2nd gen), 40 RT Cores (1st gen), 160 Texture Units, and 64 ROPs. It was clocked at 1770MHz GPU Boost Clock. It also had 8GB of GDDR6 at 14GHz on a 256-bit bus offering 448GB/s of bandwidth. The TGP was 215W. It launched at $499.
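As a quick way to size up the generational differences on paper, the sketch below computes the percent change in a few headline specs going from each last-generation card to the GeForce RTX 3070 FE. The numbers are taken from the spec lists above; the `specs` dictionary and the `percent_change` helper are just our own illustrative names. Keep in mind that unit counts across different architectures are not a direct measure of gaming performance.

```python
# Spec figures as listed in this review.
specs = {
    "RTX 3070 FE":       {"cuda_cores": 5888, "rt_cores": 46, "boost_mhz": 1725, "tgp_w": 220},
    "RTX 2070 FE":       {"cuda_cores": 2304, "rt_cores": 36, "boost_mhz": 1710, "tgp_w": 185},
    "RTX 2070 SUPER FE": {"cuda_cores": 2560, "rt_cores": 40, "boost_mhz": 1770, "tgp_w": 215},
}


def percent_change(new: float, old: float) -> float:
    """Percent change from old to new (positive means the new value is higher)."""
    return (new - old) / old * 100


new_card = specs["RTX 3070 FE"]
for old_name in ("RTX 2070 FE", "RTX 2070 SUPER FE"):
    old_card = specs[old_name]
    print(f"RTX 3070 FE vs {old_name}:")
    for key, new_value in new_card.items():
        change = percent_change(new_value, old_card[key])
        print(f"  {key:<10} {change:+.1f}%")
```

The takeaway on paper is that the CUDA Core count more than doubles against both Turing cards, while memory capacity and bandwidth are identical and the TGP rises only modestly.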