Introduction

It’s here! It’s here! It finally came! Cyberpunk 2077 from CD Projekt Red now supports Ray Tracing on AMD Radeon RX 6000 series video cards! It took more than three months after the game’s launch to get that support, something that should have been ready on day one. Patch 1.2 adds Ray Tracing support for the Radeon RX 6000 series, which means you can now turn on Ray-Traced Reflections, Shadows, and Lighting in Cyberpunk 2077 on the Radeon RX 6900 XT, Radeon RX 6800 XT, Radeon RX 6800, and Radeon RX 6700 XT.

Patch 1.2 also brings a ton of other changes to the game. The list of fixes is really quite extensive, and there are graphics updates as well: improved rendering of distant textures, visual quality adjustments for elements underwater, higher-quality material detail, improved interior and exterior light sources, adjusted dirt quality, and improved foliage destruction visuals. There are also various optimizations to shadows, shaders, physics, the animation system, the occlusion system, and facial animations. There’s really a whole lot more here.

To find out how demanding Ray Tracing is, and what kind of performance you’ll experience, we decided to go test it. This is a quick, no-frills, straight-to-the-point, down-and-dirty performance comparison. We are taking the AMD Radeon RX 6800 XT and the NVIDIA GeForce RTX 3080 FE and putting them head-to-head with Ray Tracing in Cyberpunk 2077.

We are also going to find out how much performance drops when you enable these features on each video card. We will test the built-in presets, and we will also manually enable Reflections only, then Shadows only, then Lighting only, to see how each feature performs individually.
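The performance drop from enabling a Ray-Traced feature is usually expressed as a percentage of the frame rate without it. The sketch below shows that arithmetic; the function name `percent_drop` is a hypothetical helper for illustration, not part of any benchmarking tool we used, and the FPS values in the example are placeholders rather than measured results.

```python
def percent_drop(baseline_fps: float, rt_fps: float) -> float:
    """Return how much slower rt_fps is than baseline_fps, in percent.

    baseline_fps: average FPS with the Ray-Traced feature off.
    rt_fps: average FPS with the Ray-Traced feature on.
    """
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (baseline_fps - rt_fps) / baseline_fps * 100.0


if __name__ == "__main__":
    # Placeholder numbers only, purely to show the calculation.
    print(f"{percent_drop(80.0, 60.0):.1f}% drop")
```

For example, going from 80 FPS to 60 FPS is a 25% drop, because the 20 FPS lost is a quarter of the original 80 FPS.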

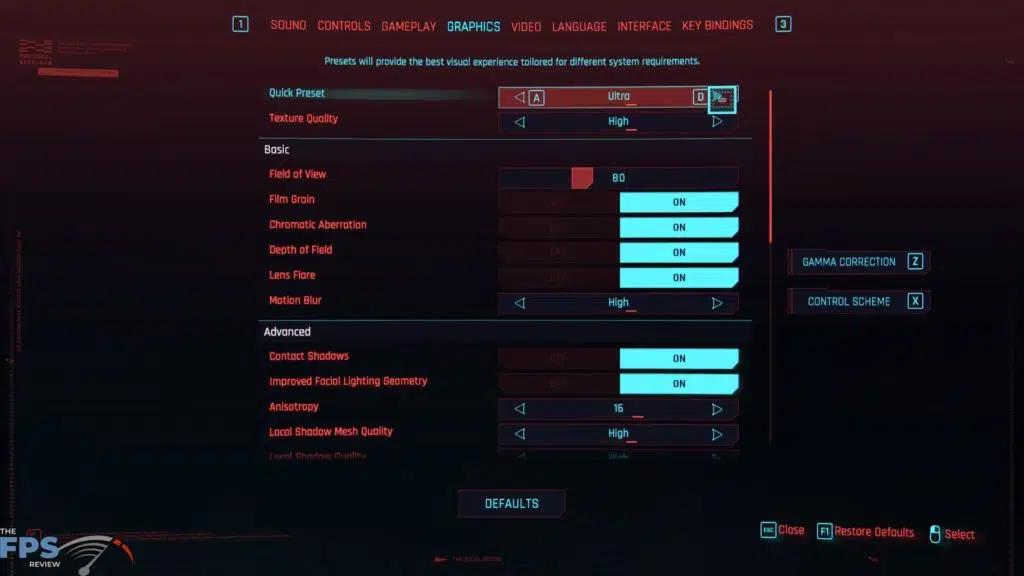

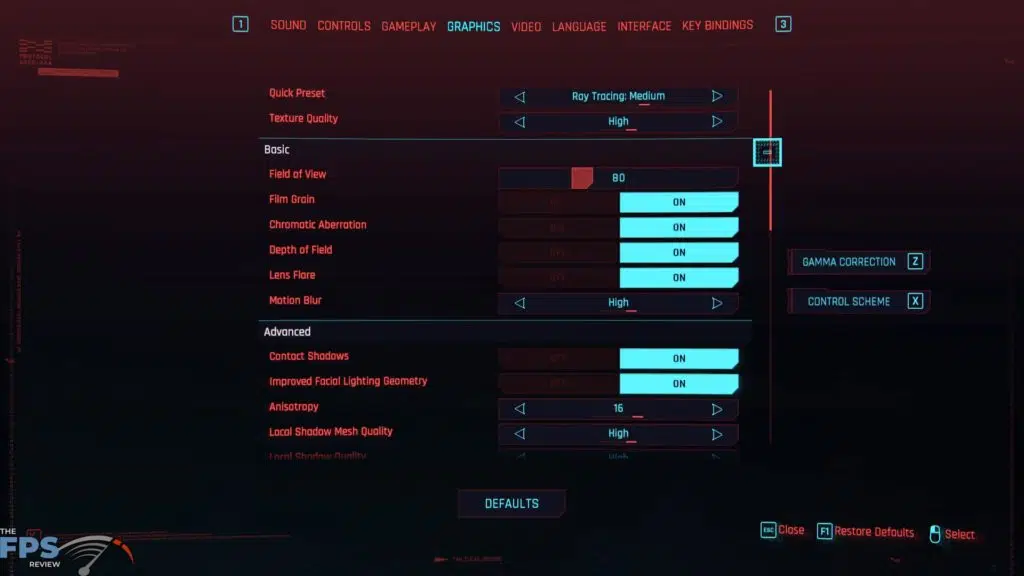

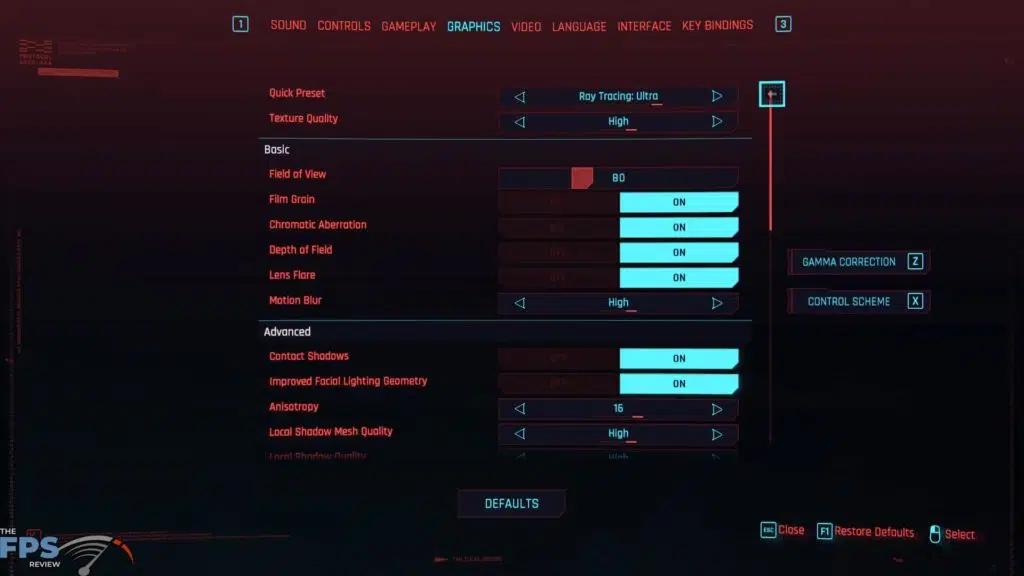

Cyberpunk 2077 Ray Tracing Menu

OK, let’s look at how you enable Ray Tracing on the Radeon RX 6800 XT. Just like on the GeForce RTX 3080 FE, you can select different “Quick Preset” options at the top. Setting this to “Ultra” gives you the highest game settings, but no Ray Tracing.

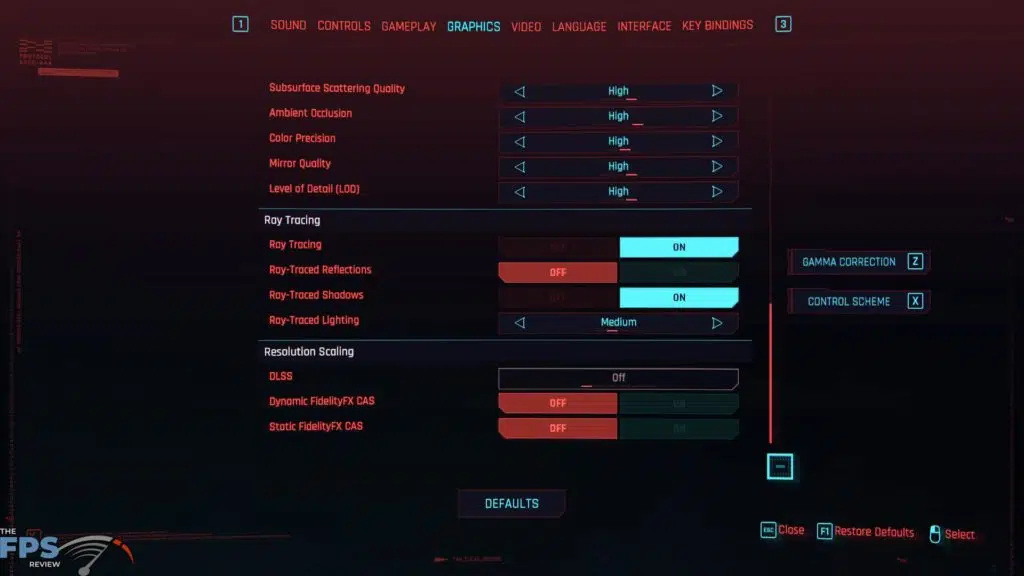

There are two built-in presets for Ray Tracing: Ray Tracing Medium and Ray Tracing Ultra. Ray Tracing Medium keeps Ray-Traced Reflections OFF, turns Ray-Traced Shadows ON, and sets Ray-Traced Lighting to “Medium.” Ray Tracing Ultra enables everything: Ray-Traced Reflections and Ray-Traced Shadows are ON, and Ray-Traced Lighting is set to “Ultra.” Ray-Traced Lighting actually has one higher setting called “Psycho,” but don’t dare turn it on; it kills performance on pretty much everything.
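To make the preset differences easy to compare at a glance, here is a small sketch that encodes the three Quick Presets described above as plain data. The class and field names are hypothetical and only mirror the menu options; representing “no Ray-Traced Lighting” as the string `"Off"` is our assumption about how to model that state, not something taken from the game’s files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RayTracingPreset:
    """Ray-Traced settings applied by one Quick Preset (hypothetical model)."""
    reflections: bool
    shadows: bool
    lighting: str  # "Off", "Medium", "Ultra", or "Psycho"


# Quick Presets as described in the in-game menu.
PRESETS = {
    # "Ultra" is the highest raster preset, with no Ray Tracing at all.
    "Ultra": RayTracingPreset(reflections=False, shadows=False, lighting="Off"),
    "Ray Tracing Medium": RayTracingPreset(reflections=False, shadows=True, lighting="Medium"),
    "Ray Tracing Ultra": RayTracingPreset(reflections=True, shadows=True, lighting="Ultra"),
}

if __name__ == "__main__":
    for name, preset in PRESETS.items():
        print(name, preset)
```

Note that no preset selects “Psycho” lighting; you have to set that manually.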

Since you can also go into each option manually and turn on just one Ray-Traced feature to save performance, we are going to test each one individually as well. This way, we can see which features put the biggest burden on the GPU and how they compare.

Resizable BAR

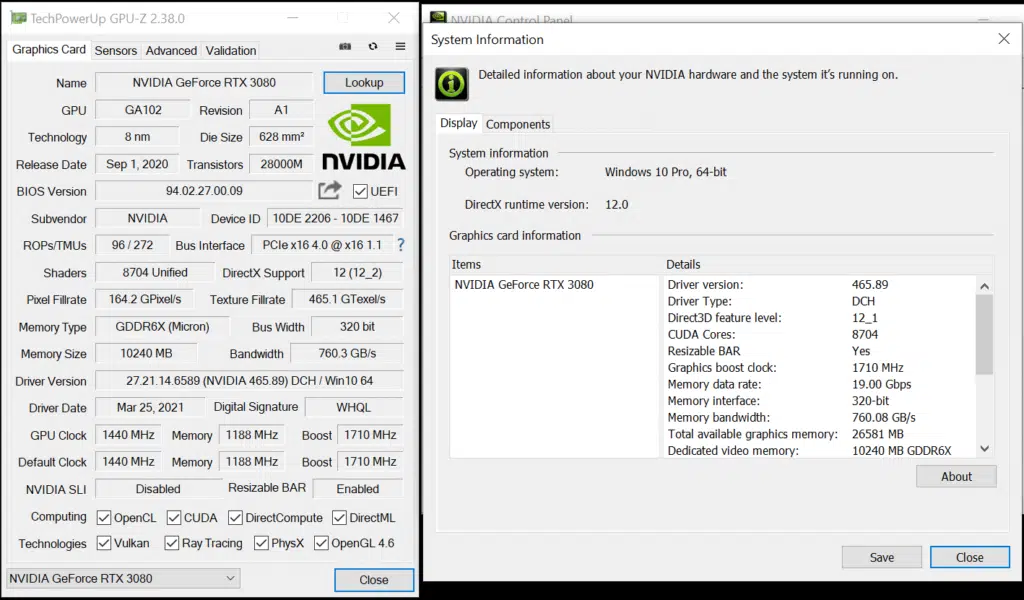

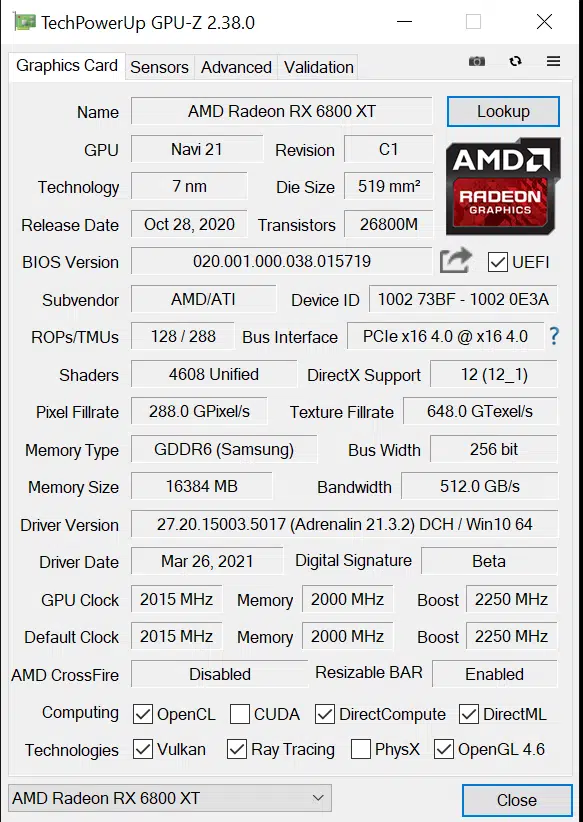

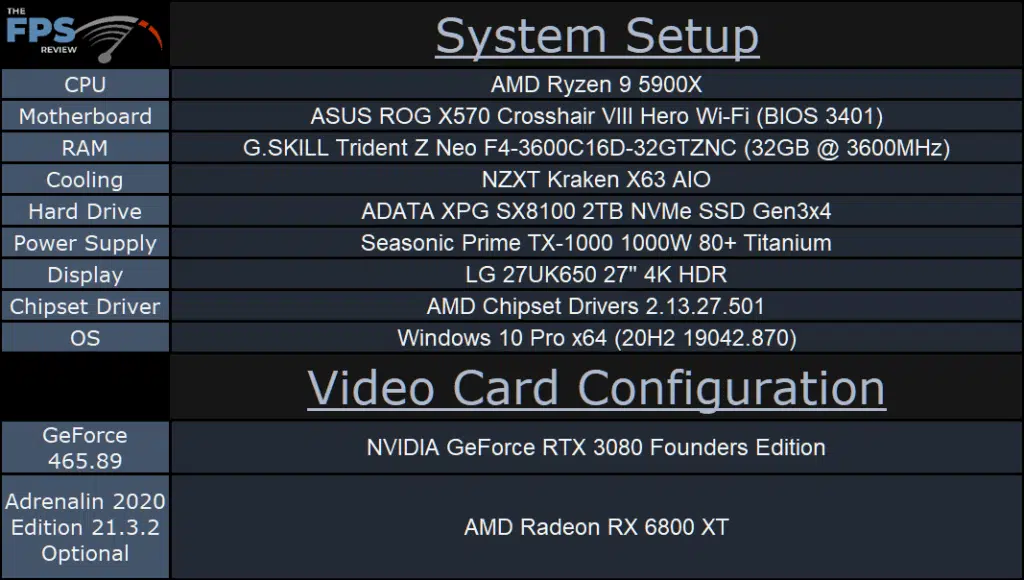

Before we begin, just a couple of notes on the system setup. For this review, we are using a Ryzen 9 5900X. Resizable BAR is enabled on both video cards (AMD brands it as Smart Access Memory on Radeon). We applied the latest motherboard BIOS and enabled Resizable BAR in it, and we also applied the new VBIOS for the GeForce RTX 3080 FE, which adds Resizable BAR support. This means Resizable BAR is active on both video cards here today, as you can see above. We are using the latest drivers for each video card: GeForce 465.89 and AMD Adrenalin 2020 Edition 21.3.2 Optional.