Gaming Performance

Now we come to the testing we are sure you have all been waiting for. We were anxious to get to this part too, to see how gaming performance shakes out. To cover all the bases and get the big picture, we tested at 1080p, 1440p, and 4K to see, from top to bottom, how these CPUs affect performance in games. For testing, we are using an NVIDIA GeForce RTX 3080 Ti Founders Edition video card. A card this fast makes it likely that we are CPU bound at 1080p, while some games will still be GPU bound at 4K. Testing all three resolutions gives us a good mix for seeing where the CPU matters and where it doesn't. Oh, and yes, we are testing the new Battlefield 2042 as well!

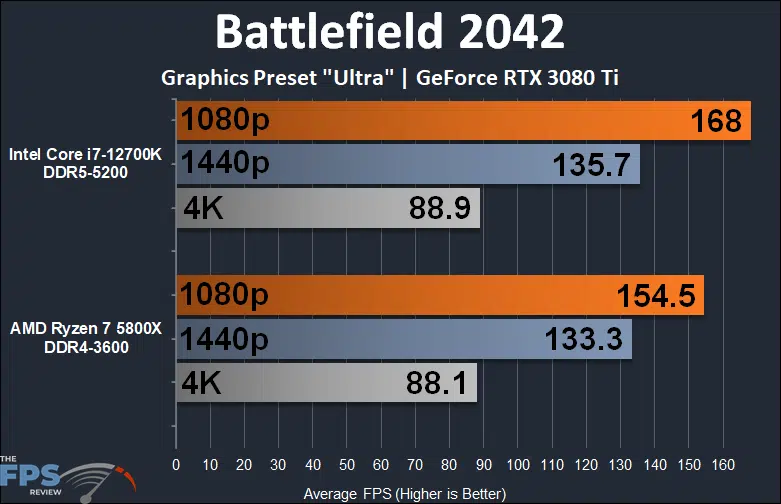

Battlefield 2042

Let’s start with the newest game on the block, Battlefield 2042. Because it is a multiplayer game, it is hard to test consistently in a live environment. We therefore created a private Portal server and tested with no players, since that is the only way we can ensure a consistent run-through. We set up a custom Team Deathmatch game and performed a manual run-through of the whole map.

This graph may not show what you expected. We are seeing a real difference in gaming performance between the CPUs at 1080p, and a slight one at 1440p. At 1080p, the Intel Core i7-12700K is 9% faster than the AMD Ryzen 7 5800X, which is not an insignificant amount. At 1440p the gap shrinks to 2% in favor of the 12700K. Once we get to 4K, this game is completely GPU bound, even with the RTX 3080 Ti.

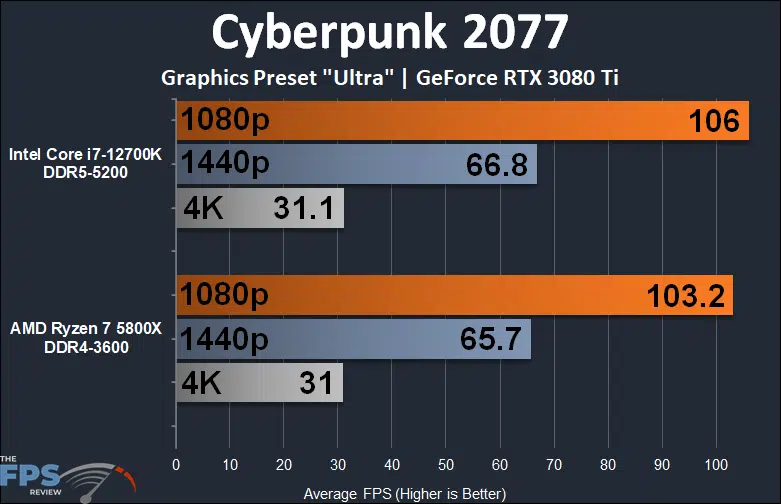

Cyberpunk 2077

When you think of Cyberpunk 2077, you typically expect it to be completely GPU dependent. However, at 1080p we do find a difference between these CPUs. The new Intel Core i7-12700K is once again faster, by 3% over the 5800X. It isn’t much, but with an even faster GPU this gap would likely widen. At 1440p and 4K we are GPU bound, and there isn’t any real-world difference.

Far Cry 6

Far Cry 6 is another game where, wow, we did not expect results this different. The Intel Core i7-12700K is simply faster in this game at every resolution, even with the RTX 3080 Ti. At 1080p, the 12700K is 22% faster than the Ryzen 7 5800X, which is a major difference. This is nothing to scoff at: it is a real performance difference that can make or break high refresh rate gaming. Even at 1440p, the 12700K is 15% faster than the 5800X, another significant margin. The difference is smallest at 4K, but it is still 3%.

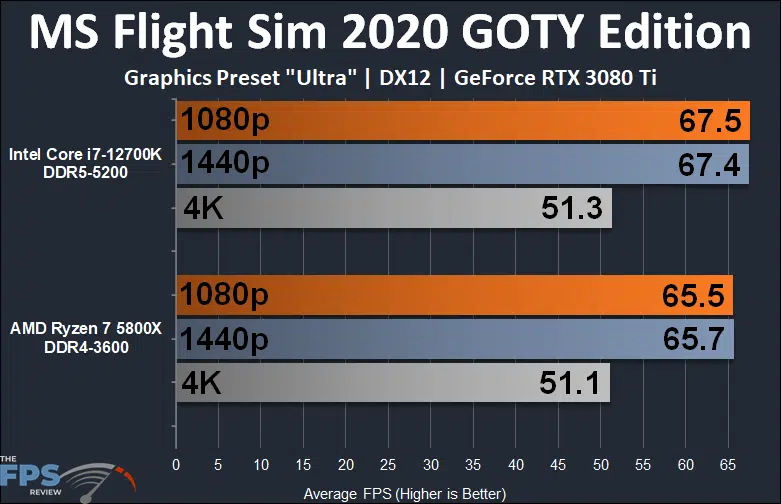

Microsoft Flight Simulator 2020 Game of the Year Edition

We have installed the latest major patch for Microsoft Flight Simulator 2020, which brings it up to the new Game of the Year Edition. This edition brings major performance changes, including a new DX12 API mode, which we are using for today’s testing.

To be honest, we were surprised to find no differences in Flight Sim 2020. Running the game in the new DX12 API mode, we see no difference between the CPUs at any resolution. The DX12 mode is new for this game and still in beta, so this could change in either direction as it is further optimized.

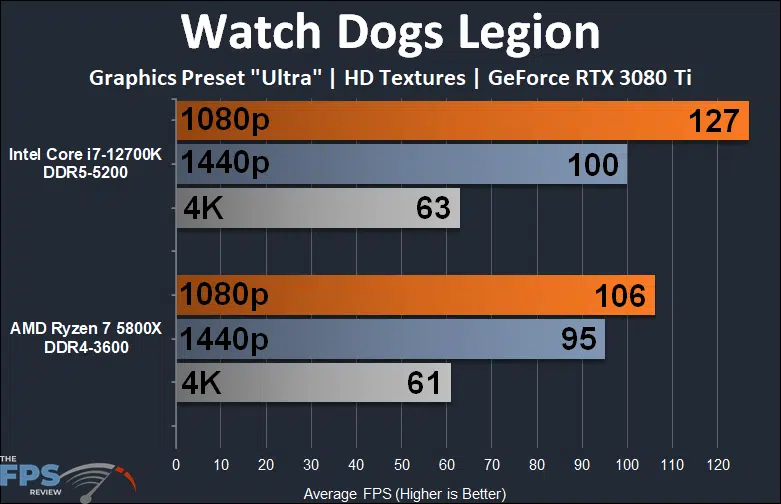

Watch Dogs Legion

Watch Dogs Legion is another game where we see a performance difference between the CPUs at 1080p and 1440p. At 1080p, the new Intel Core i7-12700K is 20% faster than the AMD Ryzen 7 5800X, again a significant difference for a game. Even at 1440p, the 12700K is 5% faster than the 5800X. Only at 4K are they much closer, and even there the 12700K is still 3% faster.

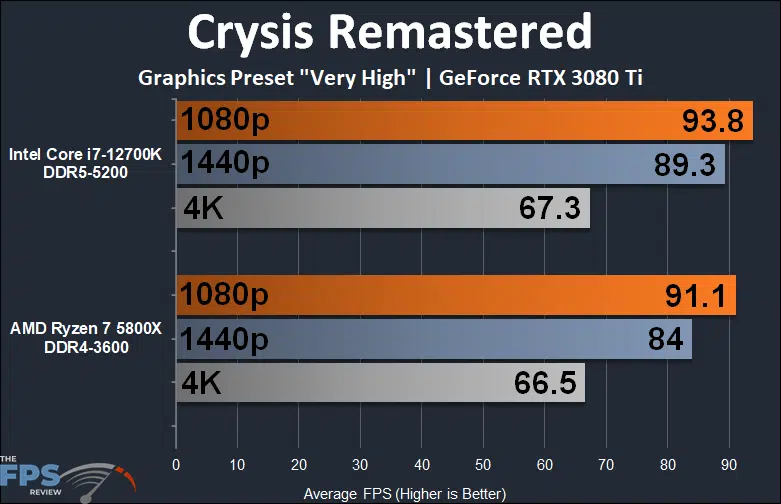

Crysis Remastered

In Crysis Remastered, we also find a small difference at 1080p and 1440p. At 1080p the 12700K is 3% faster, and at 1440p it is 6% faster.