Introduction

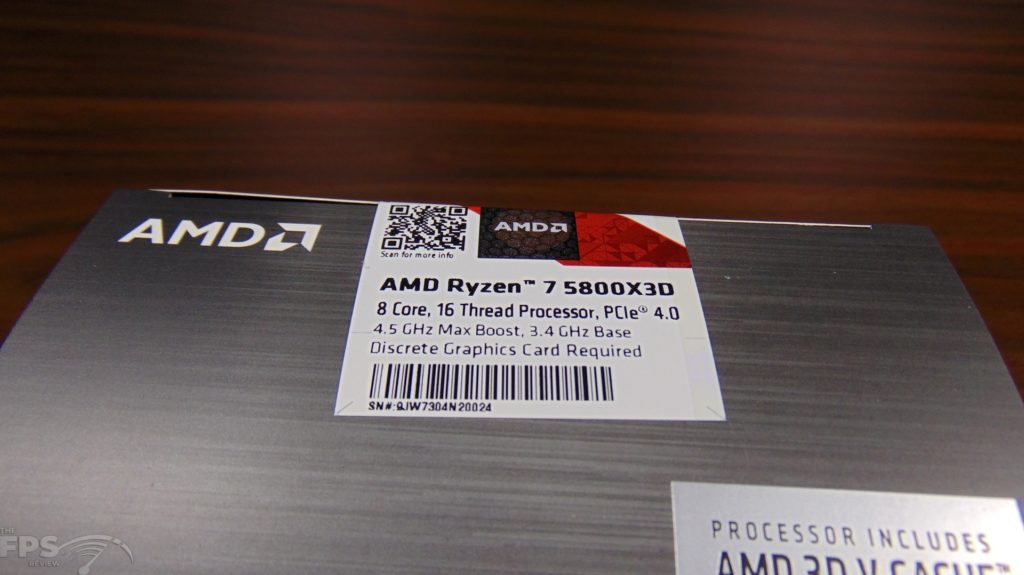

AMD is not taking a back seat when it comes to gaming performance. With the recent launch of its Alder Lake CPUs, Intel has proven it is back in the game, and AMD isn’t taking this lightly. AMD is innovating in unique ways to improve gaming performance on its CPUs and stay on top. One of those ways is increasing the L3 cache size, which, if implemented well, has the potential to improve gaming performance specifically, especially in scenarios where you are more CPU-limited. This is where the AMD Ryzen 7 5800X3D comes in. It is AMD’s $449 MSRP gaming processor, and it uses 3D-stacked L3 cache to reach a total of 96MB of L3 cache.

In a traditional CPU design, the L3 cache is integrated into the die in a fixed amount; you cannot increase it without literally producing a new CPU design, and doing so increases die size, transistor count, package size, and everything else. What if you could add more L3 cache by stacking it on top of the die? That is what AMD’s new 3D V-Cache Technology is all about: boosting a CPU’s L3 cache by a large amount without having to design a new CPU. AMD literally stacks the additional L3 cache atop the CPU die, using a unique copper-to-copper bonding process to make the connection. Latency is an obvious concern, but it is something AMD has worked hard on to make sure this technology actually delivers a benefit in gaming performance.

We need to be specific here: this additional L3 cache is meant to improve gaming performance, and that is its purpose. With that goal in mind, some workloads may not take advantage of the larger L3 cache, and because of other trade-offs made in designing the Ryzen 7 5800X3D, performance in those workloads could even be slower. You can think of 3D V-Cache as a way to extend the usefulness and capabilities of the already established Zen 3 Ryzen CPUs, or in this case just one: as of right now, the 5800X3D is the only CPU with 3D V-Cache.

AMD Ryzen 7 5800X3D Specs

| Specification | Ryzen 7 5800X3D | Ryzen 7 5800X |
|---|---|---|
| Architecture / Process Node | Zen 3 (Vermeer-X) / TSMC N7 | Zen 3 (Vermeer) / TSMC N7 |
| Cores / Threads | 8 / 16 | 8 / 16 |
| L3 Cache | 96MB | 32MB |
| Base Clock | 3.4GHz | 3.8GHz |
| Turbo Clock | 4.5GHz | 4.7GHz |
| TDP | 105W | 105W |
| MSRP | $449 | $449 |

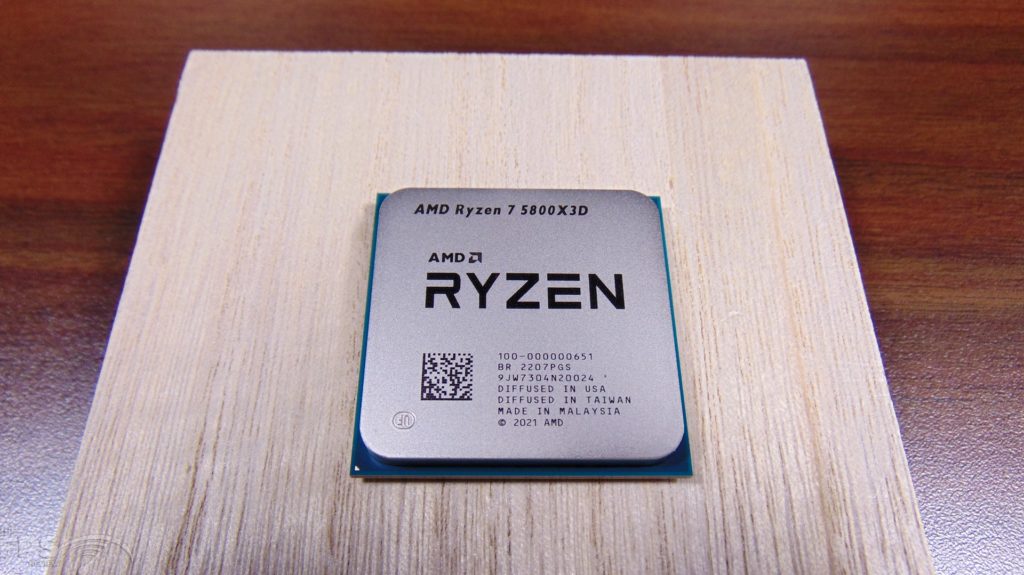

One of the most interesting and welcome pieces of information is the MSRP. The new Ryzen 7 5800X3D has an MSRP of $449, exactly the same as the Ryzen 7 5800X. That is impressive: AMD could have charged a premium, but it is asking the same price as the 5800X, so at least the new chip won’t cost you an arm and a leg more. That’s a good thing, seeing as the Ryzen 7 5800X3D is architecturally identical to the Ryzen 7 5800X. The two are alike in almost every way; only the L3 cache size and the clock frequencies (base and boost) separate them. Both CPUs are based on AMD’s Zen 3 architecture (Vermeer) and manufactured on TSMC N7.
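To check exactly which specs differ, the values from the comparison table can be diffed field by field. This is a minimal sketch: the dictionary keys and the `differing_specs` helper are hypothetical names chosen for illustration, and the codenames (Vermeer-X vs. Vermeer) are deliberately left out so only the measurable specs are compared.

```python
# Spec values copied from the comparison table (clocks in GHz, L3 in MB, TDP in W).
# Codenames (Vermeer-X vs. Vermeer) are deliberately left out of this sketch.
SPECS = {
    "Ryzen 7 5800X3D": {
        "architecture": "Zen 3",
        "process_node": "TSMC N7",
        "cores": 8,
        "threads": 16,
        "l3_cache_mb": 96,
        "base_clock_ghz": 3.4,
        "turbo_clock_ghz": 4.5,
        "tdp_w": 105,
        "msrp_usd": 449,
    },
    "Ryzen 7 5800X": {
        "architecture": "Zen 3",
        "process_node": "TSMC N7",
        "cores": 8,
        "threads": 16,
        "l3_cache_mb": 32,
        "base_clock_ghz": 3.8,
        "turbo_clock_ghz": 4.7,
        "tdp_w": 105,
        "msrp_usd": 449,
    },
}


def differing_specs(a: dict, b: dict) -> dict:
    """Return {field: (a_value, b_value)} for every field whose values differ."""
    return {field: (a[field], b[field]) for field in a if a[field] != b[field]}


diff = differing_specs(SPECS["Ryzen 7 5800X3D"], SPECS["Ryzen 7 5800X"])
for field, (x3d, x) in diff.items():
    print(f"{field}: 5800X3D={x3d}, 5800X={x}")
```

Run against the table values, it reports three differing fields: L3 cache, base clock, and turbo clock. Everything else, including TDP and MSRP, matches.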

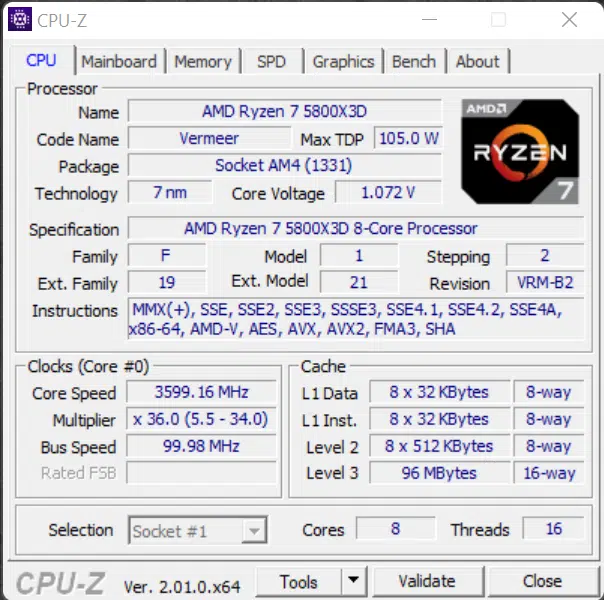

The Ryzen 7 5800X and 5800X3D are 8-core/16-thread CPUs for AMD’s Socket AM4. Both CPUs have 64KB of L1 cache and 512KB of L2 cache per core. The difference, of course, is the L3 cache: the Ryzen 7 5800X3D has 96MB, while the Ryzen 7 5800X has 32MB. That is three times as much, a 200% increase in cache size.
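The cache arithmetic above can be worked through directly from the quoted figures. This is a small sketch; the constant names are illustrative, and it assumes the extra L3 on the 5800X3D comes entirely from the stacked V-Cache die, with the base die keeping the same 32MB as the 5800X.

```python
# Per-core and total cache figures quoted in the article for both 8-core Zen 3 parts.
CORES = 8
L1_PER_CORE_KB = 64
L2_PER_CORE_KB = 512
L3_5800X_MB = 32
L3_5800X3D_MB = 96

# L1 and L2 are private per core, so totals scale with core count (identical on both CPUs).
l1_total_kb = CORES * L1_PER_CORE_KB
l2_total_mb = CORES * L2_PER_CORE_KB / 1024

# Assumption: the extra L3 comes entirely from the stacked V-Cache die,
# i.e. the base die keeps the same 32MB as the 5800X.
stacked_l3_mb = L3_5800X3D_MB - L3_5800X_MB

# Relative growth: 96MB is 3x 32MB, which is a 200% *increase* (not 300%).
l3_ratio = L3_5800X3D_MB / L3_5800X_MB
l3_pct_increase = (L3_5800X3D_MB - L3_5800X_MB) / L3_5800X_MB * 100

print(f"L1 total: {l1_total_kb} KB, L2 total: {l2_total_mb:.0f} MB")
print(f"Stacked L3: {stacked_l3_mb} MB, ratio: {l3_ratio:.0f}x, increase: {l3_pct_increase:.0f}%")
```

The distinction between the ratio (3x) and the percentage increase (200%) is the easy one to trip over: the increase is measured relative to the original 32MB.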

The other key difference is the Turbo Clock. AMD had to lower it on the new 5800X3D, along with the voltage: the Ryzen 7 5800X3D’s Turbo Clock runs at up to 4.5GHz at 1.35V, while the Ryzen 7 5800X’s Turbo Clock runs at up to 4.7GHz at 1.5V. That’s a 200MHz decrease on the 5800X3D, so it trades a lower Turbo Clock for much more L3 cache. The lower Turbo Clock affects both the maximum single-core and all-core frequencies.

With that clock speed decrease, AMD is able to keep the TDP of both CPUs the same at 105W. The Ryzen 7 5800X3D has another limitation: it is multiplier locked, and it does not support Precision Boost Overdrive (PBO) at all. It is meant to operate only at its stock clock speeds and cannot be overclocked by any means. The Ryzen 7 5800X does support PBO, which can raise its boost clock by up to 200MHz, so in theory the two CPUs could end up as much as 400MHz apart.
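The clock-gap figures above follow from simple addition on the rated peak boost clocks. This is a sketch under the assumption that both chips actually reach their rated peak boost; real boost behavior depends on cooling, workload, and power limits, so these are theoretical ceilings rather than measured clocks. The `peak_clock` helper is a hypothetical name for illustration.

```python
# Peak boost clocks from the spec table, in integer MHz to avoid float rounding.
TURBO_5800X3D_MHZ = 4500
TURBO_5800X_MHZ = 4700

# PBO headroom quoted for the 5800X; the 5800X3D does not support PBO, so it gets 0.
PBO_OFFSET_5800X_MHZ = 200


def peak_clock(turbo_mhz: int, pbo_offset_mhz: int = 0) -> int:
    """Theoretical peak boost clock: rated turbo plus any PBO boost override."""
    return turbo_mhz + pbo_offset_mhz


stock_gap = peak_clock(TURBO_5800X_MHZ) - peak_clock(TURBO_5800X3D_MHZ)
pbo_gap = peak_clock(TURBO_5800X_MHZ, PBO_OFFSET_5800X_MHZ) - peak_clock(TURBO_5800X3D_MHZ)

print(f"Stock gap: {stock_gap} MHz ({stock_gap / TURBO_5800X_MHZ:.1%} below the 5800X)")
print(f"Gap with PBO on the 5800X: {pbo_gap} MHz")
```

Because the 5800X3D has no PBO headroom of its own, any boost override on the 5800X widens the gap one-for-one.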

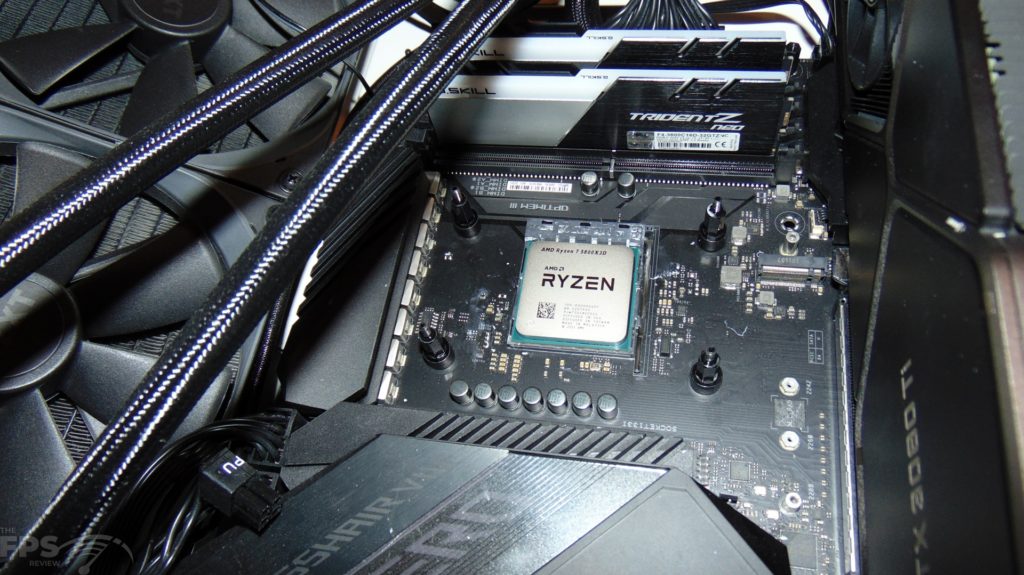

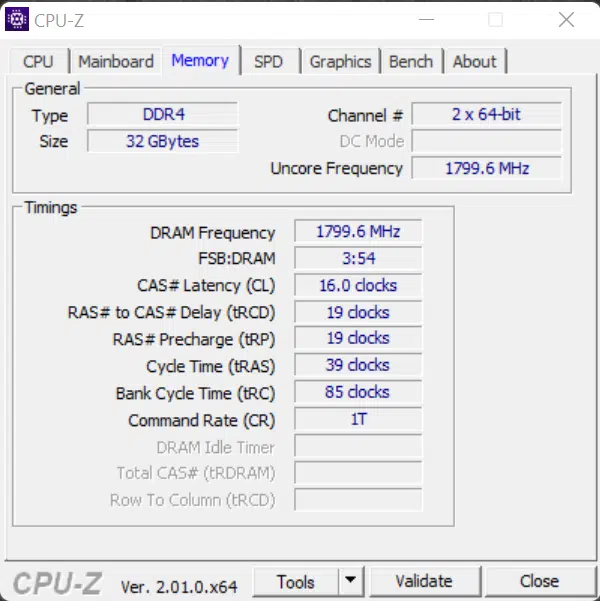

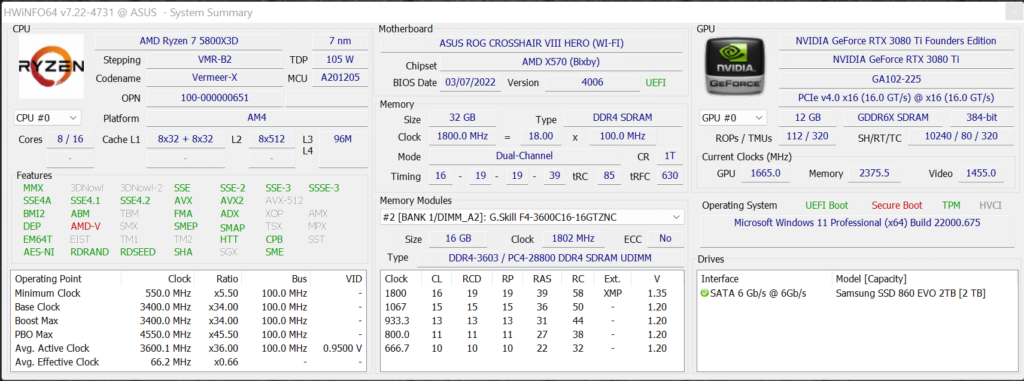

Installation went without a hitch. HWiNFO64 recognizes the CPU as Vermeer-X, stepping VMR-B2. Our ASUS ROG X570 Crosshair VIII Hero Wi-Fi motherboard has BIOS 4006 applied, which uses AMD AM4 AGESA V2 PI 1.2.0.6b and supports the AMD Ryzen 7 5800X3D. We are using the default motherboard settings with D.O.C.P. enabled.