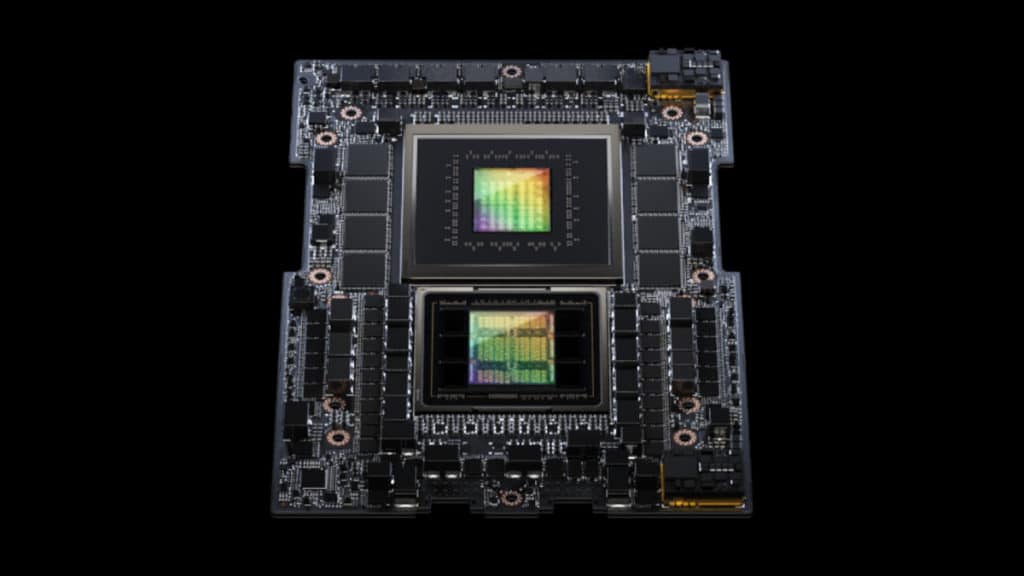

NVIDIA has announced that leading system manufacturers are expected to deliver systems based on its new GH200 Grace Hopper Superchip platform in Q2 of calendar year 2024. Unveiled during this morning’s SIGGRAPH 2023 event, this new platform, which is designed to further accelerate computing and generative AI, is powered by a new version of green team’s previously announced superchip that features the world’s first HBM3e processor. According to the press release from NVIDIA, this new memory technology offers 50% faster performance than HBM3, and users can find 282 GB of it as part of the new dual-configuration platform.

“To meet surging demand for generative AI, data centers require accelerated computing platforms with specialized needs,” said Jensen Huang, founder and CEO of NVIDIA. “The new GH200 Grace Hopper Superchip platform delivers this with exceptional memory technology and bandwidth to improve throughput, the ability to connect GPUs to aggregate performance without compromise, and a server design that can be easily deployed across the entire data center.”

Created to handle the world’s most complex generative AI workloads, spanning large language models, recommender systems and vector databases, the new platform will be available in a wide range of configurations.

The dual configuration — which delivers up to 3.5x more memory capacity and 3x more bandwidth than the current generation offering — comprises a single server with 144 Arm Neoverse cores, eight petaflops of AI performance and 282GB of the latest HBM3e memory technology.

The new platform uses the Grace Hopper Superchip, which can be connected with additional Superchips by NVIDIA NVLink, allowing them to work together to deploy the giant models used for generative AI. This high-speed, coherent technology gives the GPU full access to the CPU memory, providing a combined 1.2TB of fast memory when in dual configuration.

HBM3e memory, which is 50% faster than current HBM3, delivers a total of 10TB/sec of combined bandwidth, allowing the new platform to run models 3.5x larger than the previous version, while improving performance with 3x faster memory bandwidth.