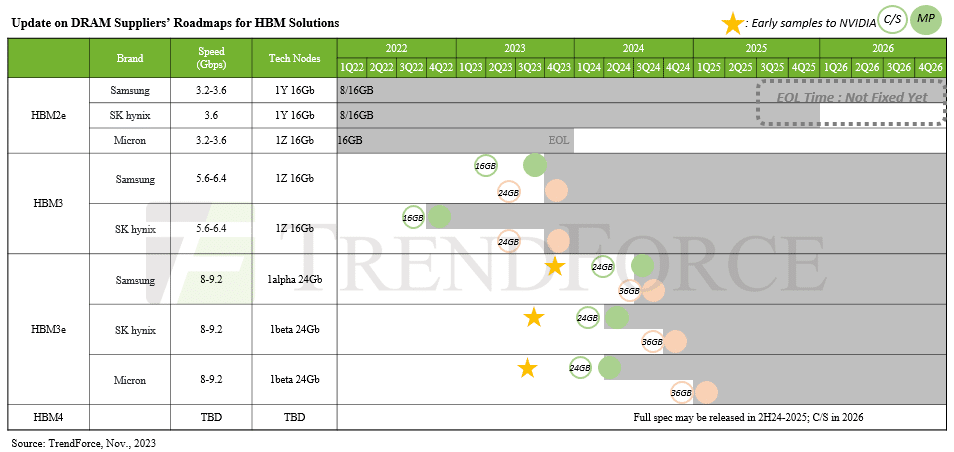

TrendForce has shared an updated supplier roadmap for HBM (High Bandwidth Memory), revealing that HBM4, the successor to HBM3e, is expected to launch in 2026.

According to TrendForce, HBM4 specifications won't be released until the second half of 2024 at the earliest, but the new memory is set to introduce some major architectural changes, as the firm explains:

> HBM4 will mark the first use of a 12nm process wafer for its bottommost logic die (base die), to be supplied by foundries. This advancement signifies a collaborative effort between foundries and memory suppliers for each HBM product, reflecting the evolving landscape of high-speed memory technology.
>
> With the push for higher computational performance, HBM4 is set to expand from the current 12-layer (12hi) to 16-layer (16hi) stacks, spurring demand for new hybrid bonding techniques. HBM4 12hi products are set for a 2026 launch, with 16hi models following in 2027.

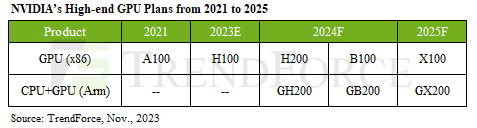

As for HBM3e, Samsung, SK hynix, and Micron have all already supplied early samples to NVIDIA for its upcoming data center products, which appear to include an “X100” GPU, among others:

> In 2024, NVIDIA plans to refine its product portfolio further. New additions will include the H200, using 6 HBM3e chips, and the B100, using 8 HBM3e chips. NVIDIA will also integrate its own Arm-based CPUs and GPUs to launch the GH200 and GB200, enhancing its lineup with more specialized and powerful AI solutions.

AMD, on the other hand, appears set to adopt HBM3e somewhat later:

> AMD’s 2024 focus is on the MI300 series with HBM3, transitioning to HBM3e for the next-gen MI350. The company is expected to start HBM verification for MI350 in 2H24, with a significant product ramp-up projected for 1Q25.

In October, Samsung announced “Shinebolt,” its HBM3e memory, a new type of DRAM that promises a significant speed boost for AI workloads:

> Samsung’s Shinebolt will power next-generation AI applications, improving total cost of ownership (TCO) and speeding up AI-model training and inference in the data center. The HBM3E boasts an impressive speed of 9.8 gigabits-per-second (Gbps) per pin speed, meaning it can achieve transfer rates exceeding up to more than 1.2 terabytes-per-second (TBps).
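
As a rough sanity check on Samsung's figures, the per-stack number follows from multiplying the per-pin data rate by the width of the memory interface. The sketch assumes a 1,024-bit interface per stack, the width used by JEDEC HBM generations; that width is not stated in the article, and the result is a theoretical peak that ignores protocol and refresh overhead. The function name `stack_bandwidth_gbytes` is hypothetical.

```python
# Theoretical peak bandwidth of one HBM stack, derived from the per-pin rate.
# Assumption (not from the article): a 1024-bit interface per stack.

BITS_PER_BYTE = 8


def stack_bandwidth_gbytes(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Return the peak bandwidth of one stack in GB/s (decimal units)."""
    # Every pin moves pin_rate_gbps gigabits per second; sum across all pins,
    # then convert bits to bytes.
    return pin_rate_gbps * bus_width_bits / BITS_PER_BYTE


if __name__ == "__main__":
    bw = stack_bandwidth_gbytes(9.8)  # Samsung's quoted per-pin speed
    print(f"{bw:.1f} GB/s (~{bw / 1000:.2f} TB/s)")  # prints "1254.4 GB/s (~1.25 TB/s)"
```

At 9.8 Gbps per pin this works out to roughly 1.25 TB/s per stack, consistent with Samsung's "more than 1.2 TBps" claim.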

In an August post, NVIDIA stated that HBM3e is 50% faster than HBM3, delivering a total of 10 TB/s of combined bandwidth in its dual-configuration GH200 Grace Hopper platform.