GIGABYTE RTX 3080 Ti EAGLE Overclocking

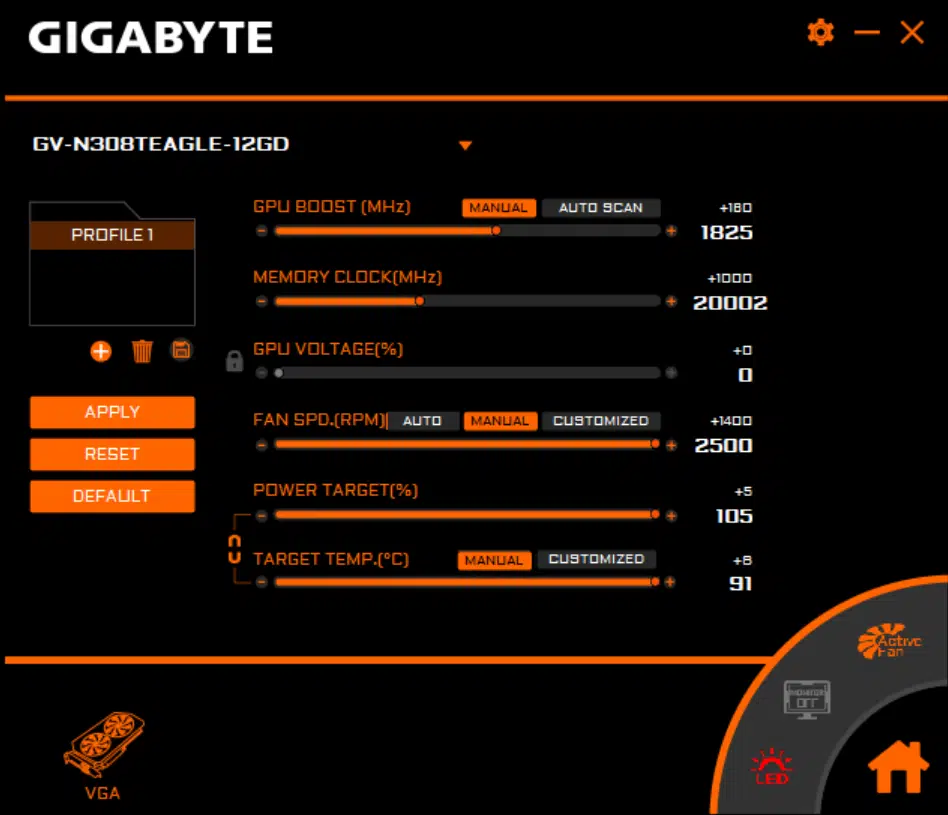

To overclock the GIGABYTE GeForce RTX 3080 Ti EAGLE 12G, we can use GIGABYTE’s AORUS ENGINE software, version 2.0.4. This newest version was released on 6/17 and supports the RTX 3080 Ti. It also bundles RGB Fusion 2.0 for controlling the RGB lighting, which is an optional install.

The GIGABYTE AORUS ENGINE software lets you control GPU BOOST, MEMORY CLOCK, GPU VOLTAGE, FAN SPEED, POWER TARGET, and TARGET TEMP. Yes, you can unlock the Voltage. However, here is the rub with this video card: the Power Target headroom is very small. As you can see, we can only raise the Power Target by 5%, from 100 to 105. For comparison, the Founders Edition’s Power Target can be raised by 14%, from 100 to 114, giving it more headroom than this video card.
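To put those percentages in watts, here is a quick sketch. It assumes a 350W base board power for both cards, which is not stated in this article; the real base value for each card may differ, but the ratio between the two headroom figures holds for any shared base.

```python
# ASSUMPTION: 350 W base board power for both cards (not stated in the article).
ASSUMED_BASE_POWER_W = 350.0


def power_limit(base_w: float, target_pct: int) -> float:
    """Board power limit for a given Power Target percentage (100 = stock)."""
    return base_w * target_pct / 100.0


# Maximum Power Target slider values reported in the article.
for name, max_target in (("EAGLE", 105), ("Founders Edition", 114)):
    limit = power_limit(ASSUMED_BASE_POWER_W, max_target)
    headroom = limit - ASSUMED_BASE_POWER_W
    print(f"{name}: {max_target}% -> {limit:.1f} W (+{headroom:.1f} W)")
```

Under that assumption, the EAGLE gains only about 17.5W of extra power budget, while the Founders Edition gains about 49W, roughly 2.8 times as much.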

Ultimately, this leaves the card TDP-limited, which means we hit the TDP wall much sooner when overclocking. We have already determined that GDDR6X memory causes a large power hit, so with even less headroom, we have to be careful about how far we push the memory. On the other hand, this video card should not throttle due to temperature. The low Power Target also means there is no way we can make use of a Voltage increase; that would instantly push the card over its TDP. It’s best to let GPU Boost control the Voltage.

The highest GPU Boost offset we could set without harming performance was +160, which brought the GPU Boost clock to 1825MHz. The highest memory offset we could set was +1000, which brought the memory up to 20GHz from the default 19GHz, a 1GHz overclock. This time the memory was held back not by temperature but by TDP. We think the memory has the potential to hit at least 21GHz if it were not held back by TDP. At 20GHz it provides 960GB/s of memory bandwidth vs. 912GB/s at the default 19GHz.
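The bandwidth figures follow from the effective per-pin data rate (the "GHz" figure above, really Gbps per pin) multiplied by the memory bus width and divided by 8 bits per byte. This sketch assumes the RTX 3080 Ti’s 384-bit memory bus, which is not listed in this section but reproduces both numbers quoted above.

```python
# RTX 3080 Ti memory bus width in bits (assumed from the card's published spec).
BUS_WIDTH_BITS = 384


def bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak memory bandwidth in GB/s: per-pin rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


stock = bandwidth_gbs(19.0)       # default memory data rate
overclocked = bandwidth_gbs(20.0)  # after the +1000 memory offset
gain = (overclocked - stock) / stock
print(f"{stock:.0f} GB/s -> {overclocked:.0f} GB/s (+{gain:.1%})")
```

The same formula shows what the hoped-for 21GHz would offer: 1008GB/s, if TDP allowed it.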

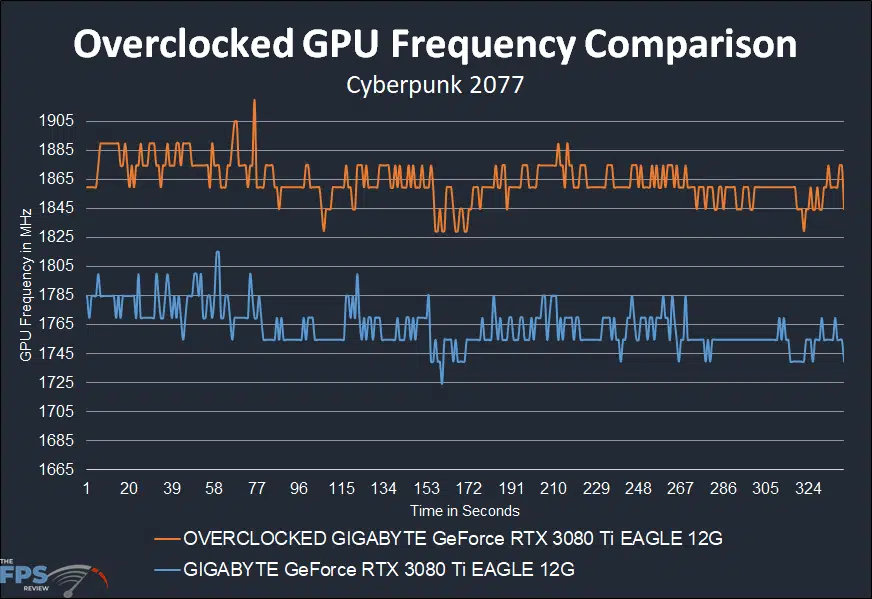

Above is our graph comparing the default clock speed of the GIGABYTE GeForce RTX 3080 Ti EAGLE 12G (Blue) to the same video card overclocked (Orange). With the boost set to 1825MHz, this video card hovers around 1845-1865MHz while gaming, and the average works out to 1864MHz. Since the default frequency average was 1764MHz, this is a 6% overclock over the default frequency. Therefore, our final overclock for today’s testing is 1864MHz/20GHz.
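The 6% figure is simply the relative gain of the overclocked in-game average over the default in-game average. A minimal sketch of that arithmetic, using only the two averages from the graph (the function name is our own, for illustration):

```python
def overclock_percent(default_mhz: float, overclocked_mhz: float) -> float:
    """Relative clock gain over the default, in percent."""
    return (overclocked_mhz - default_mhz) / default_mhz * 100


# Average in-game frequencies reported above.
gain = overclock_percent(1764, 1864)
print(f"{gain:.1f}%")
```

The exact result is about 5.7%, which rounds to the 6% quoted above.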

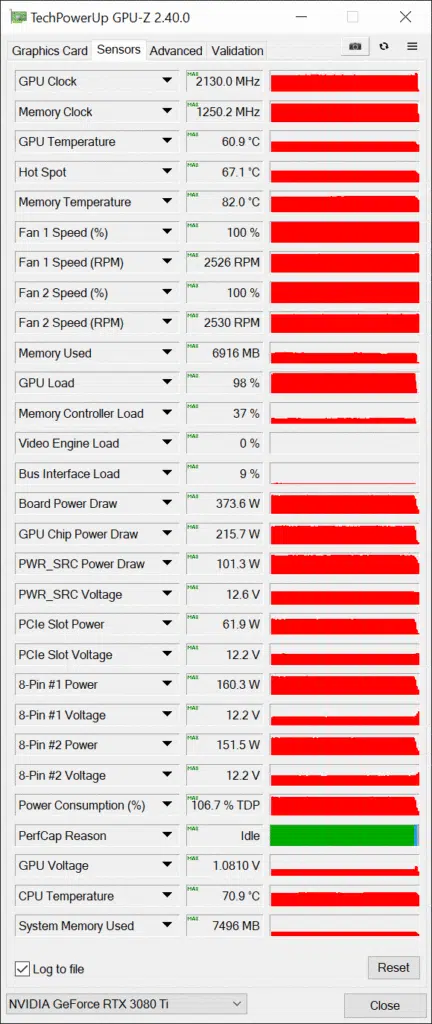

According to GPU-Z, the card achieved this overclock at 100% fan speed, running at 60.9°C with a 67.1°C hot spot. Memory temperature is very well managed at 82°C with this overclock, so temperature is not holding it back. GPU Voltage is at 1.0810V, boosted from the default Voltage, which shows GPU Boost is managing it. Power Consumption is above the TDP, so we are at the limits of this card in terms of power headroom.