Power and Temperature

To test power and temperature, we perform a manual run-through in Cyberpunk 2077 at “Ultra” settings to capture real-world in-game data, and we record the results using GPU-Z sensor data. For our wattage figures, we report GPU-Z’s “Board Power” and “GPU Chip Power” readings when available.

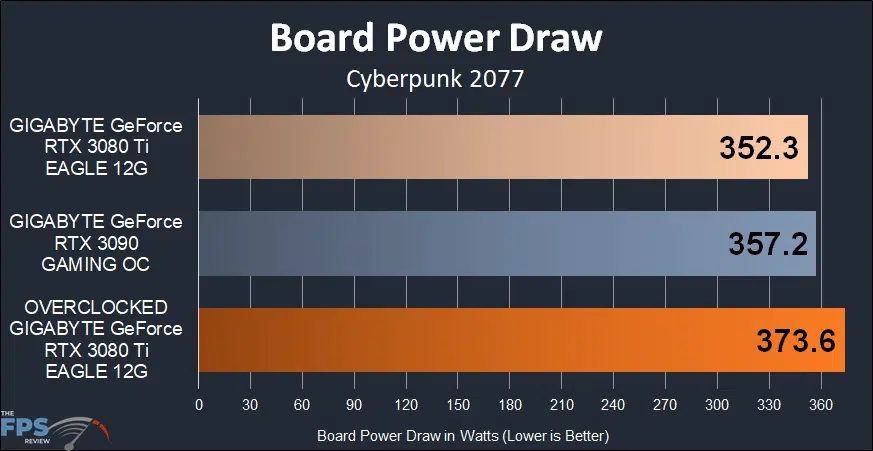

Looking at the board power draw reported by GPU-Z, it is no surprise that the overclocked GIGABYTE GeForce RTX 3080 Ti EAGLE 12G consumes the most power. At default settings, the GIGABYTE GeForce RTX 3080 Ti EAGLE 12G actually draws slightly less board power than the GIGABYTE GeForce RTX 3090 GAMING OC. This makes sense: it has less memory (12GB versus the RTX 3090’s 24GB), but higher GPU clock speeds.

When we overclock it, power demand rises by 6%. That is a perfect 1:1 match with the performance lift we saw in games, where the overclock delivered an average gain of 6%. Because performance scales in step with power, the overclock costs nothing in efficiency (performance per watt), so the extra power draw makes perfect sense. Just be aware that the card does draw this much power, so make sure your build has a good power supply that can support it.
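As a quick check of the efficiency point, here is a worked ratio using only the two 6% figures above. The symbols $P_0$ (average default performance) and $W_0$ (default board power) are placeholders introduced for illustration, not measured values:

$$
\frac{\text{perf/W}_{\text{OC}}}{\text{perf/W}_{\text{default}}}
= \frac{1.06\,P_0 \,/\, 1.06\,W_0}{P_0 \,/\, W_0}
= 1
$$

A ratio of 1 means performance per watt is unchanged by the overclock.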

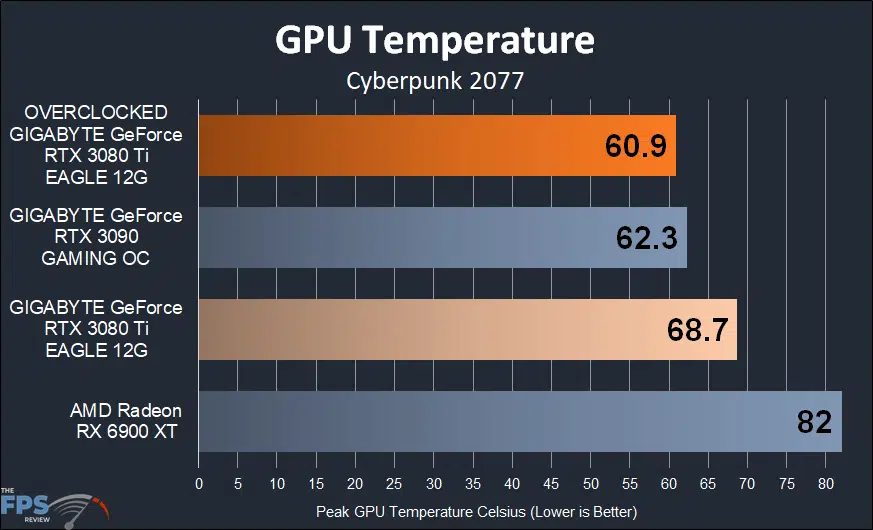

Temperatures were good on the GIGABYTE GeForce RTX 3080 Ti EAGLE 12G, relatively speaking. At 68.7°C it runs hotter than the GIGABYTE GeForce RTX 3090 GAMING OC, but remember that both cards have similar coolers and the GIGABYTE RTX 3080 Ti EAGLE 12G sustains a higher GPU clock frequency. Compared to the Founders Edition, the GIGABYTE RTX 3080 Ti EAGLE 12G runs cooler, at 68.7°C versus 74.2°C, roughly a 7% improvement.
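The 7% figure is the temperature gap taken relative to the Founders Edition’s reading:

$$
\frac{74.2 - 68.7}{74.2} = \frac{5.5}{74.2} \approx 0.074 \approx 7\%
$$

In absolute terms, that is a 5.5°C difference.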

One of the largest improvements was in memory temperatures. With the card overclocked, memory temperatures were in the low 80s, while the Founders Edition ran much warmer even at 100% fan speed. Whether at default settings or overclocked, memory simply runs cooler on the GIGABYTE GeForce RTX 3080 Ti EAGLE 12G.

We also want to give huge kudos to this video card for its low fan noise. It’s quiet. We didn’t even realize the fans were running as high as 78% at default settings. When we turned them up to 100%, they were among the quietest fans we’ve ever heard.