Gameplay Performance Continued

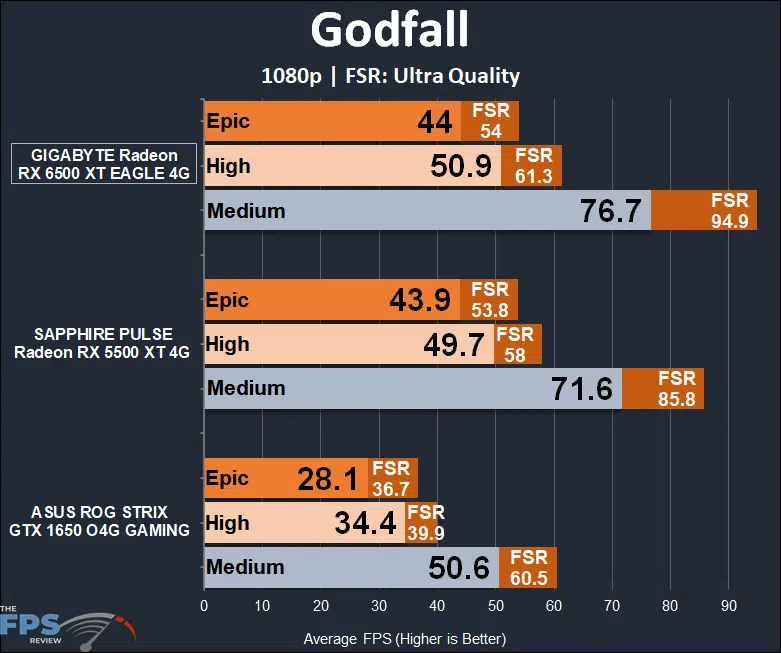

Godfall

Godfall is tested above using the game's built-in quality presets. We have also included results with AMD FidelityFX Super Resolution (FSR) enabled at each setting, to show how much FSR helps performance. Ray tracing is disabled.

Starting off at Epic quality, this game is not playable on any graphics card here at 1080p. It was especially choppy on the Radeon RX 6500 XT. Despite the average framerate, the benchmark started off in the teens of FPS, which was pretty bad, and enabling FSR did not resolve the issue. Moving down to High settings helped a lot and made the game more tolerable, but at High settings the RX 6500 XT ultimately performed no faster than the RX 5500 XT. Even with FSR enabled, the two cards performed about the same.

Only at the Medium setting did the Radeon RX 6500 XT pull ahead of the Radeon RX 5500 XT, by about 7%. Medium is definitely the setting to use in this game on the Radeon RX 6500 XT. The RX 6500 XT was also 42% faster than the GeForce GTX 1650. While FSR does help at High, we still experienced some stuttering on the Radeon RX 6500 XT until we moved down to Medium settings.

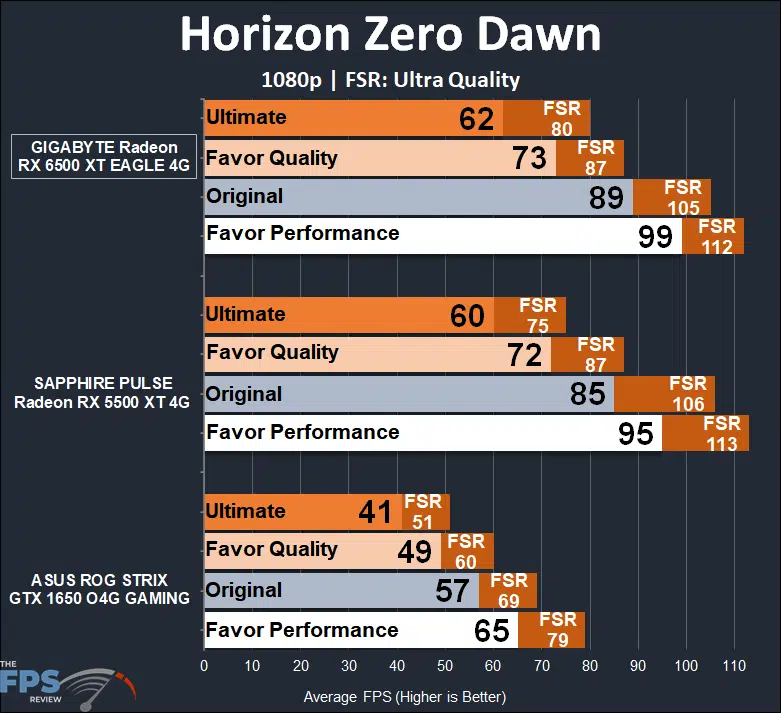

Horizon Zero Dawn

Horizon Zero Dawn now supports FSR as well, so we use it here to see how much it improves performance. At Ultimate Quality, 62FPS appears to be a good average, but there was still some hitching despite that number. Once we moved down to Favor Quality or Original, the game was super smooth. Overall, once again, the GIGABYTE Radeon RX 6500 XT EAGLE offers little performance improvement over the Radeon RX 5500 XT. It is ever so slightly faster, but only just, and FSR performance is exactly the same. It is much faster than the GeForce GTX 1650, so there are big performance gains there, but compared to last generation's RX 5500 XT, it has no advantage.

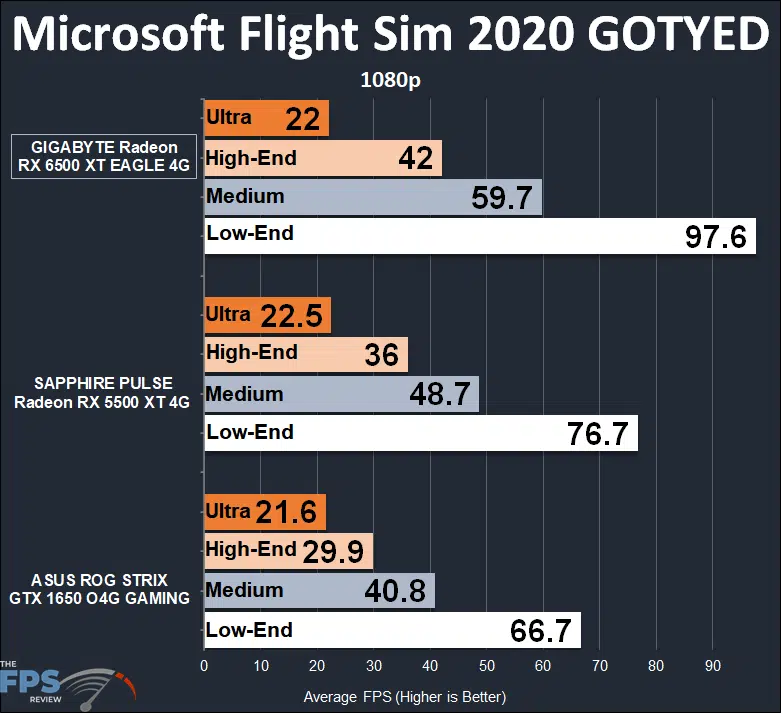

Microsoft Flight Simulator 2020 GOTY Edition

Lastly, we are using the newest Microsoft Flight Simulator 2020 Game of the Year Edition, with the game's new DX12 API mode enabled. At Ultra settings at 1080p, this game is severely bottlenecked on all of these video cards, and they are no different from one another in that respect. Only when we move down one step to the High-End quality setting do we see some differences. The GTX 1650 is not playable, but the RX 5500 XT, and even more so the RX 6500 XT, becomes playable. This game remains playable at lower framerates, and the new Radeon RX 6500 XT clearly has the advantage in it.

To get higher framerates, you'll want to move down to Medium settings. There, the new GIGABYTE Radeon RX 6500 XT EAGLE 4G averages nearly 60FPS, whereas the older Radeon RX 5500 XT manages only around 49FPS. Performance improves further at Low-End settings, where the Radeon RX 6500 XT delivers very high framerates in this game. However, the Low-End setting looks quite poor, so we recommend not going below Medium settings. At Medium settings, the newer Radeon RX 6500 XT is definitely going to provide a better experience.