Introduction

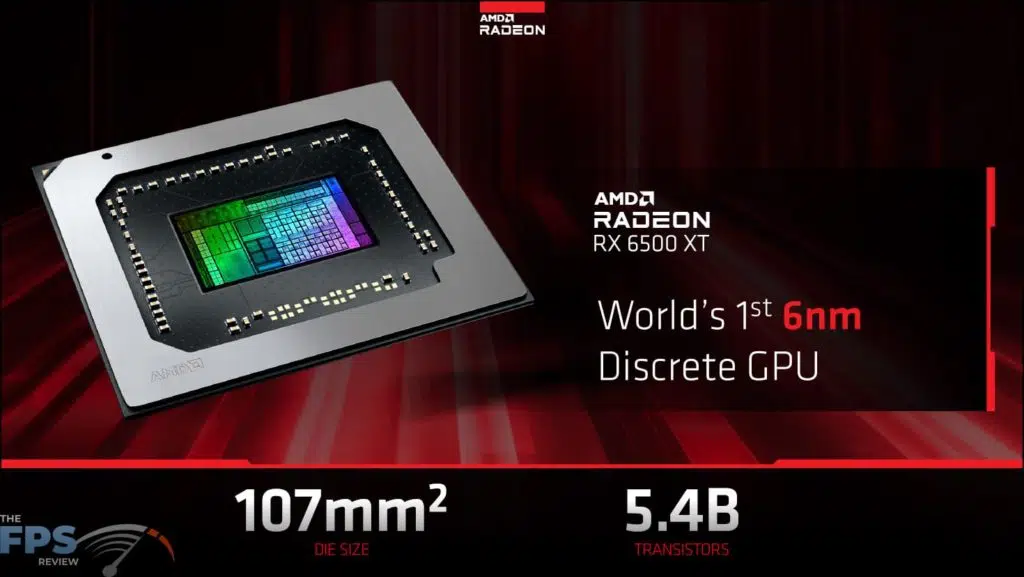

Today, AMD is launching its new Radeon RX 6500 XT GPU, a completely new ASIC built from the ground up on TSMC’s new N6 manufacturing node. This is the world’s first consumer 6nm GPU, and while that is surely impressive, what about the rest of the specs? Are they enough to justify the new MSRP, and the pricing realm we find ourselves in today, for what the 6500 XT offers?

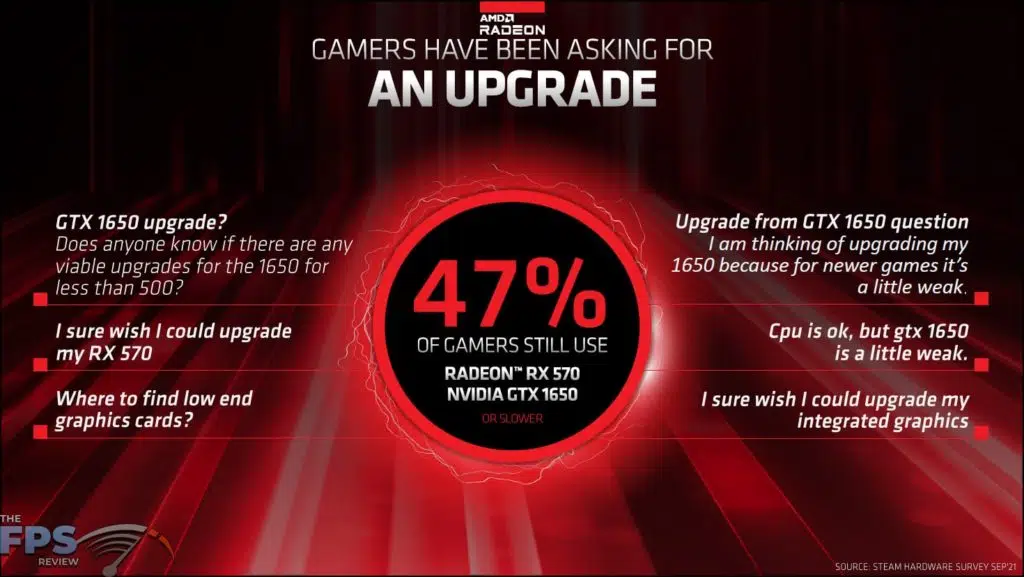

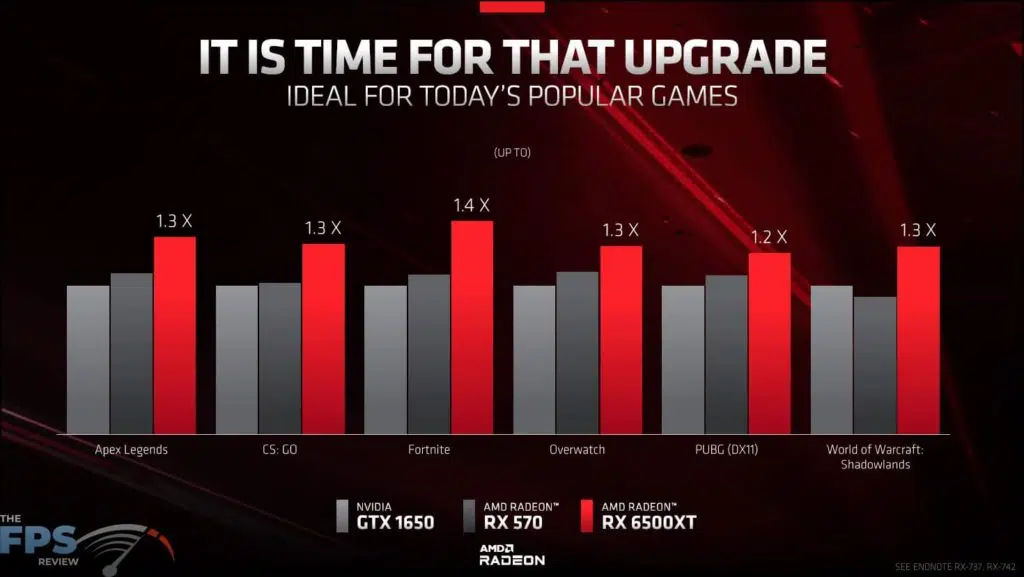

First and foremost, the AMD Radeon RX 6500 XT is AMD’s answer to the entry-level to mainstream video card market. Think of the Radeon RX 6500 XT as an entry-level video card if you are just getting into gaming, or want to upgrade from an older video card like the Radeon RX 570 or GeForce GTX 1650. You might even think of it as the most cost-effective way to move from integrated graphics to discrete video card performance.

AMD Radeon RX 6500 XT

First, the official MSRP: AMD has set the “SEP” of this video card at $199. However, what you are actually going to find is that cards from manufacturers and add-in-board (AIB) partners will be priced much higher than this, and that is before you consider the wackiness of retail and online pricing. We believe finding this card at $199 will be rare, as the base price set by AIBs is going to be higher from the get-go, including for the card we have for review today. You will only find this card as AIB models; this is an AIB-only card.

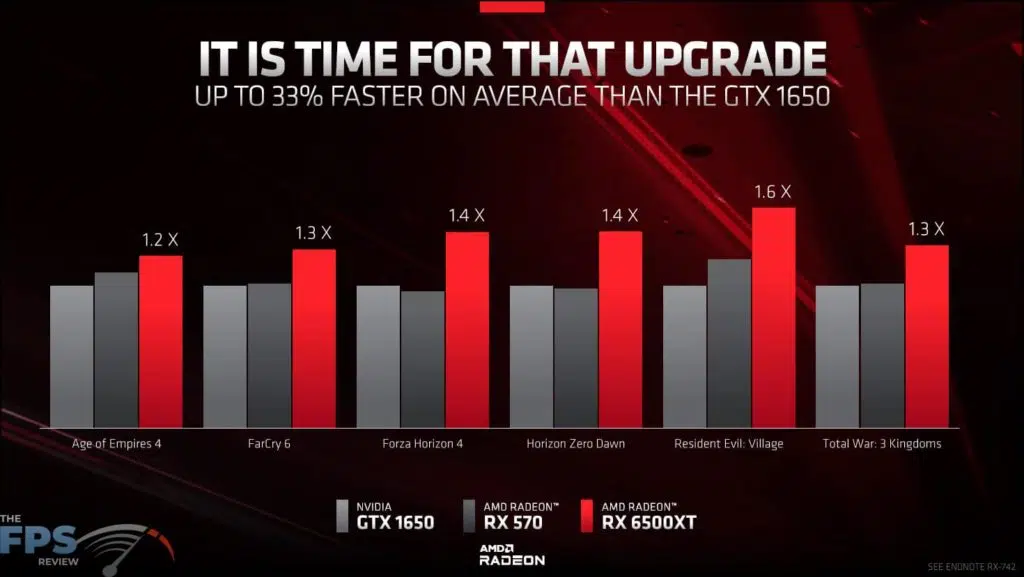

AMD is positioning this card specifically as an upgrade from a Radeon RX 570 or GeForce GTX 1650. If you look at the slide deck, you will find the performance comparisons are made against those video cards. While that is fine and all, we also feel it is important to compare this video card to the last generation’s Radeon RX 5500 XT to see what has transpired from generation to generation, as the Radeon RX 5500 XT is based on the RDNA1 architecture and the new Radeon RX 6500 XT is based on the new RDNA2 architecture.

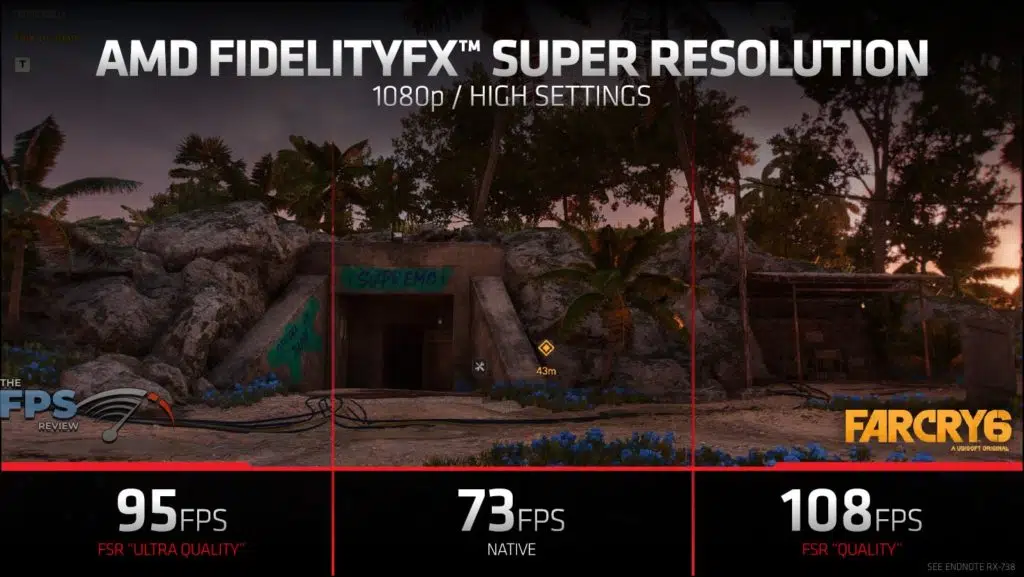

As this is an entry-level to mainstream video card, it is targeted at the 1080p gameplay experience. More specifically, it is best suited to playing current games at 1080p at Medium or High settings. This is not a video card for “Ultra”, “Extreme”, or “Maxed Out” settings at 1080p; rather, it’s a card for medium-level gameplay settings at 1080p in most cases.

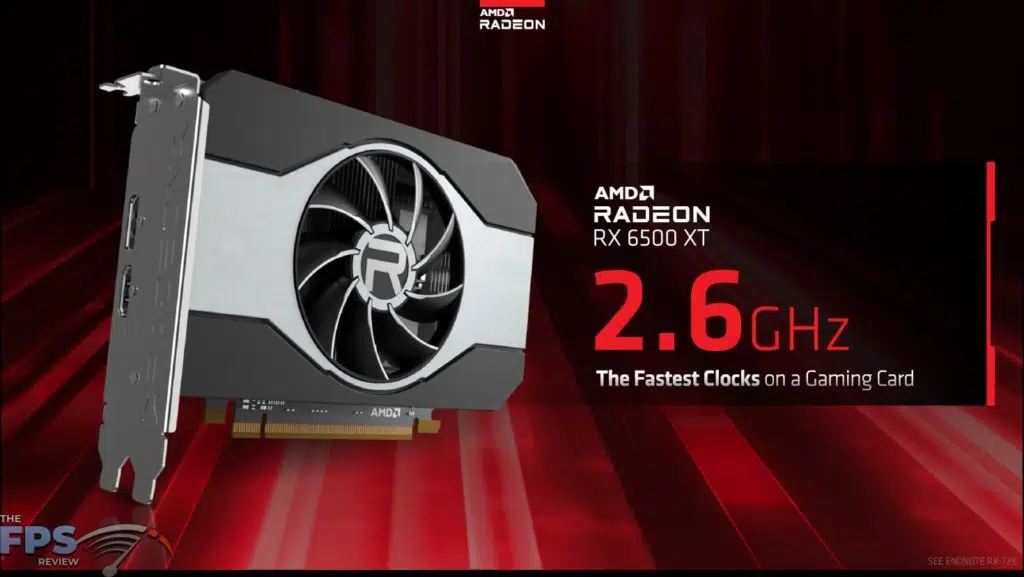

One of the unique features of the Radeon RX 6500 XT is that it is the first GPU out based on TSMC’s N6 (6nm) process. The GPU is 107mm² with 5.4 billion transistors. This has allowed AMD to hit a very high frequency, the fastest, they claim, with the game clock on this GPU set at 2.6GHz. That’s fast! You might even imagine hitting 3GHz on overclocks, which would be pretty incredible.

Hardware Specs

| Specification | AMD Radeon RX 6500 XT | AMD Radeon RX 5500 XT |
|---|---|---|
| Manufacturing Node | TSMC N6 | TSMC N7 |
| Architecture | RDNA2 | RDNA |
| Compute Units | 16 | 22 |
| Ray Accelerators | 16 | N/A |
| Stream Processors | 1024 | 1408 |
| Infinity Cache | 16MB | N/A |
| ROPs | 32 | 32 |
| Game Clock/Boost Clock | 2610MHz/2815MHz | 1717MHz/1845MHz |
| Memory | 4GB GDDR6 | 4GB or 8GB GDDR6 |
| Bus Width | 64-bit | 128-bit |
| Memory Frequency | 18GHz | 14GHz |
| Memory Bandwidth | 144GB/s | 224GB/s |
| PCIe Interface | PCIe 4.0 x4 | PCIe 4.0 x8 |
| Media Encoder (VCE) | No | Yes |
| TDP | 107W/120W | 130W |
| MSRP | $199 | $169 (4GB) / $199 (8GB) |

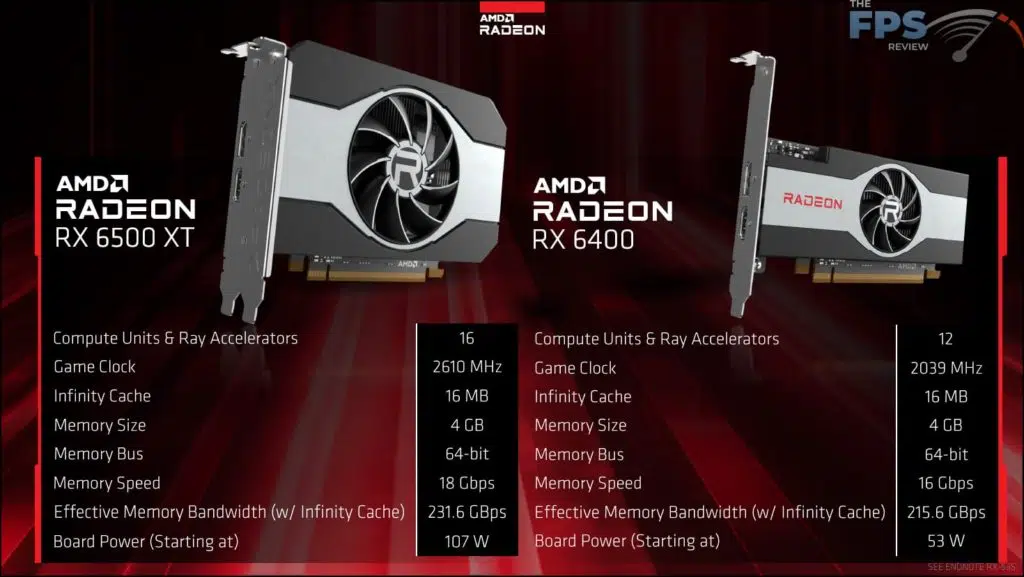

As we mentioned above, the Radeon RX 6500 XT is based on the new RDNA2 architecture and therefore does feature Infinity Cache. There is in fact 16MB of Infinity Cache on board, and boy does it need it with some of the constraints this video card has been given. First and foremost, you’ll notice that the memory bus width is only 64-bit, yes, a 64-bit memory bus with this GDDR6 memory.

That’s a very narrow bus, but remember the Infinity Cache is supposed to make up for it. To also help make up for the narrow memory bus, AMD is running the fastest GDDR6 available at 18GHz. That provides 144GB/s of raw memory bandwidth; the 231.6GB/s figure AMD quotes is an effective number that factors in the Infinity Cache. It’s not a lot, but again the Infinity Cache is supposed to make up for that. In addition, it has a small framebuffer, only 4GB on the Radeon RX 6500 XT, with no 8GB option like the Radeon RX 5500 XT had.
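For anyone who wants to check the raw bandwidth numbers in the spec table, here is a minimal sketch of the arithmetic. It assumes the “Memory Frequency” row is the effective per-pin data rate in Gbps, and the function name `memory_bandwidth_gbs` is ours, purely for illustration. It covers raw bandwidth only and does not model the Infinity Cache.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak raw memory bandwidth in GB/s.

    Assumes data_rate_gbps is the effective per-pin data rate, so the
    total is (pins * Gbps per pin) bits per second, divided by 8 for bytes.
    """
    return bus_width_bits * data_rate_gbps / 8


# Figures taken from the spec table above.
rx_6500_xt = memory_bandwidth_gbs(bus_width_bits=64, data_rate_gbps=18)
rx_5500_xt = memory_bandwidth_gbs(bus_width_bits=128, data_rate_gbps=14)

print(f"RX 6500 XT: {rx_6500_xt:.0f} GB/s")  # 144 GB/s
print(f"RX 5500 XT: {rx_5500_xt:.0f} GB/s")  # 224 GB/s
```

Even with faster memory, halving the bus width leaves the Radeon RX 6500 XT with roughly 64% of the Radeon RX 5500 XT’s raw bandwidth, which is the gap the Infinity Cache is meant to cover.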

As for the other specifications, the GPU has 16 Compute Units and thus 16 Ray Accelerators for Ray Tracing. It has 1024 Stream Processors, 32 ROPs, and runs at a 2610MHz game clock and 2815MHz boost clock. It has an official TDP, or board power, of 107W, but there also seems to be a secondary 120W option that some add-in-board partners may exploit. This would mean potentially higher clock speeds on those parts, perhaps for factory overclocked video cards.
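To put the Stream Processor and clock figures in perspective, here is a rough sketch of theoretical peak FP32 throughput for both cards. It relies on the common convention of two floating-point operations per Stream Processor per clock (one fused multiply-add), which is our assumption and not something stated in the slide deck. The helper name `peak_fp32_tflops` is hypothetical, and these are paper numbers, not measured performance.

```python
def peak_fp32_tflops(stream_processors: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each Stream Processor retires one fused multiply-add per
    clock, counted as two floating-point operations (an assumption).
    """
    flops_per_clock = 2
    return stream_processors * flops_per_clock * clock_mhz * 1e6 / 1e12


# Stream Processor counts and boost clocks from the spec table.
rx_6500_xt = peak_fp32_tflops(stream_processors=1024, clock_mhz=2815)
rx_5500_xt = peak_fp32_tflops(stream_processors=1408, clock_mhz=1845)

print(f"RX 6500 XT: {rx_6500_xt:.2f} TFLOPS")
print(f"RX 5500 XT: {rx_5500_xt:.2f} TFLOPS")
```

On paper, the much higher clock speed more than offsets the drop from 1408 to 1024 Stream Processors, though that says nothing about how the narrower memory bus affects real games.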

As this is based on the RDNA2 architecture, it also supports Ray Tracing. However, with only 16 Ray Accelerators, the Ray Tracing capability is more likely there to experiment with than to rely on. In terms of practical performance, games are probably not going to be playable with Ray Tracing enabled unless you really lower the in-game graphics settings.

The Constraints

There are a few other constraints to consider on this video card that you should know about. The Radeon RX 6500 XT is built with a PCIe 4.0 x4 signaling interface to save cost. That means it operates over a PCI-Express 4.0 x4 connection, which is close to 8GB/s (a little less, actually). Since this video card is based on x4 signaling, and not x8 like the previous Radeon RX 5500 XT, on any PCI-Express 3.0 platform it will still only operate at x4 signaling, which cuts the bandwidth in half, taking it down to less than 4GB/s.

It’s a major constraint that will affect users on PCI-Express 3.0 platforms, and there are many of them. You won’t get full bandwidth out of this card unless you are on a PCI-Express 4.0 or 5.0 system, which cuts out a lot of users on older systems, exactly the kind of person who might want a video card upgrade. Again, the Radeon RX 5500 XT was on a PCIe 4.0 x8 interface, so on PCIe Gen3 it still operates at x8 lanes, double that of the 6500 XT. We’ll talk more about this later.
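Here is a small sketch of where those PCIe numbers come from. The per-lane transfer rates (8 GT/s for Gen3, 16 GT/s for Gen4) and the 128b/130b line encoding come from the PCI-Express specifications, not from AMD’s materials, and the function name `pcie_bandwidth_gbs` is ours. It gives theoretical one-direction link bandwidth and ignores protocol overhead beyond line encoding.

```python
# Per-lane raw transfer rate in GT/s for each PCIe generation.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}

# PCIe 3.0 and 4.0 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in GB/s."""
    usable_gbit_per_lane = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY
    return usable_gbit_per_lane * lanes / 8


for label, gen, lanes in [
    ("RX 6500 XT on PCIe 4.0 (x4)", 4, 4),
    ("RX 6500 XT on PCIe 3.0 (x4)", 3, 4),
    ("RX 5500 XT on PCIe 3.0 (x8)", 3, 8),
]:
    print(f"{label}: {pcie_bandwidth_gbs(gen, lanes):.2f} GB/s")
```

This works out to about 7.88GB/s for the Radeon RX 6500 XT on a Gen4 platform and about 3.94GB/s on Gen3, while the Radeon RX 5500 XT’s x8 link keeps about 7.88GB/s even on Gen3.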

As if all that wasn’t enough, there is one more important piece of information. The Radeon RX 6500 XT has ditched the AMD VCE encoding engine to save cost. While it can decode H.264, H.265, and VP9, it cannot decode AV1, nor can it encode anything in hardware. That’s right, no encoding engine.

There simply is no option to encode using the VCE; it will resort to the CPU alone for encoding, with no GPU assist at all. For any content creators who aren’t gamers and are looking for a cheap video card to offload video encoding, the Radeon RX 6500 XT will not have that functionality for you. The weird thing is, the Radeon RX 5500 XT, last gen’s card, had it. Seems like a move backwards to us.

Our Goals In Testing

While there are certainly a lot of constraints here, our goal today is to show you how the video card scales up and down through in-game settings at 1080p. To do this, we are going to play several games at 1080p and test from each game’s Ultra or Ultimate setting down to the Medium or Low setting, showing all the levels in between. This way you can find out what level of gameplay it might provide for you and what kind of performance you can expect. Are you aiming for 60fps at 1080p? Our gameplay performance testing today should show you where that lies with this card.