NVIDIA has officially launched its next-generation Blackwell platform, and with it comes a set of new and elaborate hardware for fueling the next stage in the AI craze, including some that the company says are powerful enough to enable “trillion-parameter-scale AI models.” These include the GB200 NVL72, a new exascale computer that can deliver up to 1,440 PFLOPS of FP4 and 3,240 TFLOPS of FP64 Tensor Core performance, thanks in part to its 72 Blackwell GPUs. These new GPUs are built on TSMC’s 4NP process and feature 208 billion transistors.

Blackwell announcements include:

- GB200 Grace Blackwell Superchip

- B200 and B100 Tensor Core GPUs

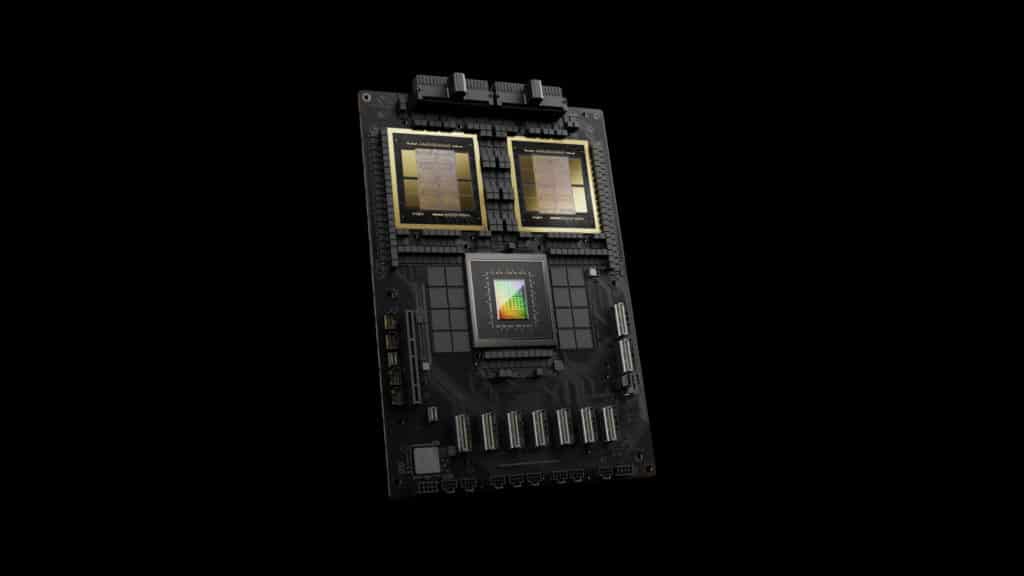

- HGX B200 and HGX B100 boards

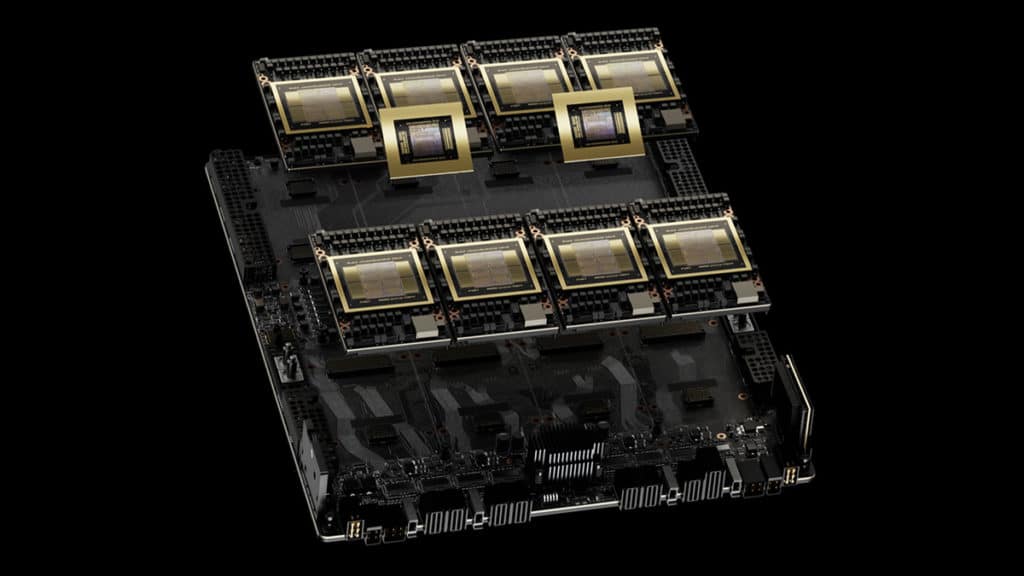

- GB200 NVL72 rack-scale system

- Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms

New renders of Blackwell-powered hardware:

NVIDIA on the six technologies that make up the Blackwell platform:

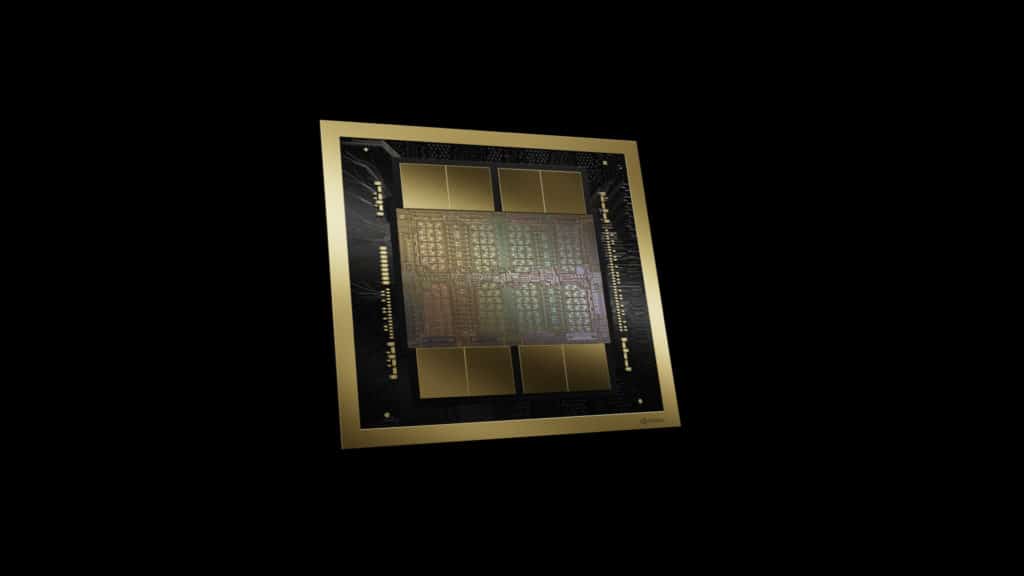

- World’s Most Powerful Chip: Packed with 208 billion transistors, Blackwell-architecture GPUs are manufactured using a custom-built 4NP TSMC process, with two reticle-limit GPU dies connected by a 10 TB/second chip-to-chip link into a single, unified GPU.

- Second-Generation Transformer Engine: Fueled by new micro-tensor scaling support and NVIDIA’s advanced dynamic range management algorithms integrated into the NVIDIA TensorRT-LLM and NeMo Megatron frameworks, Blackwell will support double the compute and model sizes with new 4-bit floating point (FP4) AI inference capabilities.

- Fifth-Generation NVLink: To accelerate performance for multitrillion-parameter and mixture-of-experts AI models, the latest iteration of NVIDIA NVLink delivers a groundbreaking 1.8TB/s of bidirectional throughput per GPU, ensuring seamless high-speed communication among up to 576 GPUs for the most complex LLMs.

- RAS Engine: Blackwell-powered GPUs include a dedicated engine for reliability, availability and serviceability. Additionally, the Blackwell architecture adds chip-level capabilities that use AI-based preventative maintenance to run diagnostics and forecast reliability issues. This maximizes system uptime and improves resiliency, allowing massive-scale AI deployments to run uninterrupted for weeks or even months at a time while reducing operating costs.

- Secure AI: Advanced confidential computing capabilities protect AI models and customer data without compromising performance, with support for new native interface encryption protocols, which are critical for privacy-sensitive industries like healthcare and financial services.

- Decompression Engine: A dedicated decompression engine supports the latest formats, accelerating database queries to deliver the highest performance in data analytics and data science. In the coming years, data processing, on which companies spend tens of billions of dollars annually, will be increasingly GPU-accelerated.
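The announcement does not describe how micro-tensor scaling works internally. Purely as an illustration, the sketch below assumes a generic block-scaled scheme in the spirit of the OCP Microscaling (MX) formats: each block of values shares one scale factor, and every scaled value is rounded to the nearest number a 4-bit E2M1 float can represent (0, 0.5, 1, 1.5, 2, 3, 4, 6 and their negatives). The block size, the plain floating-point scale, the tie-breaking rule, and the function names `quantize_blocks`/`dequantize_blocks` are all assumptions for illustration, not NVIDIA's implementation.

```python
import math

# Magnitudes a 4-bit E2M1 float can represent (1 sign, 2 exponent, 1 mantissa bit).
E2M1_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = E2M1_VALUES[-1]


def quantize_e2m1(x: float) -> float:
    """Round x to the nearest E2M1 value; magnitudes above 6 are clamped.

    Ties go to the smaller magnitude (min() keeps the first match), a
    simplification rather than any specific hardware rounding mode.
    """
    mag = min(abs(x), E2M1_MAX)
    nearest = min(E2M1_VALUES, key=lambda v: abs(v - mag))
    return math.copysign(nearest, x)


def quantize_blocks(values, block_size=32):
    """Hypothetical micro-tensor scaling: one shared scale per block of values.

    Returns (scale, codes) pairs; codes are kept as floats for readability,
    whereas real hardware would pack each one into a 4-bit field.
    """
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        # Map the block's largest magnitude onto the largest E2M1 value.
        scale = amax / E2M1_MAX if amax > 0 else 1.0
        codes = [quantize_e2m1(v / scale) for v in block]
        blocks.append((scale, codes))
    return blocks


def dequantize_blocks(blocks):
    """Approximate the original values by multiplying codes by their block scale."""
    return [scale * code for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    weights = [0.02, -0.75, 1.3, 0.001, 12.0, -3.1, 0.4, 5.5]
    blocks = quantize_blocks(weights, block_size=4)
    print(dequantize_blocks(blocks))
```

Because each weight needs only 4 bits plus a share of its block's scale, the same memory holds roughly twice as many parameters as an 8-bit format, which is one way to read the "double the compute and model sizes" claim. The per-block scale is what keeps small blocks (like the first one above) from being flattened to zero by large values elsewhere in the tensor.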

HGX B200/B100 specs:

| | HGX B200 | HGX B100 |
|---|---|---|
| GPUs | HGX B200 8-GPU | HGX B100 8-GPU |
| Form factor | 8x NVIDIA B200 SXM | 8x NVIDIA B100 SXM |
| HPC and AI compute (FP64/TF32/FP16/FP8/FP4) | 40TF/18PF/36PF/72PF/144PF | 30TF/14PF/28PF/56PF/112PF |
| Memory | Up to 1.4TB | Up to 1.4TB |
| NVIDIA NVLink | Fifth generation | Fifth generation |
| NVIDIA NVSwitch | Fourth generation | Fourth generation |
| NVSwitch GPU-to-GPU bandwidth | 1.8TB/s | 1.8TB/s |
| Total aggregate bandwidth | 14.4TB/s | 14.4TB/s |
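Two patterns in the HGX table can be checked with simple arithmetic: the total aggregate bandwidth equals the per-GPU NVSwitch bandwidth times the eight GPUs on each board, and each step down the TF32 → FP16 → FP8 → FP4 chain doubles the quoted Tensor Core throughput. The sketch below uses only numbers from the table; the dictionary layout and helper names are illustrative, and the per-GPU figure is simply the board figure divided by eight.

```python
# Figures copied from the HGX B200/B100 spec table above.
GPUS_PER_BOARD = 8
NVSWITCH_TBPS_PER_GPU = 1.8  # NVSwitch GPU-to-GPU bandwidth, TB/s

# Tensor Core throughput in PFLOPS, ordered from widest to narrowest format.
HGX_PFLOPS = {
    "HGX B200": {"TF32": 18, "FP16": 36, "FP8": 72, "FP4": 144},
    "HGX B100": {"TF32": 14, "FP16": 28, "FP8": 56, "FP4": 112},
}


def aggregate_bandwidth_tbps(gpus: int, per_gpu_tbps: float) -> float:
    """Total NVSwitch bandwidth of a board: per-GPU bandwidth times GPU count."""
    return gpus * per_gpu_tbps


def step_ratios(pflops: dict) -> list:
    """Ratio between each format and the next-wider one (dicts keep insertion order)."""
    values = list(pflops.values())
    return [narrow / wide for wide, narrow in zip(values, values[1:])]


def per_gpu_pflops(board_pflops: float, gpus: int = GPUS_PER_BOARD) -> float:
    """Implied per-GPU throughput when a board figure is split evenly across its GPUs."""
    return board_pflops / gpus


if __name__ == "__main__":
    print(round(aggregate_bandwidth_tbps(GPUS_PER_BOARD, NVSWITCH_TBPS_PER_GPU), 1))  # 14.4
    for board, pflops in HGX_PFLOPS.items():
        print(board, step_ratios(pflops), per_gpu_pflops(pflops["FP4"]))
```

FP64 is left out of the chain because it does not follow the doubling pattern (40 TFLOPS versus 18 PFLOPS of TF32 on HGX B200).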

GB200 Series specs:

| | GB200 NVL72 | GB200 Grace Blackwell Superchip |
|---|---|---|
| Configuration | 36 Grace CPUs : 72 Blackwell GPUs | 1 Grace CPU : 2 Blackwell GPUs |
| FP4 Tensor Core | 1,440 PFLOPS | 40 PFLOPS |
| FP8/FP6 Tensor Core | 720 PFLOPS | 20 PFLOPS |
| INT8 Tensor Core | 720 POPS | 20 POPS |
| FP16/BF16 Tensor Core | 360 PFLOPS | 10 PFLOPS |
| TF32 Tensor Core | 180 PFLOPS | 5 PFLOPS |
| FP64 Tensor Core | 3,240 TFLOPS | 90 TFLOPS |
| GPU Memory / Bandwidth | Up to 13.5 TB HBM3e / 576 TB/s | Up to 384 GB HBM3e / 16 TB/s |
| NVLink Bandwidth | 130TB/s | 3.6TB/s |
| CPU Core Count | 2,592 Arm Neoverse V2 cores | 72 Arm Neoverse V2 cores |
| CPU Memory / Bandwidth | Up to 17 TB LPDDR5X / Up to 18.4 TB/s | Up to 480GB LPDDR5X / Up to 512 GB/s |
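Since the NVL72 is built from 36 GB200 Superchips, the throughput, GPU memory bandwidth, and CPU core-count rows for the rack are exactly 36 times the per-Superchip values, and the 130TB/s NVLink figure lines up with 72 GPUs × 1.8TB/s. The sketch below checks this using table values only, then estimates how much of the rack's 13.5 TB of HBM3e the weights of a one-trillion-parameter model would occupy at different precisions. The weight-only estimate is a simplifying assumption (it ignores activations, KV cache, and quantization scale factors), and all names are illustrative.

```python
# Per-Superchip and rack-level figures copied from the GB200 spec table above.
SUPERCHIPS_PER_RACK = 36
GPUS_PER_SUPERCHIP = 2
NVLINK_TBPS_PER_GPU = 1.8  # fifth-generation NVLink, bidirectional, TB/s

SUPERCHIP = {"FP4 PFLOPS": 40, "FP8 PFLOPS": 20, "FP16 PFLOPS": 10,
             "TF32 PFLOPS": 5, "FP64 TFLOPS": 90, "HBM TB/s": 16, "CPU cores": 72}
NVL72 = {"FP4 PFLOPS": 1440, "FP8 PFLOPS": 720, "FP16 PFLOPS": 360,
         "TF32 PFLOPS": 180, "FP64 TFLOPS": 3240, "HBM TB/s": 576, "CPU cores": 2592}
NVL72_HBM_TB = 13.5  # "Up to 13.5 TB HBM3e"


def rack_matches_superchips(rack: dict, chip: dict, n: int = SUPERCHIPS_PER_RACK) -> bool:
    """True if every rack-level row equals n times the per-Superchip row."""
    return all(rack[key] == n * chip[key] for key in chip)


def weight_footprint_tb(params: float, bits_per_param: int) -> float:
    """Weight storage only, in decimal TB; ignores activations, KV cache and scales."""
    return params * bits_per_param / 8 / 1e12


if __name__ == "__main__":
    print(rack_matches_superchips(NVL72, SUPERCHIP))
    gpus = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
    print(gpus, round(gpus * NVLINK_TBPS_PER_GPU, 1))  # 72 129.6 (quoted as 130TB/s)
    for bits in (16, 8, 4):
        tb = weight_footprint_tb(1e12, bits)
        print(f"1T params at {bits}-bit: {tb:.1f} TB of {NVL72_HBM_TB} TB HBM3e")
```

Under these assumptions, a trillion parameters take about 2 TB at 16-bit and 0.5 TB at 4-bit, so the raw weights are a small fraction of the rack's HBM3e; in practice, inference memory is also consumed by the KV cache and activations that this estimate leaves out.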

NVIDIA on the GB200 NVL72:

It combines 36 Grace Blackwell Superchips, which include 72 Blackwell GPUs and 36 Grace CPUs interconnected by fifth-generation NVLink. Additionally, GB200 NVL72 includes NVIDIA BlueField-3 data processing units to enable cloud network acceleration, composable storage, zero-trust security and GPU compute elasticity in hyperscale AI clouds. The GB200 NVL72 provides up to a 30x performance increase compared to the same number of NVIDIA H100 Tensor Core GPUs for LLM inference workloads, and reduces cost and energy consumption by up to 25x.

Jensen Huang, founder and CEO of NVIDIA, said:

For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI. Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.