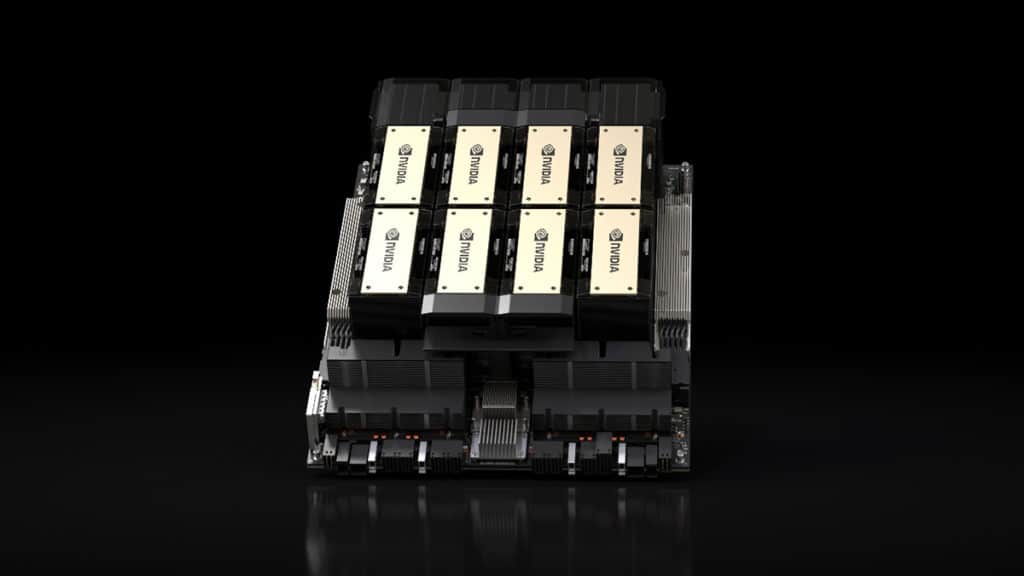

The B100 and B200, NVIDIA’s upcoming high-end AI processors based on the next-generation “Blackwell” architecture, will feature support for 192 GB and 288 GB of HBM3e memory, respectively, according to specifications shared by the @XpeaGPU account ahead of NVIDIA’s GTC 2024 event. Jensen Huang, Founder and CEO of NVIDIA, is expected to unveil all of the details about his company’s new accelerators during his opening keynote, which will premiere later today.

Memory comparison:

- H100: 80 GB (HBM3)

- H200: 96 GB (HBM3), 144 GB (HBM3e)

- B100: 192 GB (HBM3e)

- B200: 288 GB (HBM3e)

The word from @XpeaGPU:

I dont want to spoil Nvidia B100 launch tomorrow but this thing is a monster. 2 dies on CoWoS-L, 8×8-Hi HBM3e stacks for 192GB of memory. One year later, B200 goes with 12-Hi stacks and will offer a beefy 288GB. And the performance! it's… oh no Jensen is there… me run away! pic.twitter.com/YFXzrnSRmG

— AGF (@XpeaGPU) March 17, 2024
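The capacity figures in the tweet can be sanity-checked with simple arithmetic. The sketch below is only an illustration: it assumes all eight stacks are populated with DRAM dies of equal capacity, and that the B200 keeps the same eight-stack layout and die capacity while moving to 12-Hi stacks. Neither assumption is confirmed by the source. The function names are hypothetical.

```python
def per_die_gb(capacity_gb, stacks, dies_per_stack):
    """Capacity of one DRAM die implied by a total capacity and a stack layout."""
    return capacity_gb / (stacks * dies_per_stack)


def package_capacity_gb(stacks, dies_per_stack, die_gb):
    """Total HBM capacity for a given stack layout and per-die capacity."""
    return stacks * dies_per_stack * die_gb


# B100 as described in the tweet: 8 stacks, 8-Hi, 192 GB in total.
b100_die_gb = per_die_gb(192, stacks=8, dies_per_stack=8)

# Assumption: B200 reuses the same die capacity and stack count, but 12-Hi.
b200_total_gb = package_capacity_gb(stacks=8, dies_per_stack=12, die_gb=b100_die_gb)

print(f"Implied capacity per die: {b100_die_gb} GB")
print(f"Projected B200 capacity:  {b200_total_gb} GB")
```

Under these assumptions the implied die capacity is 3 GB, and the 12-Hi configuration works out to the 288 GB quoted for the B200.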

Keynote info:

- Monday, March 18

- 1 p.m. Pacific

- SAP Center in San Jose, Calif.

Reuters on potential pricing of the B100:

Demand for Nvidia’s current AI chips has outstripped supply, with software developers waiting months for a chance to use AI-optimized computers at cloud providers. Nvidia is unlikely to give specific pricing, but the B100 is likely to cost more than its predecessor, which sells for upwards of $20,000.