Introduction

The wait is finally over. After what has seemed like ages, AMD has released a series of new GPUs aimed at the high-end gaming enthusiast level of performance: the performance needed to provide an enjoyable 4K gaming experience, something AMD has never offered before.

For years, NVIDIA has dominated this performance segment. AMD instead chose to ignore this level of performance and focus on the mainstream segment. This left a void in competition at the very high end, and NVIDIA simply had the fastest video card and the best 4K experience, with the GeForce RTX 2080 Ti reigning since 2018. AMD offered no competition whatsoever, and no 4K gaming video card. Is this truly AMD’s comeback? Let’s find out.

History

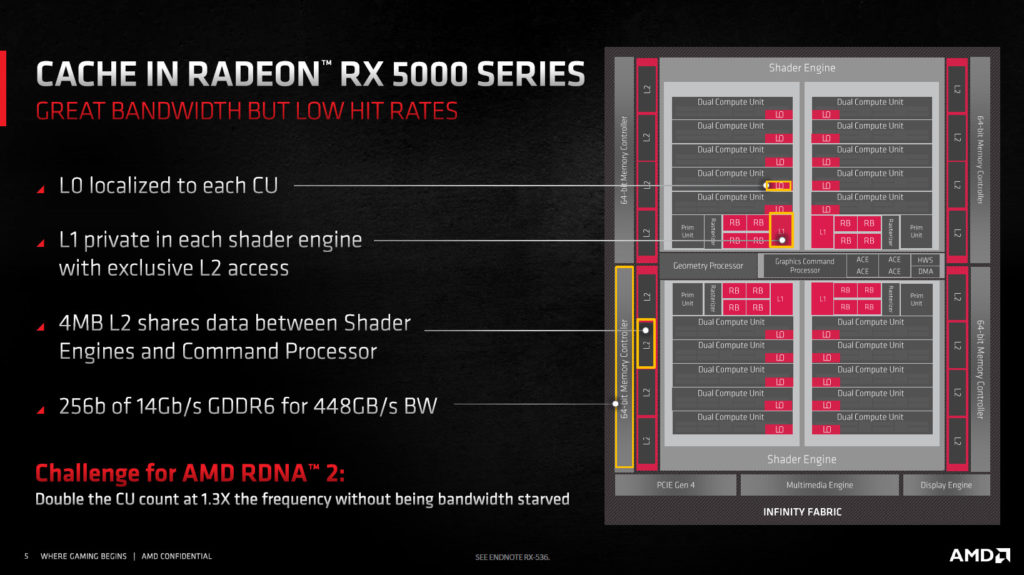

Let’s do a little history before we dive into the new Radeon RX 6000 series video cards based on RDNA2. First, let’s travel back to AMD’s most recent video card launch, the AMD Radeon RX 5000 series in July of 2019. The Radeon RX 5000 series was based on AMD’s first-generation RDNA architecture. One thing you should know about RDNA1, however, is that it shared some leftovers from the previous GCN 5.0 Vega architecture. It was more of a hybrid architecture, moving away from Vega but not yet delivering truly next-generation features and performance. It was a good attempt, and a big efficiency upgrade over Vega, but not as big an upgrade as the RDNA2 architecture on which today’s Radeon RX 6000 series video cards are based.

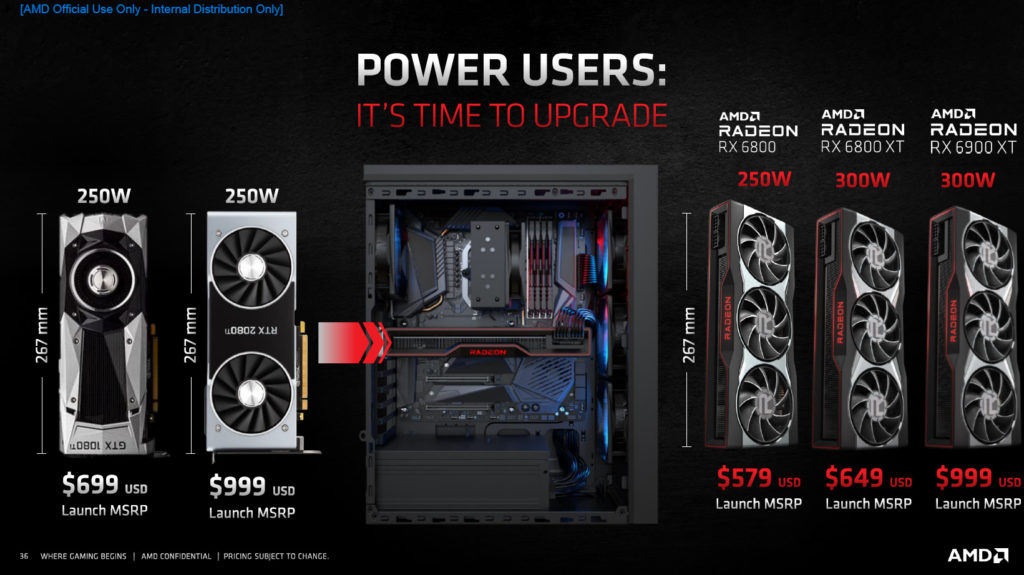

The Radeon RX 5700 XT, the most expensive video card of the bunch, debuted at $399. This was as fast and as expensive as it got for AMD with RDNA1 on the PC in that launch. At this price, it competed with the NVIDIA GeForce RTX 2060 SUPER. AMD did not offer a 4K performance video card, or anything to rival the GeForce RTX 2080, RTX 2080 SUPER, and RTX 2080 Ti. Those video cards simply outperformed the Radeon RX 5700 XT, as they were meant to provide the fastest performance available, and the GeForce RTX 2080 Ti offered the best 4K experience.

If we travel back even further in 2019, to the launch prior to the Radeon RX 5000 series, we come to a unique launch: the Radeon VII in February of 2019. This video card was unique in many ways, as it was AMD’s first 7nm GPU for consumers. This was a big, bold step forward. It also had 16GB of HBM2 memory, another bold move. However, these innovations could not make up for the fact that the GPU was based on the older GCN 5.0 Vega architecture, which is two generations old at this point. This really held back gaming performance, and despite the 16GB of HBM2 memory and the $699 price tag, it was not the card for 4K either.

In fact, this video card was based on a workstation-class GPU from AMD, and it was clear that the DNA of the Radeon VII was tied to that platform. Though the Radeon VII launched at $699, the same price as the GeForce RTX 2080 and RTX 2080 SUPER, it could not provide an enjoyable 4K experience. It was nowhere near the performance of the RTX 2080 Ti, which had launched before it in September of 2018. It just could not compete on performance. It offered a decent 1440p experience, and that was all. In the end, the video card was short-lived, with AMD discontinuing it only five months after launch.

From a price perspective, though, the Radeon VII was the last GPU AMD launched at the higher $699 price point. Now the new Radeon RX 6800 XT takes over that position, at a slightly lower price in fact.

Radeon RX 6000 Series Announcement

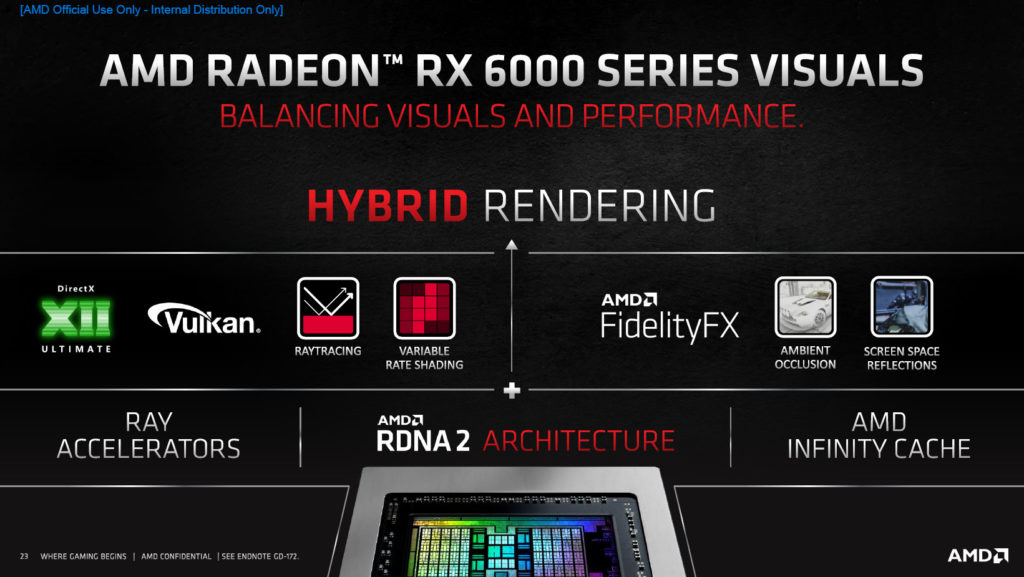

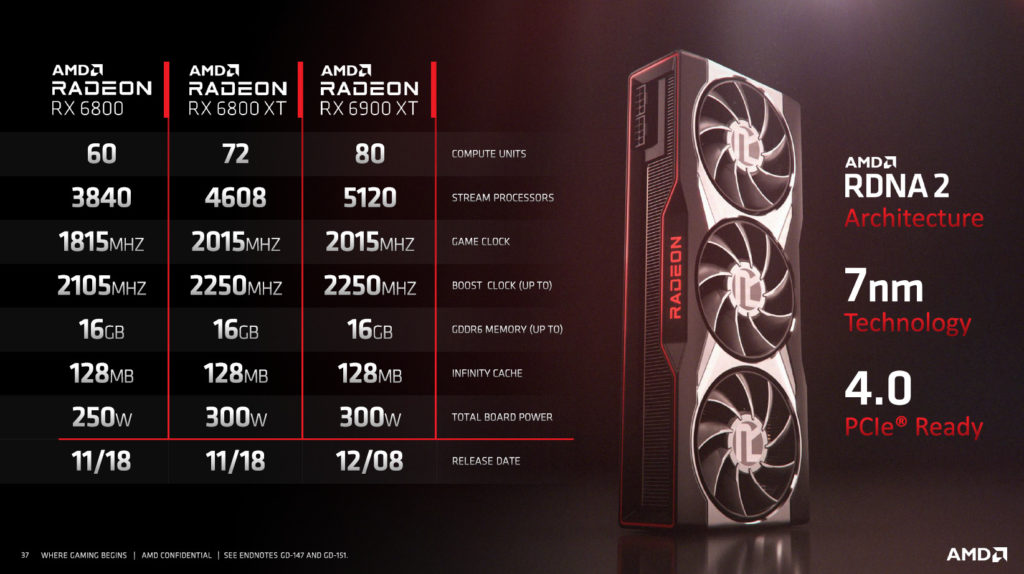

That brings us to today, November 18th, 2020. What may have been a bad year for pretty much everyone may end up being a good year for AMD. Launching today are the Radeon RX 6800 XT and Radeon RX 6800 video cards. These new video cards are based on the totally new RDNA2 architecture; gone are the legacy components of GCN and Vega. RDNA2 brings new hardware features such as Ray Tracing and Infinity Cache, along with DirectX 12 Ultimate support.

Finally, AMD is aiming high again, placing these video cards in a higher performance segment. The Radeon RX 6800 XT will retail for $649, and the Radeon RX 6800 will retail for $579.

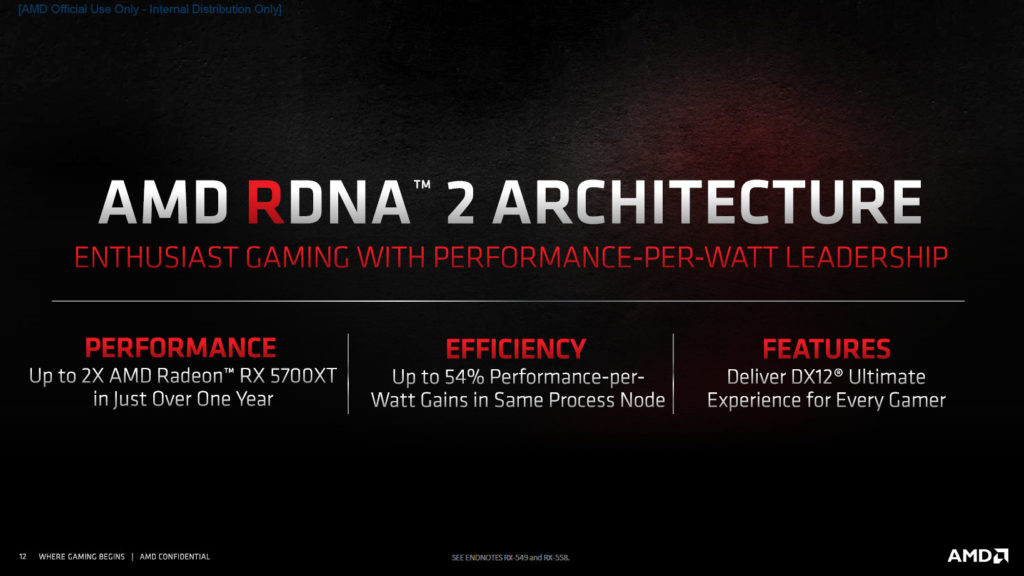

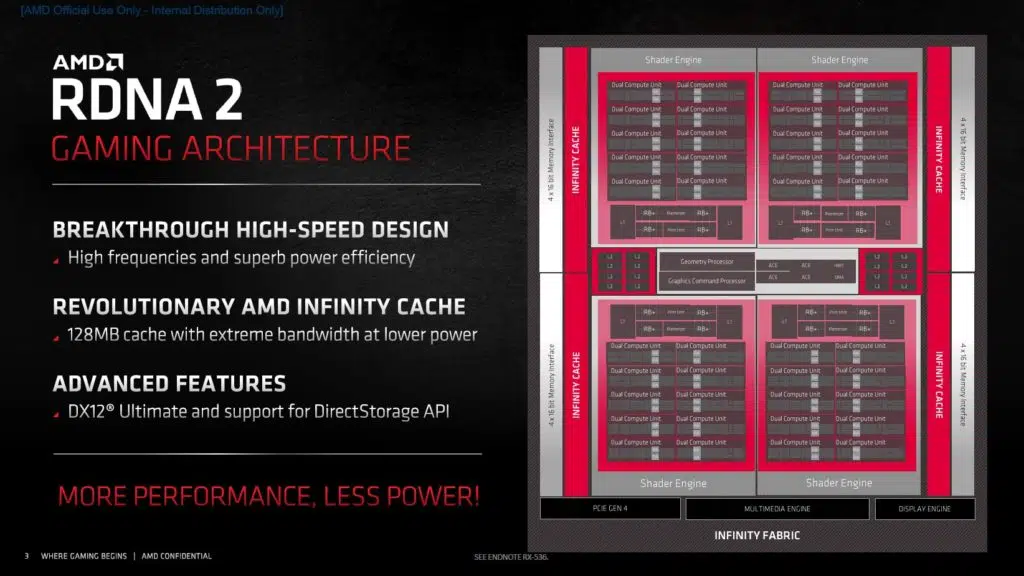

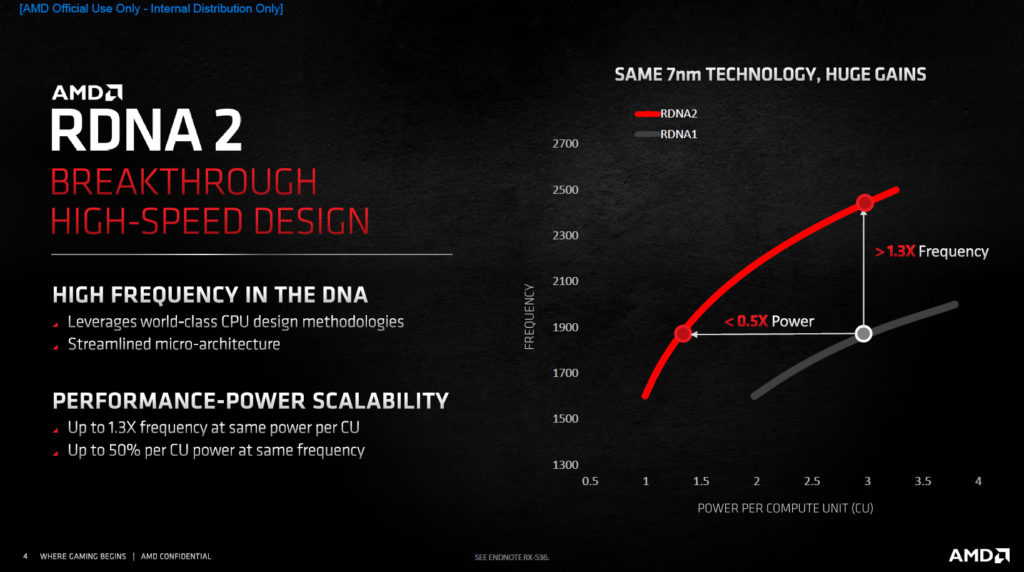

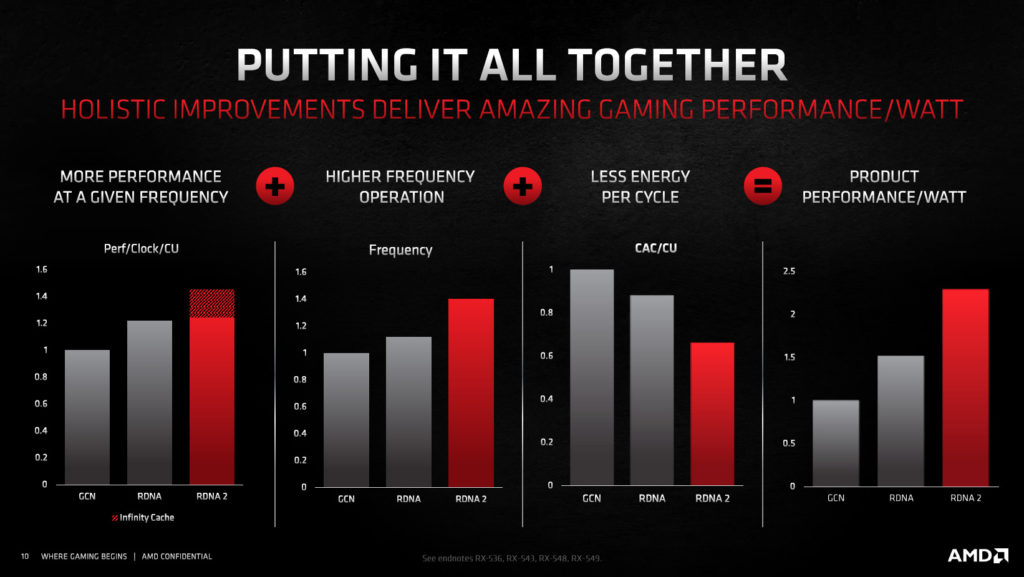

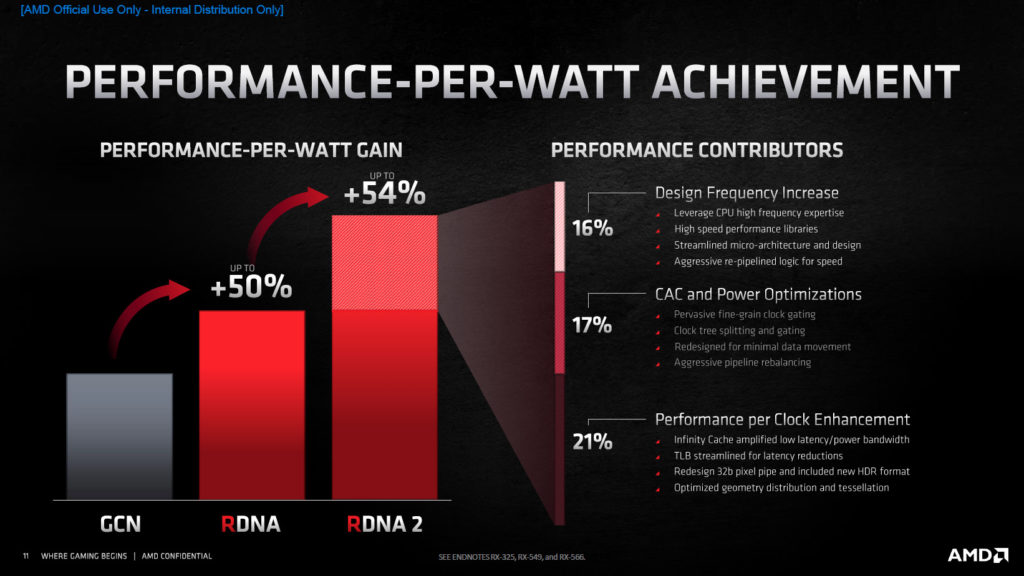

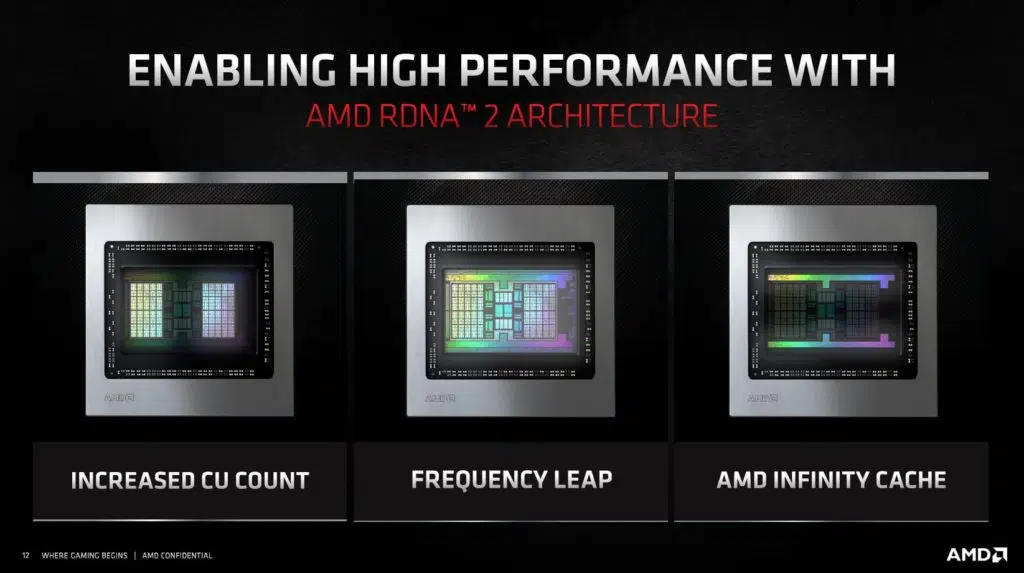

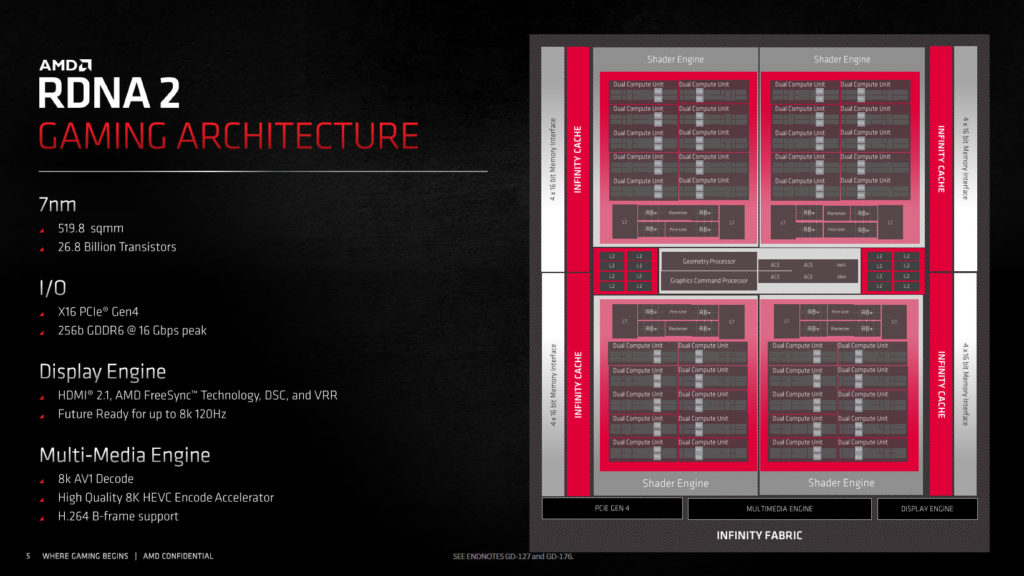

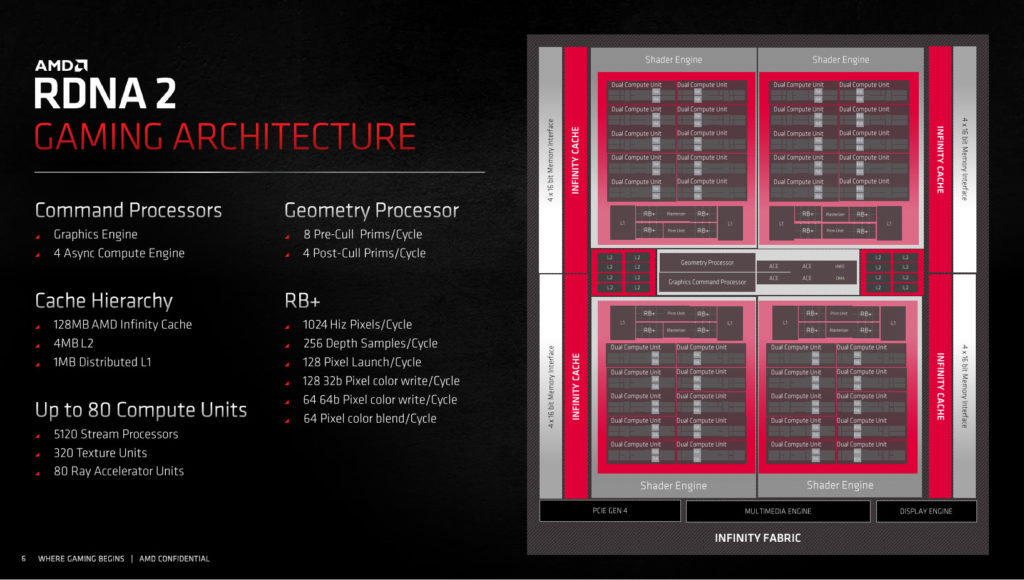

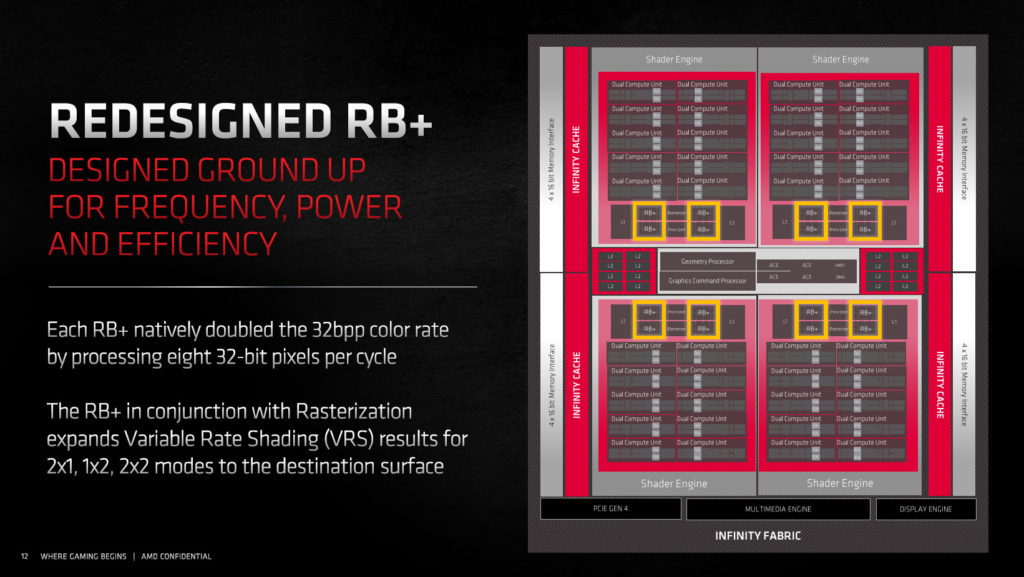

RDNA 2

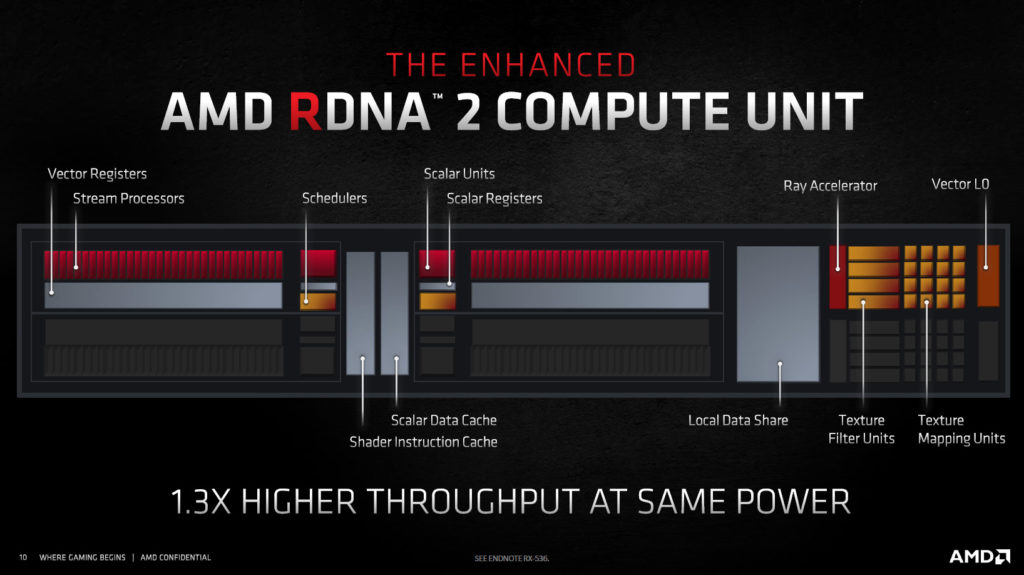

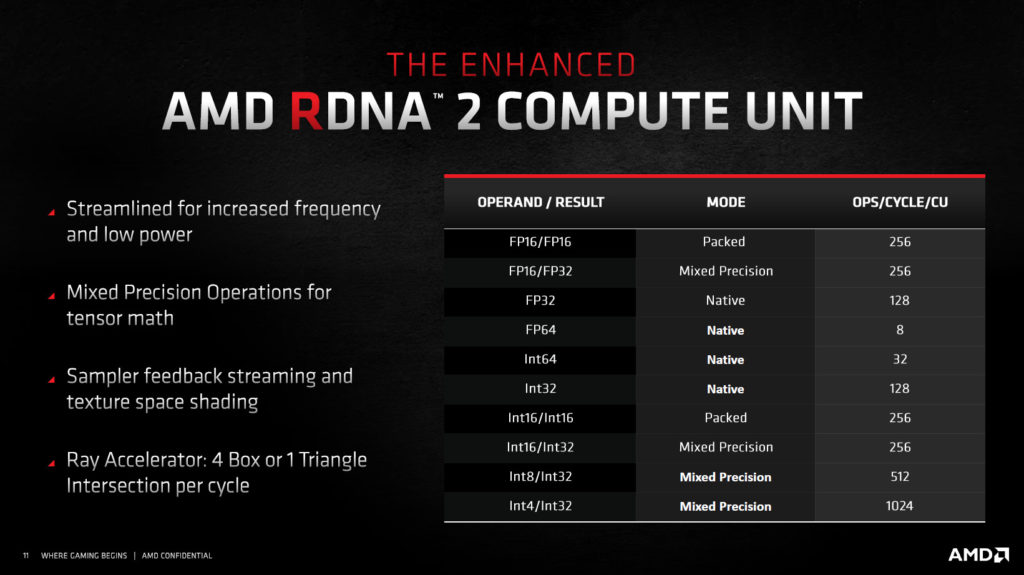

Let’s dive into the RDNA2 architecture and see what makes these GPUs tick before we get to the specs of both cards. The RDNA2 architecture is designed for performance and for a big upgrade in performance per Watt, or efficiency. It is also built for DX12 Ultimate and supports all of its new features. RDNA2 was also designed to reach high frequencies, and it integrates a new type of cache. The manufacturing process is the same TSMC 7nm, but AMD has improved the design to hit a higher frequency target and to achieve greater density, with more CUs and added features.

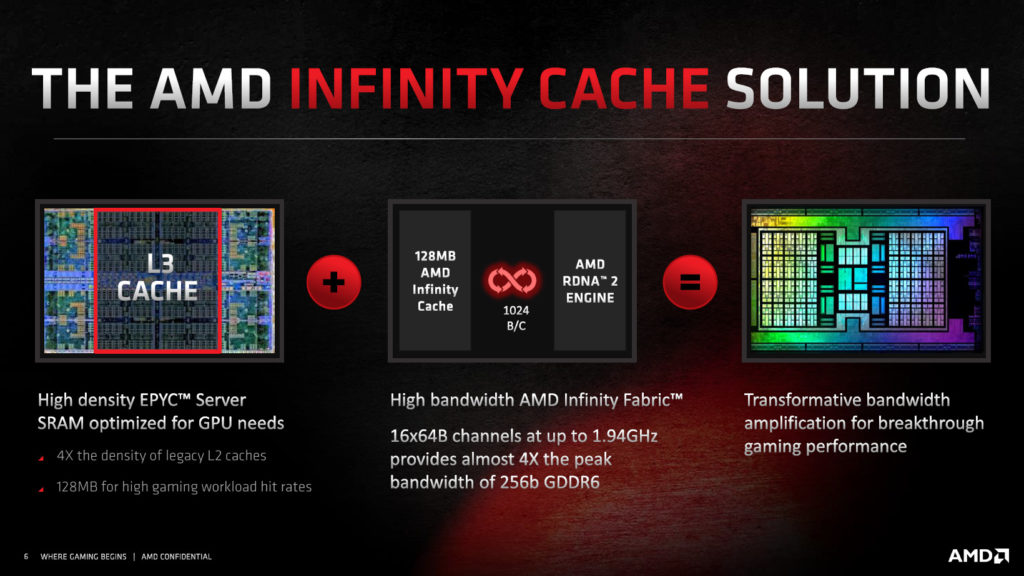

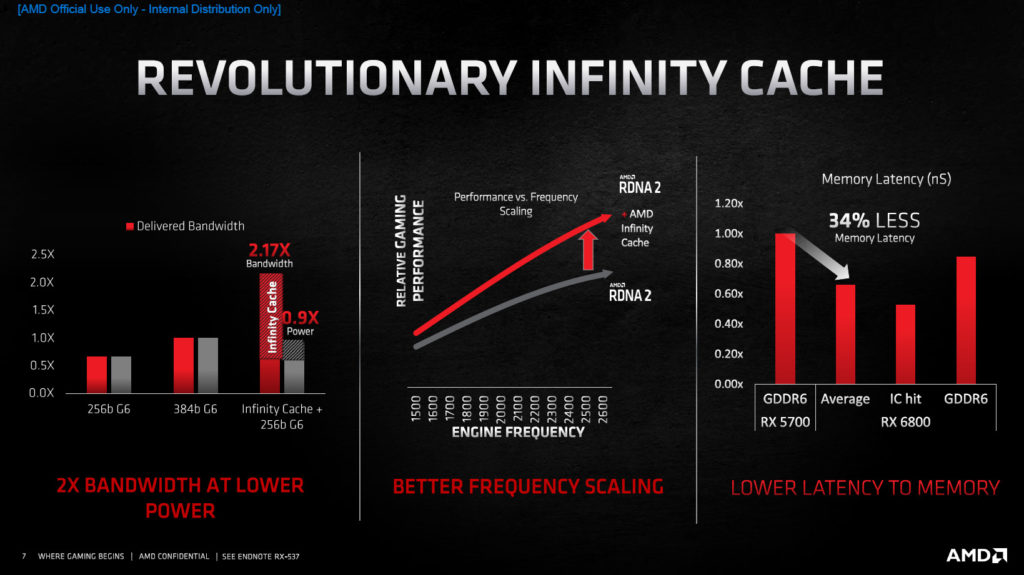

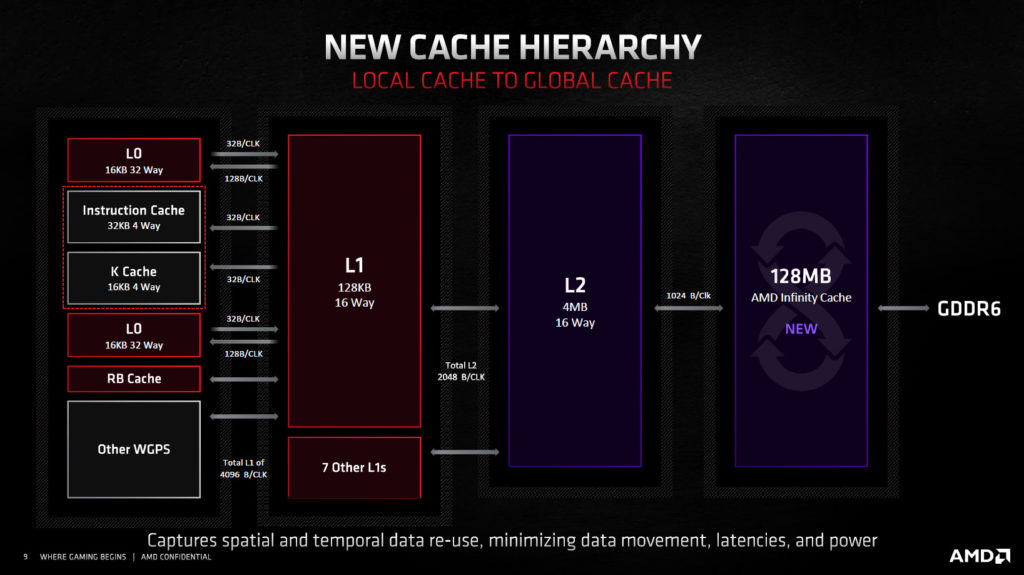

One of the most important new features in RDNA2 is a new cache that AMD calls Infinity Cache. This is 128MB of embedded memory inside the GPU that all CUs can address directly. The goal is to improve performance per Watt, reduce latency, and optimize for high internal frequencies. This is one major new feature that separates RDNA2 from previous generations, and we expect to see it expanded and further optimized in future generations.

The goal of making AMD RDNA 2 a highly power-efficient architecture resulted in the creation of the AMD Infinity Cache, a cache level that alters the way data is delivered in GPUs. This global cache allows fast data access and acts as a massive bandwidth amplifier, enabling high effective bandwidth with superb power efficiency.

A highly optimized on-die cache results in frame data being delivered with much lower energy per bit. With 128MB of AMD Infinity Cache, up to 3.25x the effective bandwidth of 256-bit GDDR6 is achieved, and when power is added to the equation, up to 2.4x more effective bandwidth per watt versus 256-bit GDDR6.
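To put the 3.25x figure in perspective, here is a rough back-of-the-envelope sketch. It assumes 16 Gbps GDDR6 on the 256-bit bus, a data rate that is not stated above, and it treats AMD's "up to" multiplier as a simple scaling factor. The function name is ours, for illustration only.

```python
def gddr6_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Raw memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Assumption: 16 Gbps per pin (not given in the text above).
raw_gbs = gddr6_bandwidth_gbs(256, 16.0)

# AMD's quoted best-case multiplier with 128MB of Infinity Cache.
INFINITY_CACHE_MULTIPLIER = 3.25
effective_gbs = raw_gbs * INFINITY_CACHE_MULTIPLIER

print(f"Raw 256-bit GDDR6 bandwidth: {raw_gbs:.0f} GB/s")
print(f"Best-case effective bandwidth: {effective_gbs:.0f} GB/s")
```

Under those assumptions the raw bus delivers 512 GB/s and the best-case effective figure works out to 1664 GB/s. Real-world results depend on how often a given workload actually hits the cache.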

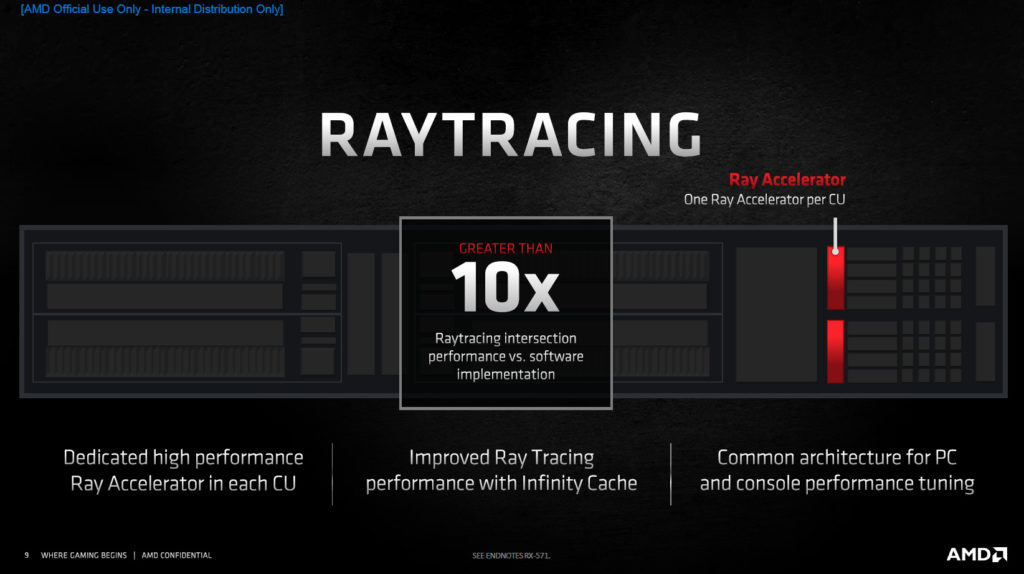

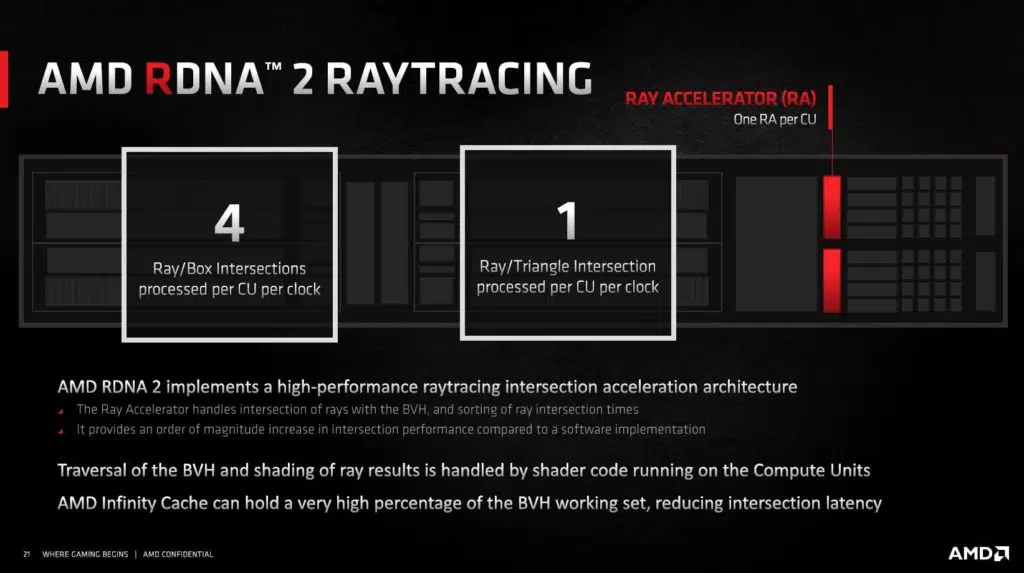

RDNA2 also includes some new hardware in the form of dedicated Ray Accelerators, one per CU. The Ray Accelerator handles the intersection of rays with the BVH and the sorting of ray intersection times. Each Ray Accelerator can process 4 Ray/Box intersections per CU per clock and 1 Ray/Triangle intersection per CU per clock. Traversal of the BVH and shading of ray results are handled by shader code running on the CUs. The Infinity Cache can hold a high percentage of the BVH working set, reducing intersection latency. RDNA2 supports DX12 Ultimate, which includes DXR for Ray Tracing, Variable Rate Shading, Mesh Shaders, and Sampler Feedback.
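For readers who want a feel for what a Ray/Box intersection test actually computes, here is a minimal software sketch using the common "slab" method. This is our own illustration of the general technique, not AMD's hardware implementation, and the function name and tuple-based vectors are hypothetical.

```python
import math
from typing import Optional, Sequence


def ray_box_entry(origin: Sequence[float], direction: Sequence[float],
                  box_min: Sequence[float], box_max: Sequence[float]) -> Optional[float]:
    """Slab test: return the distance at which the ray enters the
    axis-aligned box, or None if the ray misses it."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Narrow the interval in which the ray is inside every slab so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near


unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
print(ray_box_entry((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))   # ray aimed at the box
print(ray_box_entry((0.0, 0.0, -5.0), (0.0, 0.0, -1.0), *unit_box))  # ray aimed away
```

The entry distance returned here is the kind of value that lets intersections be ordered along a ray. A BVH traversal repeats a test like this for the boxes at each level of the tree, which is why doing several per clock in dedicated hardware matters.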

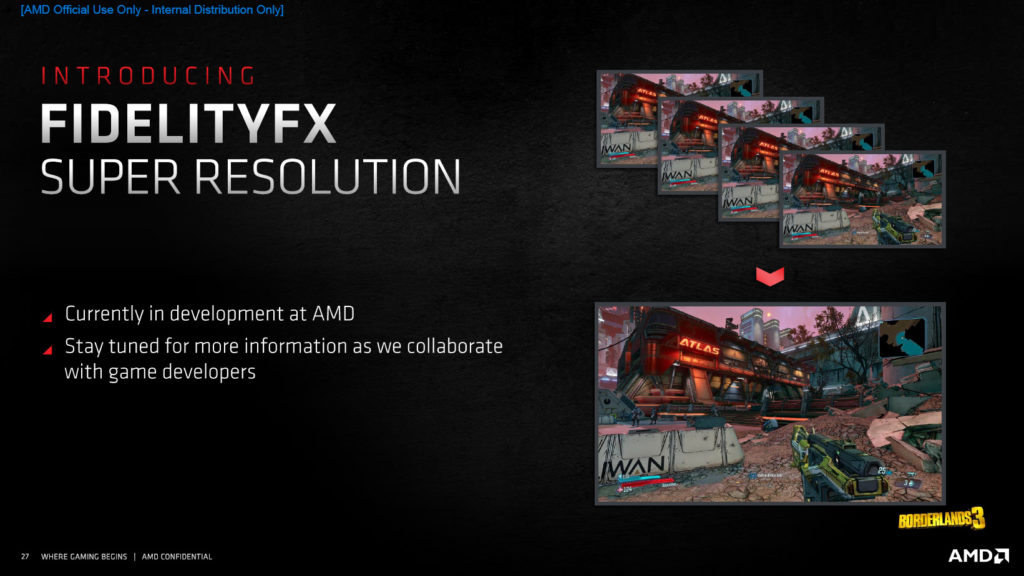

The power savings result in the Radeon RX 6800 XT being a 300W TDP video card and the Radeon RX 6800 a 250W TDP video card. AMD does state that both are capable of 4K gaming. AMD is working on a Super Resolution feature similar to NVIDIA’s DLSS, but it is not yet implemented; that will be a future addition.

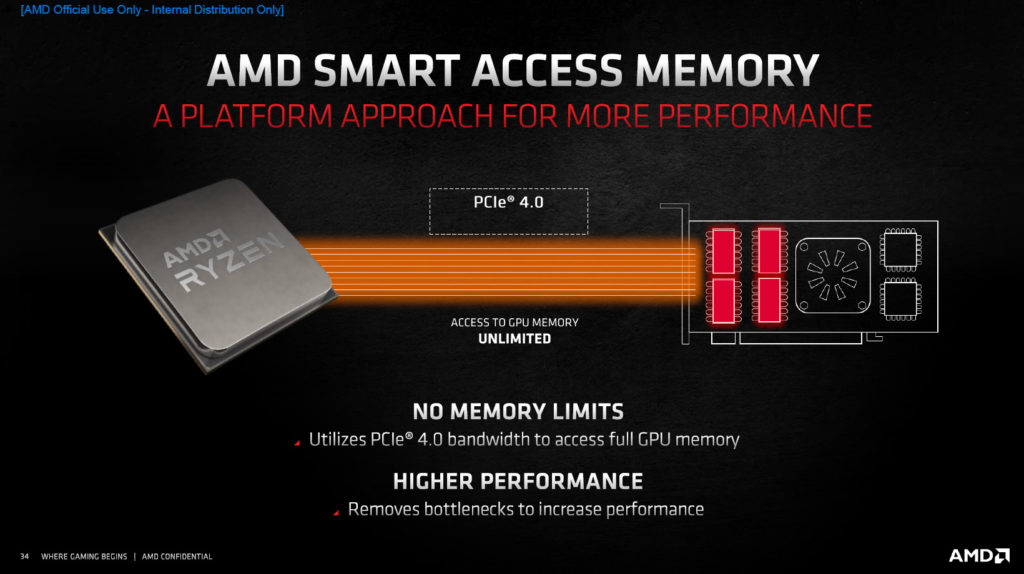

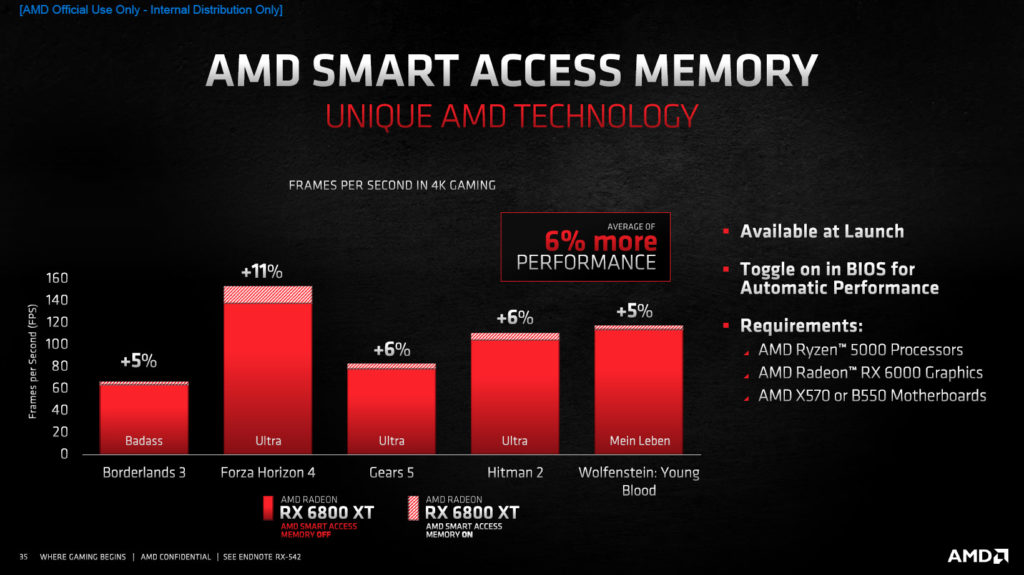

Smart Access Memory

AMD supports a new feature it calls Smart Access Memory. However, it is actually built on a feature of the PCI-Express specification called Resizable BAR. It can be enabled on a platform basis in PCI-Express 4.0, and possibly even down to PCI-Express 3.0. Right now, AMD is utilizing the feature, but you need an AMD 500 series motherboard, a Zen 3 CPU, and a Radeon RX 6000 series GPU to enable it. You will also need to download a new BIOS for your motherboard and turn the feature on there, as it is off by default. AMD’s plans call for this option to be enabled by default in future motherboard releases.
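The launch requirements can be summed up as a simple checklist. The sketch below is purely illustrative: the `Platform` fields and the function name are hypothetical, it does not query real hardware, and it only encodes the combination described above (an AMD 500 series board, a Zen 3 CPU, a Radeon RX 6000 series GPU, and the BIOS option switched on).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Platform:
    # All fields are hypothetical descriptors filled in by hand.
    chipset_series: int          # e.g. 500 for an AMD 500 series motherboard
    cpu_architecture: str        # e.g. "Zen 3"
    gpu_series: str              # e.g. "RX 6000"
    resizable_bar_enabled: bool  # BIOS toggle, off by default


def missing_sam_requirements(p: Platform) -> List[str]:
    """Return the launch-time Smart Access Memory requirements not yet met."""
    missing = []
    if p.chipset_series != 500:
        missing.append("AMD 500 series motherboard")
    if p.cpu_architecture != "Zen 3":
        missing.append("Zen 3 CPU")
    if p.gpu_series != "RX 6000":
        missing.append("Radeon RX 6000 series GPU")
    if not p.resizable_bar_enabled:
        missing.append("Resizable BAR enabled in BIOS")
    return missing


fresh_build = Platform(500, "Zen 3", "RX 6000", resizable_bar_enabled=False)
print(missing_sam_requirements(fresh_build))
```

For a fresh build with the right parts, the only item left on the list is the BIOS toggle, which matches the default-off behavior described above.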

AMD recently made an announcement clarifying this feature. AMD states that the feature is not proprietary and can work with other hardware. NVIDIA also chimed in, stating that it too can support this PCI-Express feature on the GeForce RTX 30 series, and that it will do so in the future with similar performance results. The uplift reported by AMD is in the single-digit percentage range (5-6% on average). So, right now, the feature is disabled by default, requires a specific hardware combination with a new BIOS update, and must be turned on manually in the BIOS. This may change in the future as it is adopted, turned on by default, and supported by both AMD and NVIDIA.