Test Setup

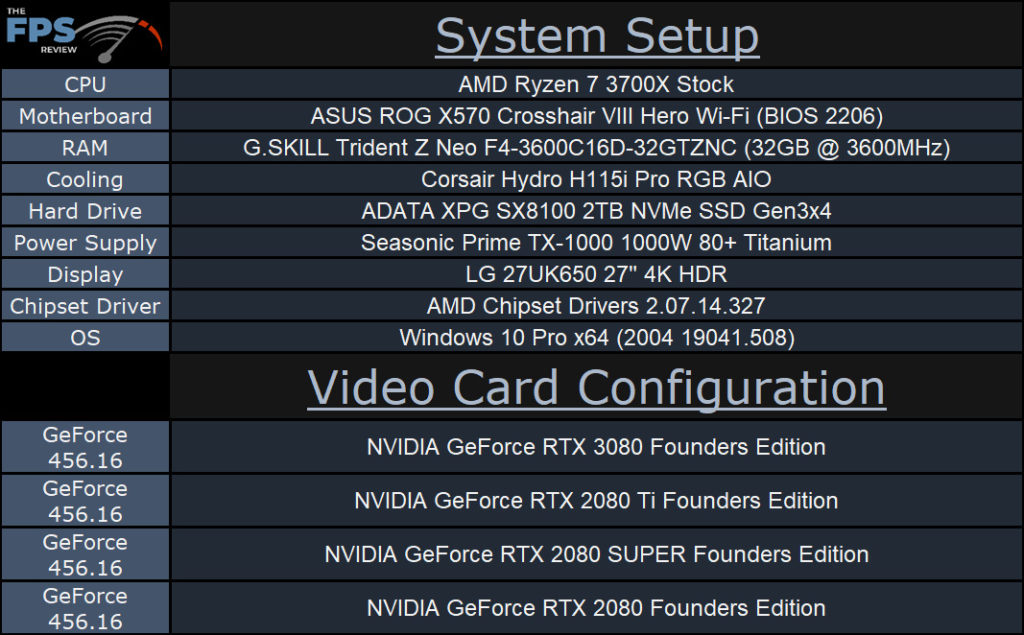

Please read our GPU Test Bench and Benchmarking Refresher for an explanation of our test system, procedures, and goals. More information on our GPU testing can be found here. Our KIT page lists every component in the test system configuration we use for reviewing video cards.

NVIDIA provided us with a press driver, version 456.16, for the GeForce RTX 3080 Founders Edition, and we used this driver on every video card.