Memory Bandwidth

AIDA64

AIDA64 includes a set of benchmarks for measuring memory bandwidth as well as various latency values.

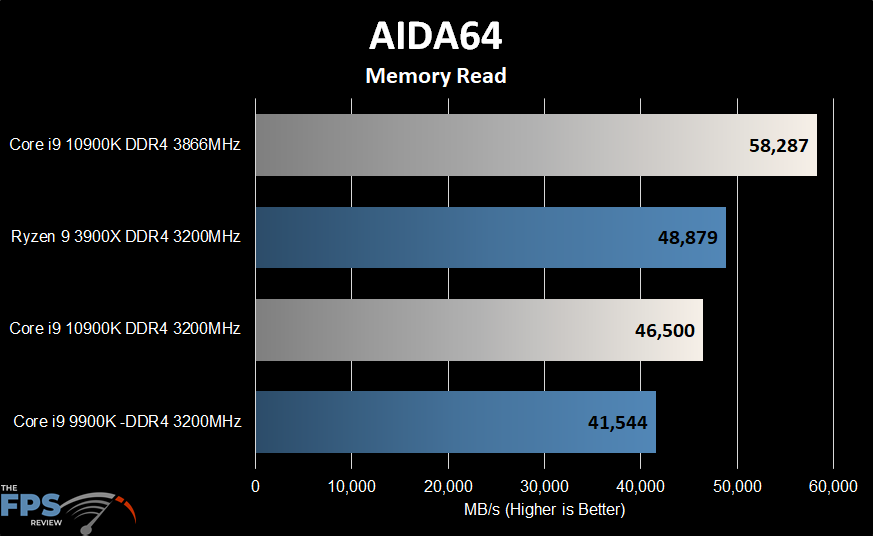

Memory Read

Here we can see solid performance out of all the test systems. The memory controller on the 3900X is very competitive here, turning in a slightly higher score than our 10900K. However, the difference is fairly small. We do see a marked improvement in the 10900K compared to the outgoing 9900K.

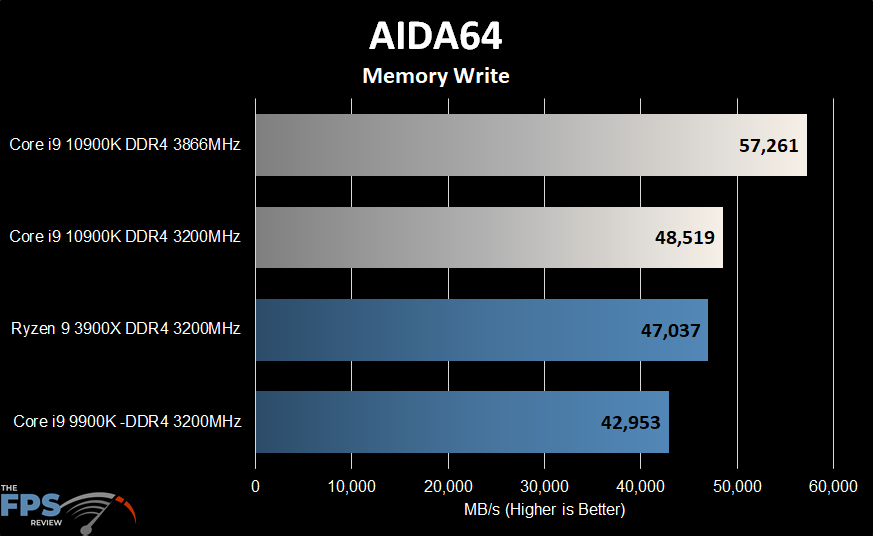

Memory Write

The AMD and Intel mainstream CPUs trade blows here, with the Intel 10900K coming out slightly ahead. Again, Intel has clearly improved the memory controller in its 10th-generation CPUs.

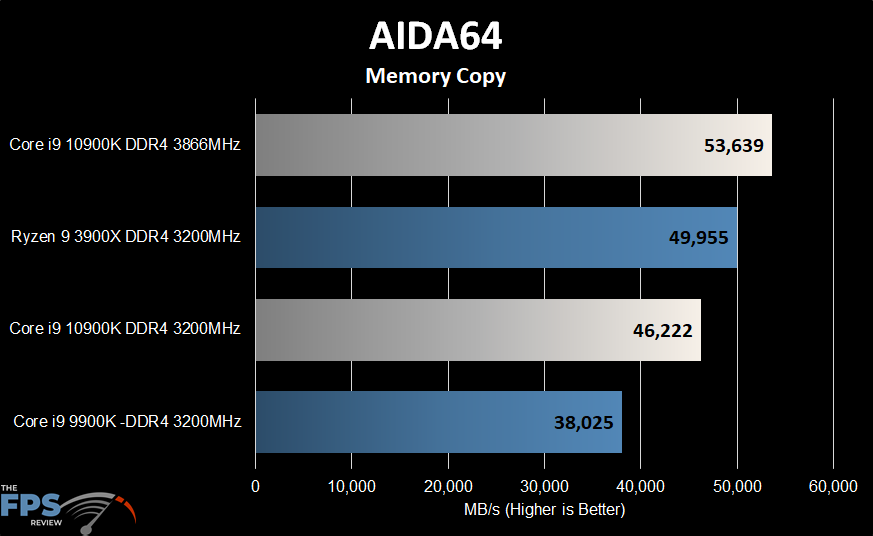

Memory Copy

Here, the AMD Ryzen 9 3900X is considerably faster than the Intel Core i9-10900K. Still, Intel improves on the previous iteration of its Skylake architecture by a good margin. One thing to point out is that while Intel may win some of these tests and lose others, its CPUs are not subject to the Infinity Fabric clock divider that limits the Ryzen 3000 series beyond about DDR4-3800. Overclocked to DDR4-3866, Intel can easily win these memory tests.
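To put the DDR4 speed ratings above in context, here is a minimal sketch of the arithmetic behind them. It assumes a standard 64-bit (8-byte) DDR4 channel and a dual-channel configuration, and it treats the point at which the Ryzen 3000 fabric divider kicks in as a 1900 MHz memory clock, which is only our reading of "about DDR4-3800" and varies from chip to chip. The function names and the `ASSUMED_FABRIC_LIMIT_MHZ` constant are illustrative, not part of any benchmark tool.

```python
# Illustrative arithmetic only; names and the fabric limit are assumptions.

# Assumed memory clock (MHz) above which Ryzen 3000 engages its fabric
# divider. Derived from "about DDR4-3800"; real chips vary.
ASSUMED_FABRIC_LIMIT_MHZ = 1900.0


def memory_clock_mhz(transfer_rate_mts: float) -> float:
    """DDR transfers data twice per clock, so the clock is half the rating."""
    return transfer_rate_mts / 2


def peak_bandwidth_gbs(transfer_rate_mts: float,
                       channels: int = 2,
                       bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return transfer_rate_mts * 1e6 * bus_bytes * channels / 1e9


def within_fabric_limit(transfer_rate_mts: float,
                        limit_mhz: float = ASSUMED_FABRIC_LIMIT_MHZ) -> bool:
    """True if the memory clock stays at or below the assumed limit."""
    return memory_clock_mhz(transfer_rate_mts) <= limit_mhz


if __name__ == "__main__":
    for rating in (3200, 3600, 3800, 3866):
        print(rating,
              memory_clock_mhz(rating),
              round(peak_bandwidth_gbs(rating), 1),
              within_fabric_limit(rating))
```

Measured read, write, and copy figures will always land below the theoretical peak, so these numbers are best used as a ceiling when comparing the charts, not as a prediction of any one result.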

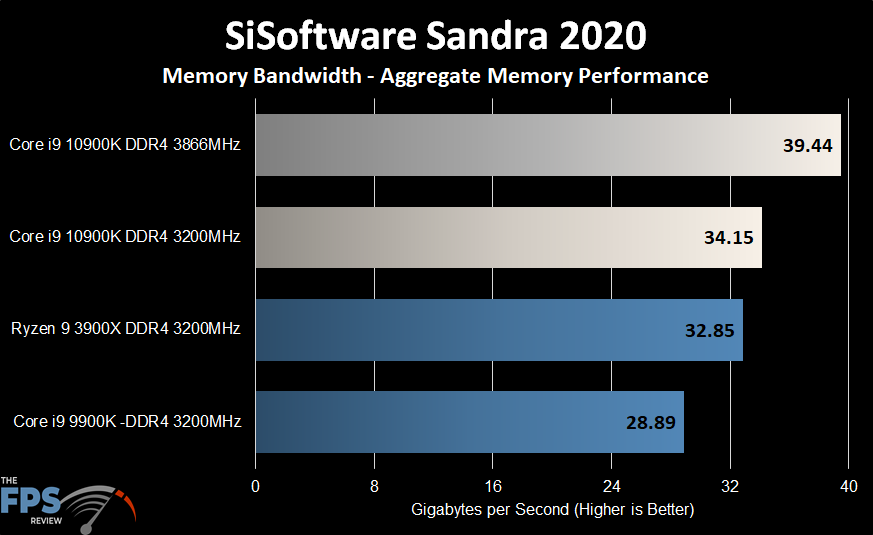

Sandra Memory Bandwidth

This latest iteration of Sandra shows us aggregate memory performance, rather than splitting up the values as we did with AIDA64.

This test reports raw memory bandwidth for each test configuration. Here the strength of Intel’s memory controller shows, as it produces the highest results overall.