Power Consumption

Generally, it is this author’s opinion that the power consumption of a desktop CPU matters very little given the role it plays. Battery life isn’t usually a concern, and standard desktop form factors leave room for more substantial cooling.

That’s not to say that I don’t believe power matters. It does. Performance per watt for Intel’s Core i9 10980XE was absolutely awful compared to AMD’s Ryzen 9 3950X. While the 10980XE was often faster (especially when overclocked), it pulled nearly twice as much power despite having only two more cores. This is an extreme example, but it shows that power draw does make a difference. In my opinion, you absolutely need custom liquid cooling to get the most out of any 10980XE build. Of course, all things being equal between two CPUs, it makes sense to get the one that uses less power.
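To put the performance-per-watt point in numbers, here is a minimal Python sketch. The function name `relative_efficiency` and the 10% performance advantage are illustrative assumptions, not measured results; only the roughly 2x power figure comes from the comparison above.

```python
def relative_efficiency(perf_ratio: float, power_ratio: float) -> float:
    """Return chip A's performance per watt relative to chip B's.

    perf_ratio  = A's benchmark score / B's benchmark score
    power_ratio = A's power draw / B's power draw
    """
    if perf_ratio <= 0 or power_ratio <= 0:
        raise ValueError("ratios must be positive")
    return perf_ratio / power_ratio


# Hypothetical inputs: chip A is 10% faster (assumed, not measured)
# while drawing about twice the power.
example = relative_efficiency(perf_ratio=1.10, power_ratio=2.0)
print(f"{example:.2f}")  # 0.55
```

Under those assumed numbers, the faster chip delivers only a little more than half the work per watt.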

As I stated earlier, Intel raised the TDP to 125W for the 10900K, up from 95W on its predecessor. That rating only applies at base clock speeds. Once turbo frequencies come into play, the actual power draw gets quite a bit larger.

Power Testing

Our power consumption methodology is quick and dirty. It involves using a Kill-A-Watt device with only the test machine connected to it sans monitor and any external devices. The idle power is tested at the Windows desktop on a clean system while doing nothing but running background tasks. Load testing is done by using Cinebench R20 in a multi-threaded test. The power is then observed on the Kill-A-Watt instrument.

These devices are not known for being super accurate, so this is a ballpark measurement. Cinebench R20 is largely used because it doesn’t utilize the GPU, which keeps the impact of that component to a minimum. Every system we’ve tested from day one has been tested in this manner.

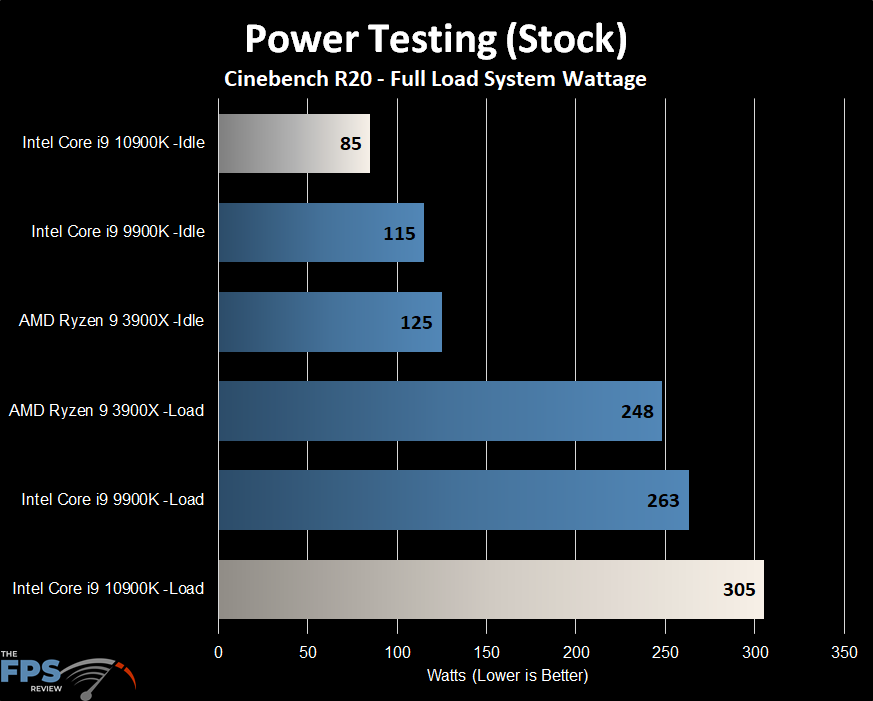

While idling at stock speeds, the 10900K system’s power consumption was surprisingly low, coming in at 85W. This is a fairly substantial improvement over its predecessor, which idles at 115W in this test.

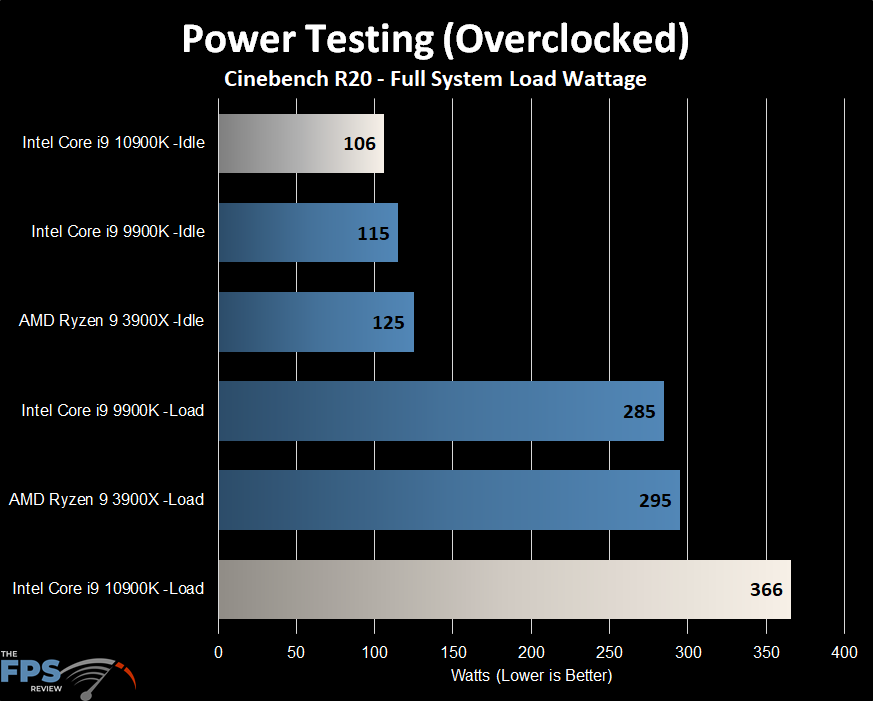

However, things get ugly when you overclock the 10900K. Doing so quickly increases the system’s total power draw under load to 366W. Idle increases to 106W, which is still below that of the 9900K. Clearly, Intel has improved its efficiency enough to allow for two additional cores while still gaining some reduction in consumption. That said, this is in no way a good result. The CPU uses far more total power under load than the Ryzen 9 3900X does, despite the latter having two more cores. In fact, while not shown here, these numbers are closer to those of a Ryzen 9 3950X, which has six more cores and 12 more threads than the 10900K does.
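Because the Kill-A-Watt reads the whole system at the wall, one rough way to see what the load itself adds is to subtract the idle reading from the load reading. The sketch below does that for the overclocked figures quoted above. The function name is my own, and the result still includes power supply losses and anything else that ramps up under load, so treat it as a ballpark rather than a CPU-only number.

```python
def load_delta_watts(idle_watts: float, load_watts: float) -> float:
    """Wall power added by the workload: load reading minus idle reading."""
    if load_watts < idle_watts:
        raise ValueError("load reading should not be below idle reading")
    return load_watts - idle_watts


# Overclocked 10900K system, wall readings taken from the text above.
oc_idle = 106.0
oc_load = 366.0
print(load_delta_watts(oc_idle, oc_load))  # 260.0
```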

Temperature Testing

Temperature testing is quick and dirty. Using Cinebench R20 to load the cores, we checked the package temperature via AIDA64. While I do have an overclocked result, it’s important to note that these aren’t final overclocking temperatures and aren’t indicative of long-term stability testing results.

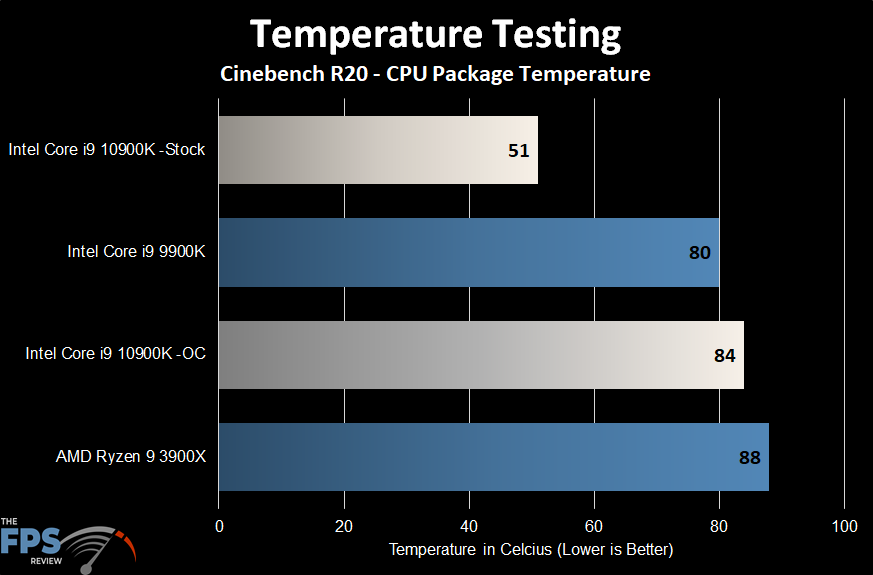

At stock speeds, the temps for the 10900K were extremely low. This shows Intel has definitely increased its thermal efficiency somehow. The older 9900K in the same circumstances has two fewer cores and is considerably warmer. I had thought the reading might be in error until the 10900K was overclocked, at which point it reached much higher temperatures that were more in line with the other test systems.