Gaming Benchmarks Part 1

Now we come to the meat and potatoes of the Core i9-10900K. This is a follow-up to the world’s fastest gaming processor, and Intel claims it is the new king of that arena. Frankly, it has to be, because outside of gaming it gets pummeled pretty badly by the Ryzen 9 3900X, which has been around for the better part of a year now.

Generally, we are more GPU-bound than CPU-bound, but as you’ll see, the CPU still matters even in GPU-bound conditions. Also, many people still game at 1080p, a somewhat CPU-bound resolution that favors stronger CPUs.

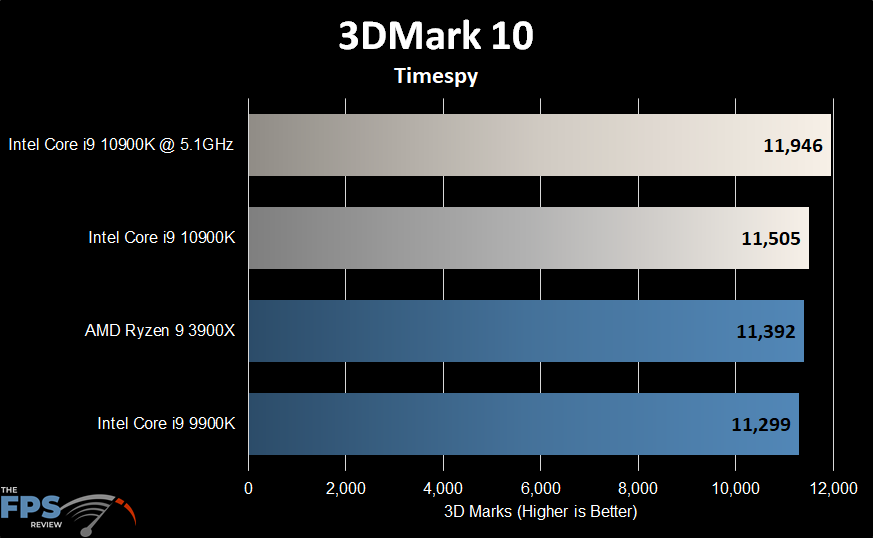

3DMark 10

Intel CPUs tend to outscore their AMD counterparts in this test, so I am not terribly shocked by the outcome. We can also see that an all-core 5.1GHz overclock earns us some extra points.

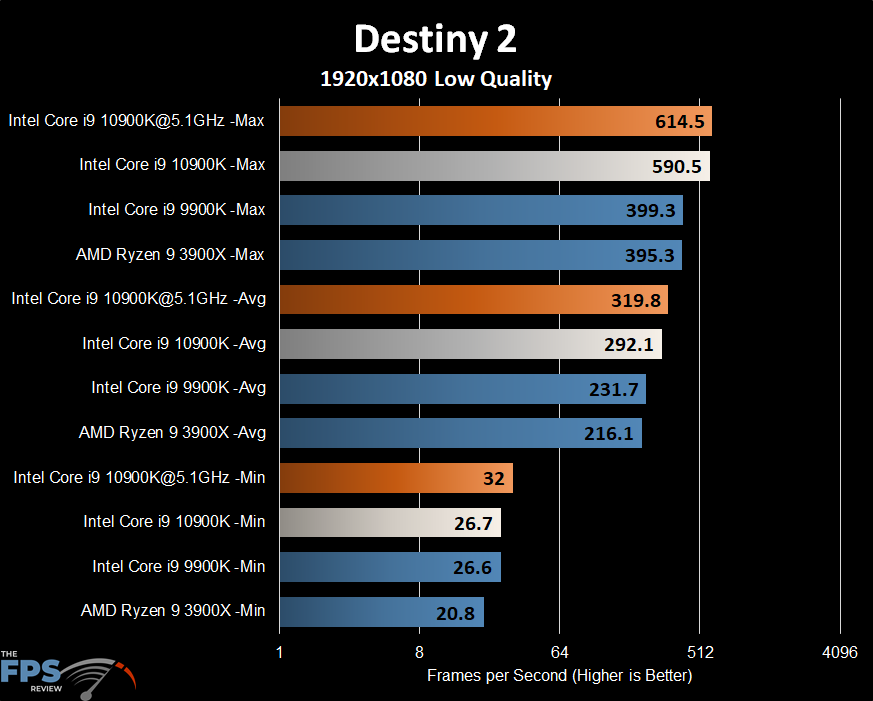

Destiny 2

I apologize for the ugly graphs. The logarithmic scales were necessary given the huge gap between the minimum and maximum frame rates here. As you can see, the overclocked 10900K achieves the best performance across the board. Even so, our FPS can drop really low in areas where there are lots of enemies, explosions, and weapons fire. Intel does gain quite a bit over the 9900K with the higher clock speeds and additional cache of the 10900K.
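To illustrate why a logarithmic axis helps with this kind of data, here is a minimal Python sketch. It computes how far along an axis a bar would extend on a linear scale versus a logarithmic one. The function name `bar_fraction` and all of the numbers are made up for illustration. They are not measurements from this review, and this is not how the graphs were actually produced.

```python
import math


def bar_fraction(value, axis_min, axis_max, log_scale=False):
    """Return how far along the axis (0.0 to 1.0) a bar for `value` extends.

    Hypothetical helper for illustration only.
    """
    if log_scale:
        # A logarithmic axis cannot start at zero or include negative values.
        if value <= 0 or axis_min <= 0:
            raise ValueError("log scale requires positive values")
        span = math.log10(axis_max) - math.log10(axis_min)
        return (math.log10(value) - math.log10(axis_min)) / span
    return (value - axis_min) / (axis_max - axis_min)


# Illustrative numbers only, not results from the review.
axis_min, axis_max = 1.0, 1000.0
low_fps, high_fps = 10.0, 500.0

for label, fps in (("low", low_fps), ("high", high_fps)):
    linear = bar_fraction(fps, axis_min, axis_max)
    logarithmic = bar_fraction(fps, axis_min, axis_max, log_scale=True)
    print(f"{label}: linear={linear:.3f} log={logarithmic:.3f}")
```

With values this far apart, the low bar covers less than one percent of a linear axis, which makes it effectively invisible, while on a logarithmic axis it covers about a third of the width and remains readable next to the high bar.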

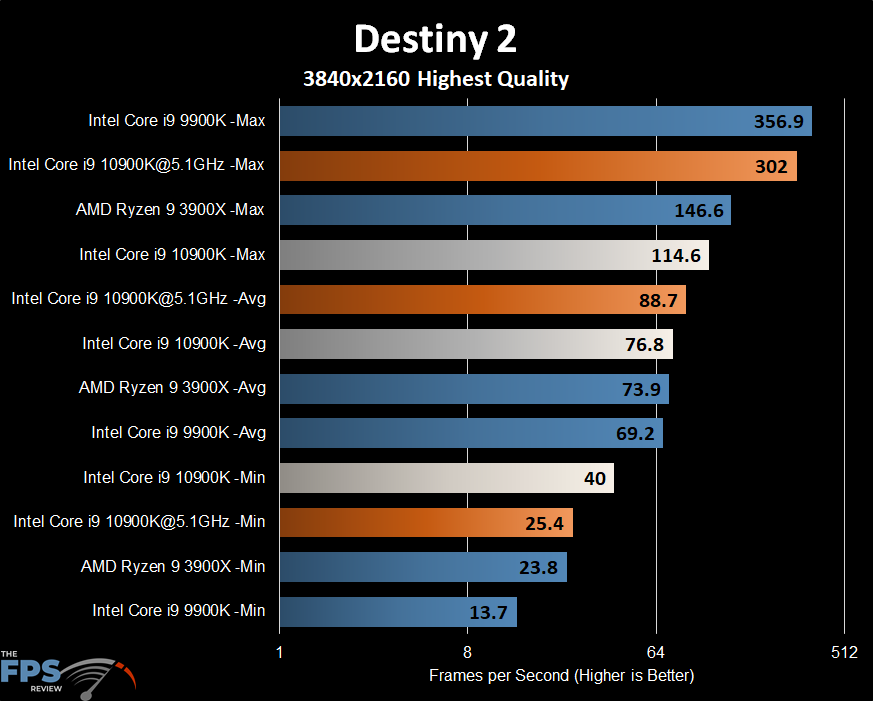

Again, at 4K we see solid gains going from the 9900K to the 10900K. It is interesting to note that the 9900K has the worst minimum frame rates here, a sharp contrast to almost a year ago when I first tested the 3900X in this game. Aside from the maximum frame rates, the 3900X is a better choice than the aging 9900K. However, the 10900K is the fastest where it counts, although I can’t explain why the 9900K still has higher maximums.