Rendering Benchmarks

Here, we are looking at each CPU’s ability to perform rendering and encoding tasks.

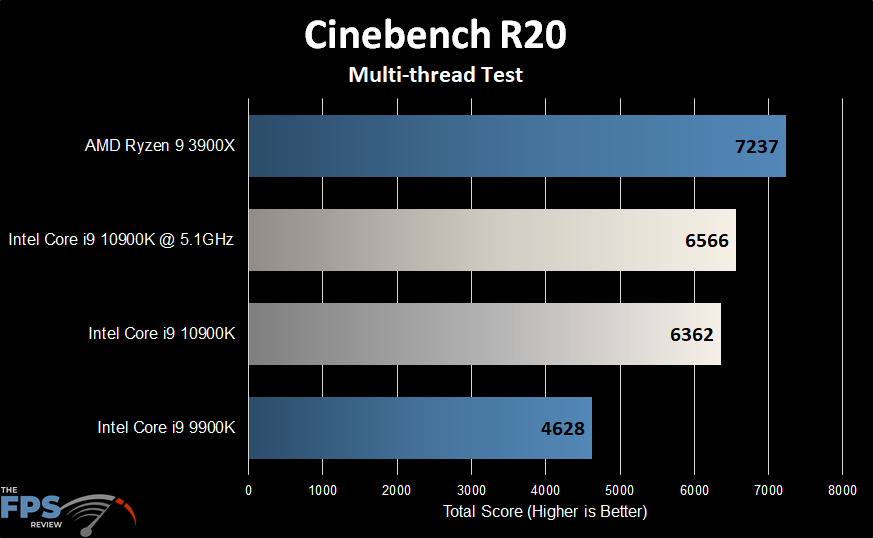

Cinebench R20 Multithread

Here we can see Intel close the gap with AMD quite a bit. However, with fewer cores than the 3900X, closing it completely is a virtually impossible task. Again, we see a major improvement over the outgoing Core i9-9900K.

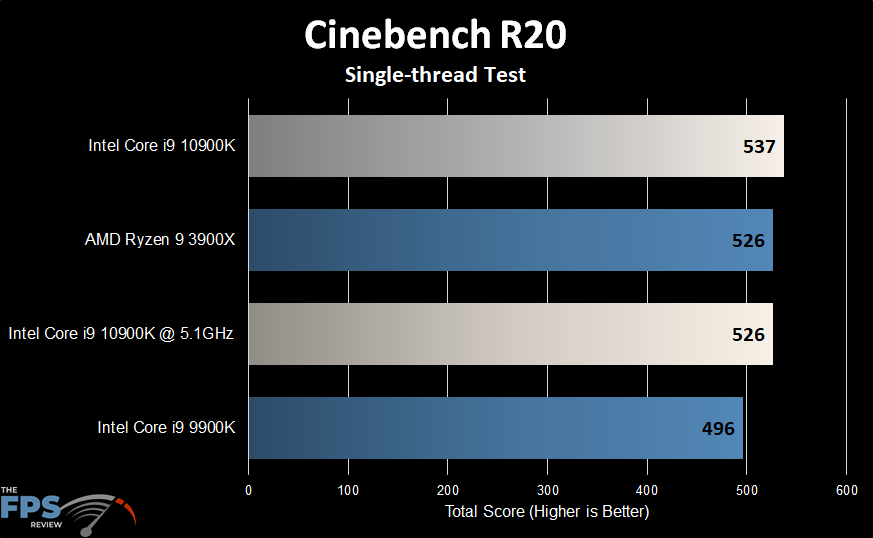

Cinebench R20 Single Thread

Given the vastly different clock speeds of the 3900X and the Core i9-10900K, one can easily see AMD’s impressive IPC gains over the Zen+ cores. Indeed, Comet Lake has to hit very high clock speeds just to maintain parity, much less pull ahead. The gains are there over the older 9900K, but they aren’t huge.
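To make the per-clock reasoning concrete, here is a minimal sketch of how one might normalize a single-thread score by clock speed to get a rough IPC proxy. The function names are hypothetical, and the inputs are placeholders rather than results from our charts. It also assumes you know the clock each chip actually sustained during the run, which is not necessarily the advertised boost clock.

```python
def points_per_ghz(score: float, clock_ghz: float) -> float:
    """Rough per-clock throughput: benchmark score divided by sustained clock."""
    if clock_ghz <= 0:
        raise ValueError("clock_ghz must be positive")
    return score / clock_ghz


def per_clock_advantage(score_a: float, clock_a_ghz: float,
                        score_b: float, clock_b_ghz: float) -> float:
    """Ratio of chip A's per-clock throughput to chip B's (1.0 means parity)."""
    return points_per_ghz(score_a, clock_a_ghz) / points_per_ghz(score_b, clock_b_ghz)


# Placeholder inputs for illustration only; these are NOT measured results.
# Two chips posting the same score at 4.5 GHz and 5.0 GHz respectively:
# the lower-clocked chip is doing more work per clock.
ratio = per_clock_advantage(500.0, 4.5, 500.0, 5.0)
print(f"per-clock advantage: {ratio:.2f}x")
```

This is only a first-order estimate. Single-thread scores also depend on memory latency, cache behavior, and boost residency, so treat the ratio as a hint rather than a true IPC measurement.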

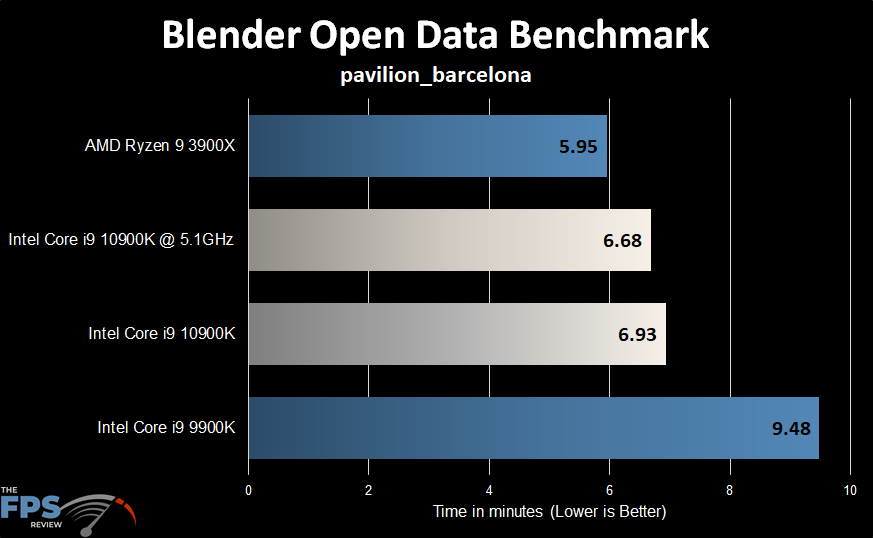

Blender Open Data Benchmark

This is the Blender Open Data Benchmark version 2.04. The benchmark lets you download multiple demo scenes and render up to six of them in sequence. Running all of them can take an extremely long time. You also have the option of testing different versions of Blender from the same launcher. We chose two of the six tests, the ones that seemed to have longer run times than the others.

Blender pavilion_barcelona

In this benchmark, we saw a dramatic difference between the old Intel Core i9-9900K and the 10900K. We also saw that the 10900K’s increased clocks and cache are still no match for the 3900X’s two extra cores and four extra threads.

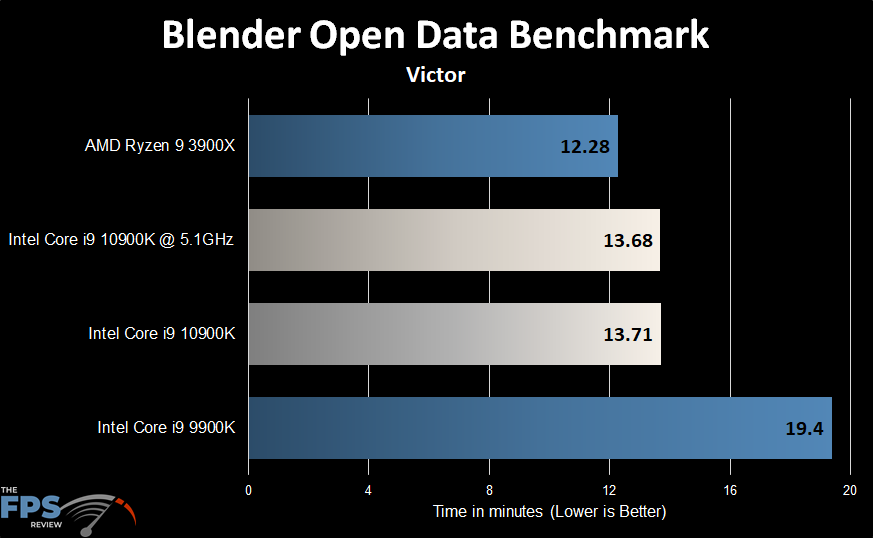

Blender Victor

In this test we see the same basic delta between each of the systems tested. The AMD Ryzen 9 3900X managed to edge out the best Intel has to offer in its mainstream segment.